
Visual Studio 2005 provides many types of tests for verifying an application's functional and operational requirements. This section examines some of these types in detail.

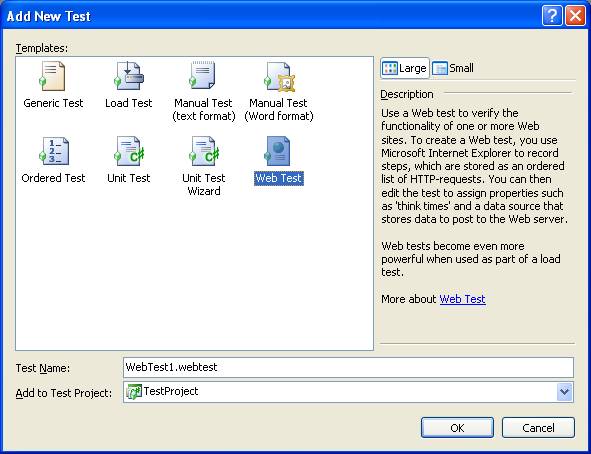

Creating a test in Visual Studio 2005 begins by invoking the new Add New Test Wizard. Figure 7-4 shows the Add New Test Wizard and the test types available to a C# Test Project. In addition to creating unit tests, testers can create generic, load, manual, ordered, and Web tests. Each test type has an associated designer and, in some cases, a wizard that guides the tester through the creation steps.

Figure 7-4

The Add New Test Wizard, which presents various test types to add to the test projects

Integration and system testing are generally performed by testers in a Quality Assurance (QA) group. Integration and system tests generally consist of scripted instructions that a tester follows to exercise specific features and integration points within an application. These scripts usually take the form of text documents stored in some repository. They are called "manual" tests because a human tester must manually execute each step in the script and record the results. Because the tools and repositories used for manual testing are typically separate from those used for automated unit testing, developers and testers end up learning and managing two separate processes for running tests and tracking bugs. Visual Studio 2005 Team System resolves this disparity by including manual tests as first-class project artifacts and fully integrating them into the test-running and defect-tracking systems.

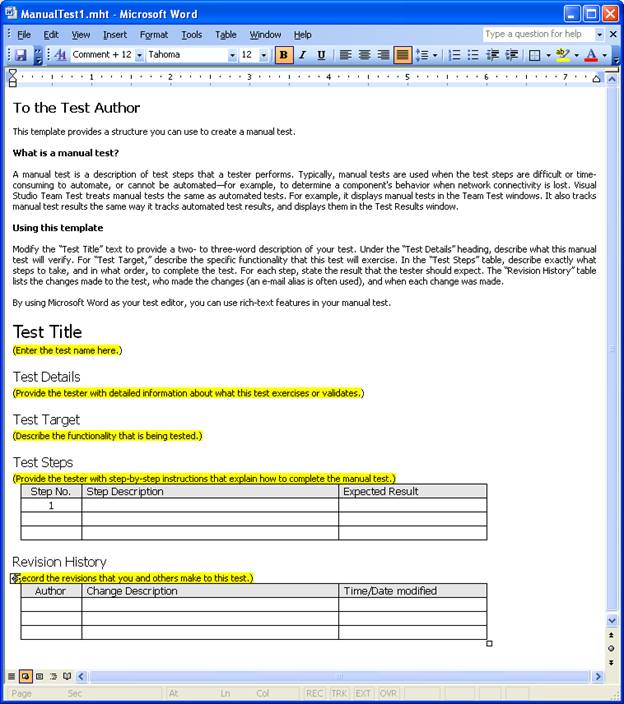

Manual tests are implemented as either plain text files or Microsoft Office Word documents. Figure 7-5 shows the default Word document provided for new tests. Testers can choose not to use the default document, though, and create their own templates instead. Visual Studio 2005 automatically launches Word when a new manual test is created so that test writers can work with a tool they're familiar with. In addition, they can leverage the Word importing features to ease migration of existing test scripts into VSTS.

Figure 7-5

The basic Word document provided by VSTS for use with manual tests

Testers begin the process of executing a manual test by running it from within Test Manager. Visual Studio launches the manual test runner, which displays the test script and provides input options to record the results of the test. The result information is maintained in the VSTS database separate from, but associated with, the test script. The status of the test remains Pending until the tester saves the results with either Pass or Fail. Upon completion of the process, a work item can be created for a failed test result and assigned to a developer.

Generic tests provide a mechanism to integrate with existing automated testing technologies or any other technology that does not integrate directly into Visual Studio 2005. For example, a test team might have an existing system for automated user interface testing of applications. Although Visual Studio 2005 Team System does not provide its own mechanism for Windows Forms testing, testers can create generic tests that wrap the existing testing tool. This feature allows them to execute the tests and record the results in Visual Studio with the rest of the tests. Another scenario where generic tests could be useful is in the automated testing of install/uninstall scripts. Testers could create a generic test that invokes the installation program with a particular set of parameters. If the test passes, they know the program installed successfully. A second generic test could run the uninstall program. If the test passes, they know the uninstall program ran without error. Testers could then run a third test, either unit or generic, that verifies that the application was fully uninstalled by checking registry entries, directory structures, and so on.

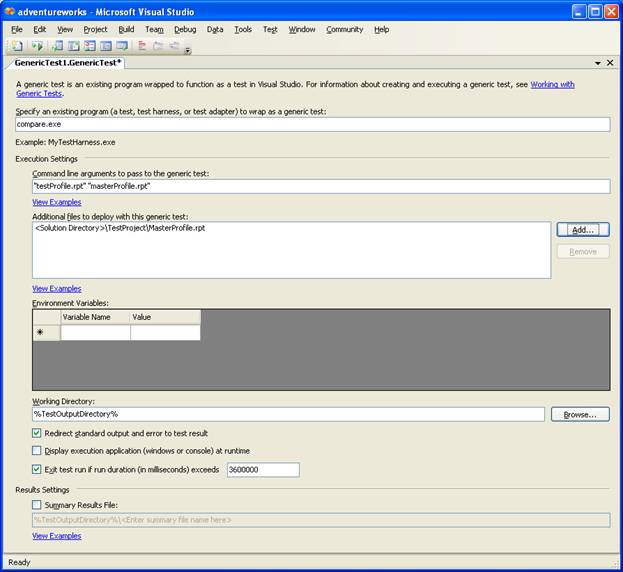

Figure 7-6 shows the generic test designer. There are many other optional settings, such as command-line arguments, files to deploy with the test, environment variables to set, the working directory, redirection of the test's standard output/error streams, time-outs, and others.

Figure 7-6

The generic test designer, which provides many options for defining how to invoke the external resource
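The idea of wrapping an external tool can be sketched in a few lines of Python. This is not the VSTS implementation; it is a minimal illustration that assumes the common convention that exit code 0 means success. The function name `run_generic_test` and its parameters are hypothetical and simply mirror the designer settings listed above (command-line arguments, environment variables, working directory, output redirection, and a time-out).

```python
import os
import subprocess
import sys


def run_generic_test(command, args=(), env_overrides=None, working_dir=None, timeout_s=60):
    """Run an external program and map its exit code to a test outcome.

    Hypothetical helper: exit code 0 is treated as Passed, anything else
    (including a time-out) as Failed.
    """
    env = dict(os.environ)
    env.update(env_overrides or {})
    try:
        completed = subprocess.run(
            [command, *args],
            env=env,
            cwd=working_dir,
            capture_output=True,  # redirect stdout/stderr into the result
            text=True,
            timeout=timeout_s,
        )
    except subprocess.TimeoutExpired:
        return {"outcome": "Failed", "reason": "timeout", "stdout": "", "stderr": ""}
    outcome = "Passed" if completed.returncode == 0 else "Failed"
    return {
        "outcome": outcome,
        "reason": f"exit code {completed.returncode}",
        "stdout": completed.stdout,
        "stderr": completed.stderr,
    }


if __name__ == "__main__":
    # Stand-ins for an installer and a failing tool, using the Python interpreter itself.
    install = run_generic_test(sys.executable, ["-c", "print('installed')"])
    broken = run_generic_test(sys.executable, ["-c", "import sys; sys.exit(3)"])
    print(install["outcome"], broken["outcome"])
```

In the install/uninstall scenario described earlier, each of the three tests would be one such invocation with its own command and arguments.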

If testers want to test the search page of a Web site without an automated system, they have to follow a manual script to navigate to the search page, enter a query value into a field, submit the page, and evaluate that the return page contains the expected results. Each time changes are made to the search system, a tester will need to manually re-execute the test. A Web test allows them to automate the testing of Web-based interfaces by recording browser sessions into editable scripts. They can then add value to the scripts with features such as validation and data binding. These scripts become tests that can be run at any time, individually, in groups, or as part of a load test by simply clicking a button.

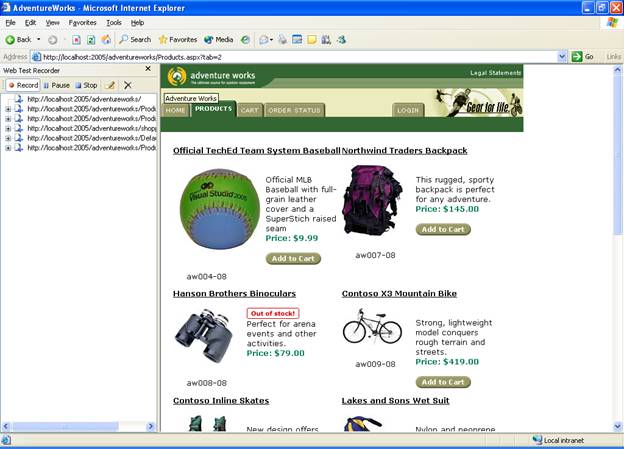

When testers create a new Web test, Visual Studio launches the Web Test Recorder, which is Internet Explorer extended with a utility that records each HTTP request sent to the server as the tester navigates the application. Figure 7-7 shows the Web Test Recorder in the middle of a session where the user is testing the search feature of a Web site. Testers can pause the recorder if they need to perform an action that should not be captured as part of the script, and can end the recording session by clicking the Stop button, at which point Visual Studio will open the test in the Web Test designer.

Figure 7-7

The Web Test Recorder, which automatically captures all requests in a browser session

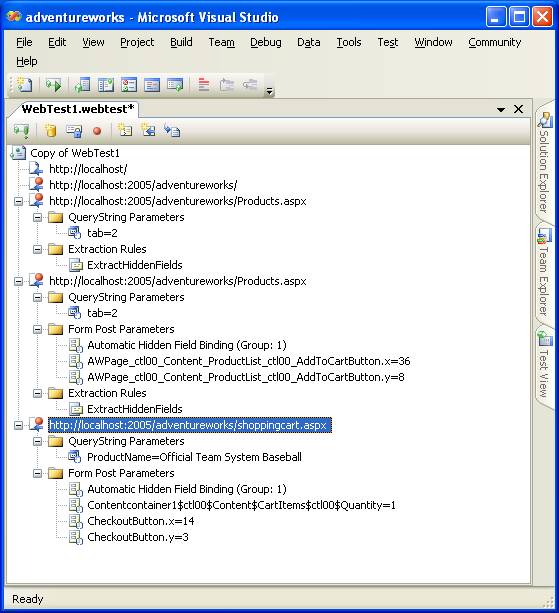

The Web Test designer allows testers to examine and modify a Web test by exposing the details of each HTTP request, including its headers, query string parameters, and post parameters. They can even create and insert entirely new requests from scratch. Taken together, these features let testers modify the test as requirements change, without forcing them to rerecord the entire script. Figure 7-8 shows the Web Test designer for the recorded test described earlier with some of the request nodes expanded.

Figure 7-8

The Web Test designer, which provides access to the details of each request and allows the addition of validation and data binding
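To make the notion of an editable recorded request concrete, here is a minimal Python sketch of a request record with headers, query string parameters, and post parameters, plus a simple form of data binding. The class `RecordedRequest`, the `{{column}}` placeholder syntax, and the `example.test` URL are assumptions made for this illustration and are not taken from the VSTS file format.

```python
from dataclasses import dataclass, field
from urllib.parse import urlencode


@dataclass
class RecordedRequest:
    """One recorded HTTP request, editable after recording (hypothetical model)."""
    method: str
    url: str
    headers: dict = field(default_factory=dict)
    query: dict = field(default_factory=dict)
    form: dict = field(default_factory=dict)

    def bind(self, row):
        """Return a copy with {{column}} placeholders replaced from a data row."""
        def fill(value):
            for column, cell in row.items():
                value = value.replace("{{" + column + "}}", str(cell))
            return value

        return RecordedRequest(
            self.method,
            self.url,
            dict(self.headers),
            {key: fill(value) for key, value in self.query.items()},
            {key: fill(value) for key, value in self.form.items()},
        )

    def full_url(self):
        """URL with the query string parameters encoded and appended."""
        return self.url + ("?" + urlencode(self.query) if self.query else "")


if __name__ == "__main__":
    search = RecordedRequest("GET", "http://example.test/search", query={"q": "{{term}}"})
    for row in [{"term": "unit tests"}, {"term": "load"}]:
        print(search.bind(row).full_url())
```

Binding produces a new request per data row, so one recorded search can be replayed with many query values without rerecording.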

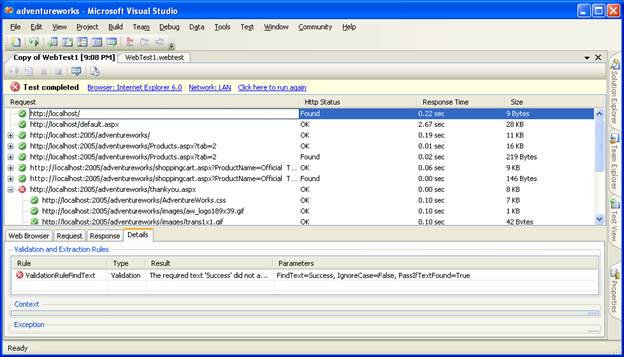

The last step is to validate that execution of the script produced the expected results. Web tests allow testers to validate results by attaching validation rules to requests. Although these rules appear in the designer attached to HTTP requests, they're actually used during execution to validate the HTTP response returned for that request. Figure 7-9 shows that a validation rule has been applied to the request. Testers configure validation rules through the Properties window.
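The relationship between a rule attached to a request and the response it actually checks can be sketched as follows. This is an illustrative Python model only; `Response`, `find_text_rule`, and `validate` are hypothetical names, and the rule shown (required text in the response body) is just one plausible kind of validation.

```python
from dataclasses import dataclass


@dataclass
class Response:
    """Minimal stand-in for an HTTP response (hypothetical model)."""
    status: int
    body: str


def find_text_rule(required_text, ignore_case=True):
    """Build a rule that passes when the response body contains required_text."""
    def rule(response):
        haystack = response.body.lower() if ignore_case else response.body
        needle = required_text.lower() if ignore_case else required_text
        return needle in haystack
    return rule


def validate(response, rules):
    """A request is Passed only if every attached rule accepts its response."""
    return "Passed" if all(rule(response) for rule in rules) else "Failed"


if __name__ == "__main__":
    rules = [find_text_rule("results for")]
    print(validate(Response(200, "<h1>Results for widgets</h1>"), rules))
    print(validate(Response(200, "<h1>No matches</h1>"), rules))
```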

In Figure 7-9, Data Sources nodes are also shown. Web tests

The Web Test Runner, shown in Figure 7-9, displays the results of executing a Web test. For each top-level request, the list view displays its validation status, HTTP status, response time, and transferred bytes. Testers can drill into each request to view the details of all dependent requests, or they can select a request and view its details in the tabs on the bottom half of the screen. The Web Browser tab displays the response as rendered in Internet Explorer, and the Details tab displays information regarding any validation rules and exception information. Figure 7-9 shows that the request succeeded in returning the required text response, as defined by the validation rule.

Figure 7-9

The Web Test Runner, which provides convenient access to all the details of a test run

Load tests allow testers to simulate an environment where multiple users are using an application simultaneously. The primary purpose of load testing is to discover performance and scalability bottlenecks in the application prior to deployment. It also enables testers to record baseline performance statistics that they can compare future performance against as the application is modified and extended. Of course, to analyze performance trends over time there must be a repository in which to store all the performance data. VSTS provides robust design tools, an efficient execution engine, and a reliable repository for creating, running, and analyzing load tests.

The creation of a load test starts with the New Load Test Wizard. This wizard steps through the process of defining a load test scenario. A scenario includes definitions of a test mix, load profile, user profile, counter sets, and run settings.


The test mix defines which tests are included in the load and how the load should be distributed among them. Testers can include any automated test type, that is, anything other than manual tests. They can define what percentage of the total load should be executing each test.

The load profile defines how the runner should apply the load to the application. For example, testers can select Constant Load, in which case the runner immediately starts with a full load as defined by the maximum user count setting. This option is useful for testing peak usage and system stability scenarios, but it does not help in examining a system's scalability. For scalability scenarios, testers can select Stepped Load, which allows them to specify a starting load, step duration, step user count, and a maximum load. The runner will increase the load by the step user count after each step interval until the maximum load is reached. With a stepped load, testers can examine how the performance of various parts of the system changes as user load increases over time.
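The two load profiles described above reduce to simple arithmetic on elapsed time. The following Python sketch is an illustration under that reading, not the runner's actual scheduling code; the function and parameter names are hypothetical.

```python
def constant_load(elapsed_s, max_users):
    """Constant Load: the full user count applies from the first second."""
    return max_users


def stepped_load(elapsed_s, initial_users, step_duration_s, step_users, max_users):
    """Stepped Load: add step_users after each completed step, capped at max_users."""
    completed_steps = int(elapsed_s // step_duration_s)
    return min(initial_users + completed_steps * step_users, max_users)


if __name__ == "__main__":
    # Start at 10 users, add 10 every 30 seconds, stop growing at 50.
    for t in (0, 29, 30, 60, 120, 600):
        print(t, stepped_load(t, initial_users=10, step_duration_s=30, step_users=10, max_users=50))
```

With these example values the load reaches its maximum after four steps (120 seconds) and stays there for the rest of the run.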

For Web tests, User Profile allows testers to define the browser and network types the runner should simulate. Again, they can define multiple types and assign a distribution percentage to each. Browser types include various flavors of Internet Explorer, Netscape/Mozilla, Pocket Internet Explorer, and Smartphone profiles. The available network types range from dial-up through T3 connections.
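A distribution percentage, whether over tests in the test mix or over browser and network types, has to be turned into whole simulated users at some point. One way to do that is a largest-remainder allocation, sketched below in Python. The function name `distribute` and the percentages are hypothetical, and the source does not say which rounding scheme the runner uses.

```python
def distribute(total_users, percentages):
    """Split total_users across profiles by integer percentages that sum to 100.

    Uses largest-remainder rounding so the counts always add up to total_users.
    """
    if sum(percentages.values()) != 100:
        raise ValueError("percentages must sum to 100")
    counts = {}
    remainders = {}
    for name, pct in percentages.items():
        counts[name], remainders[name] = divmod(total_users * pct, 100)
    leftover = total_users - sum(counts.values())
    # Hand the leftover users to the profiles with the largest fractional share.
    for name in sorted(remainders, key=remainders.get, reverse=True)[:leftover]:
        counts[name] += 1
    return counts


if __name__ == "__main__":
    mix = {"Internet Explorer": 60, "Netscape/Mozilla": 30, "Pocket Internet Explorer": 10}
    print(distribute(25, mix))
```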

After defining profiles, testers have to decide which information is important to collect during the load test and which machines to collect it from. They can do this by creating Counter Sets, which are collections of Microsoft Windows performance counters, and applying them to specific machines. Advanced users can even define threshold rules and apply them to specific counters. This feature allows the runner to show threshold violations in the test result data. Creating Counter Sets can be a complicated task, as it requires a thorough understanding of the various platform, SQL, .NET, and ASP.NET performance counters. However, VSTS provides some default Counter Sets to simplify the creation of basic tests.
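A threshold rule can be thought of as a pair of limits applied to each sample of a counter. The Python sketch below illustrates that idea with warning and critical levels. The machine names, the sample values, and the limits are invented for the example, and the two-level scheme is an assumption rather than a description of how VSTS stores its rules.

```python
def classify(value, warning, critical):
    """Map one counter sample to ok, warning, or critical."""
    if value >= critical:
        return "critical"
    if value >= warning:
        return "warning"
    return "ok"


def find_violations(samples, rules):
    """Return (machine, counter, value, level) for every sample that breaks a rule.

    samples: iterable of (machine, counter, value)
    rules:   dict mapping counter name to (warning, critical) limits
    """
    found = []
    for machine, counter, value in samples:
        if counter not in rules:
            continue  # no threshold rule applied to this counter
        warning, critical = rules[counter]
        level = classify(value, warning, critical)
        if level != "ok":
            found.append((machine, counter, value, level))
    return found


if __name__ == "__main__":
    rules = {"% Processor Time": (80, 95)}  # hypothetical limits
    samples = [
        ("web01", "% Processor Time", 55.0),
        ("web01", "% Processor Time", 82.5),
        ("sql01", "% Processor Time", 96.0),
    ]
    for violation in find_violations(samples, rules):
        print(violation)
```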

The final step in defining a load test is to specify the test's Run Settings. These settings govern how the runner will execute the test, sample performance counter data, handle errors, and log test results. For example, testers can specify how often counters should be sampled, the total run duration, and the maximum number of errors to log details for.
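The three example settings interact in a straightforward way, which the following Python sketch tries to show: the sample rate and run duration determine when counters are read, and the error limit caps how many errors keep their full details while the total is still counted. `RunSettings` and `ErrorLog` are hypothetical names and the default values are arbitrary.

```python
from dataclasses import dataclass, field


@dataclass
class RunSettings:
    """Hypothetical subset of load test run settings."""
    sample_rate_s: int = 5
    run_duration_s: int = 60
    max_error_details: int = 3

    def sample_times(self):
        """Seconds into the run at which counters are sampled."""
        return list(range(self.sample_rate_s, self.run_duration_s + 1, self.sample_rate_s))


@dataclass
class ErrorLog:
    """Counts every error but keeps details only up to the configured limit."""
    settings: RunSettings
    total: int = 0
    details: list = field(default_factory=list)

    def record(self, message):
        self.total += 1
        if len(self.details) < self.settings.max_error_details:
            self.details.append(message)


if __name__ == "__main__":
    settings = RunSettings(sample_rate_s=5, run_duration_s=20, max_error_details=2)
    log = ErrorLog(settings)
    for i in range(5):
        log.record(f"HTTP 500 on request {i}")
    print(settings.sample_times(), log.total, len(log.details))
```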

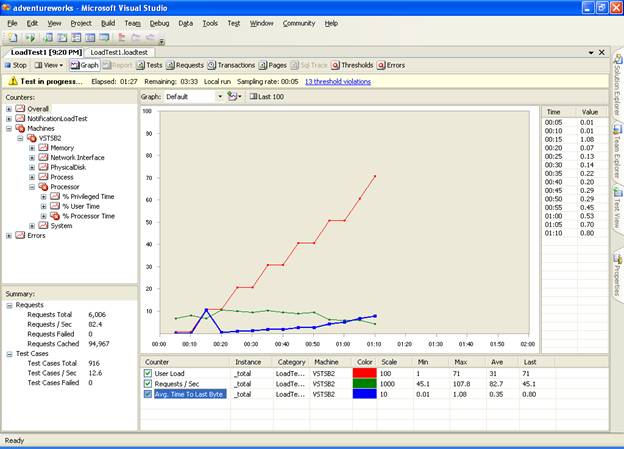

When a load test is run, Visual Studio will display the performance counter data in real time in the Load Test runner. Figure 7-10 shows a load test in progress. Testers can add and remove counters from the graph as the test is executing. The Counters tree view will also display warning and error indicators when a threshold is passed on a particular counter. All the data captured by the runner is then logged to a database and made available through Team Services reports to managers and team members.

Figure 7-10

The load test runner displaying load test results in real time

