Hunger, Poverty, and Economic Development

The persistence of widespread hunger is one of the most appalling features of the modern world. The fact that so many people continue to die each year from famines, and that many millions more go on perishing from persistent deprivation on a regular basis, is a calamity to which the world has, somewhat incredibly, got coolly accustomed. Indeed, the subject often generates either cynicism ('not a lot can be done about it') or complacent irresponsibility ('don't blame me - it is not a problem for which I am answerable').

Jean Dreze and Amartya Sen, Hunger and Public Action

Poverty is the main reason why babies are not vaccinated, why clean water and sanitation are not provided, why curative drugs and other treatments are unavailable and why mothers die in childbirth. It is the underlying cause of reduced life expectancy, handicaps, disability and starvation. Poverty is a major contributor to mental illness, stress, suicide, family disintegration and substance abuse. Every year in the developing world 12.2 million children under 5 years die, most of them from causes which could be prevented for just a few US cents per child. They die largely because of world indifference, but most of all they die because they are poor.

World Health Organization, World Health Report (1995)

At the end of World War II public officials and scientists from all over the world predicted that with advances in modern technology it would be possible by the end of the century to end poverty, famine, and endemic hunger in the world. Freed from colonial domination and assisted by new global institutions such as the United Nations and the World Bank, the impoverished countries of Africa, Asia, and

Today these optimistic projections have been replaced by hopelessness and resignation as 1.2 billion people are estimated to still live on less than $1 per day, and almost 3 billion on less than $2 per day. Estimates of the number of people with insufficient food range from 800 million to well over a billion, virtually one-fifth of the world's population. Children are particularly vulnerable; food aid organizations estimate that 250,000 children per week, almost 1,500 per hour, die from inadequate diets and the diseases that thrive on malnourished bodies. And hunger is not just a problem of the poor countries of the world. Estimates of the number of Americans living in hunger rose from 20 million in 1985 to 31 million Americans who presently live in households that are food insecure (hungry or at risk of hunger).

Common misunderstandings about world hunger should be quickly dispelled. First, world hunger is not the result of insufficient food production. There is enough food in the world to feed 120 percent of the world's population on a vegetarian diet, although probably not enough to feed the world on the diet of the core countries. Even in countries where people are starving, there is either more than enough food for everyone or the capacity to produce it.

Second, famine is not the most common reason for hunger. While famines such as those in recent years in Ethiopia, Sudan, Somalia, and Chad receive the most press coverage, endemic hunger (daily insufficiencies in food) is far more common.

Third, famine itself is rarely caused by food insufficiency. When hundreds of thousands starved to death in

Finally, hunger is not caused by overpopulation. While growing populations may require more food, there is no evidence that the food could not be produced and delivered if people had the means to pay for it. This does not mean population and food availability play no role in world hunger, but that the relationship is far more complex than it appears.

The question, then, is why do people continue to starve to death in the midst of plenty? More important, is it still possible to believe that poverty and hunger can be eliminated? If so, how?

To answer these questions we need to know about the nature and history of food production and to understand the reasons why people are hungry. There is a prevailing view that hunger is inevitable, but that need not be the case. We will examine some possible solutions to world hunger, and how specific countries, some rich and some poor, have tried to ensure that people have adequate food.

The Evolution of Food Production: From the Neolithic to the Neocaloric

Until recently in world history virtually everyone lived on farms, grew their own food, and used whatever surplus they produced to pay tribute or taxes, to sell at local markets, and to keep for seed for the following year. Since the beginning of the Industrial Revolution people have left the land in increasing numbers, converging on cities and living on wages. As late as 1880, half of the

purchase the products of American agriculture (Schusky 1989:101). This shift of the population from farming to other sources of livelihood is intensifying all over the world. Why do people leave the land on which they produce their own food to seek wage employment, which requires that they buy food from others?

To answer this question we need to understand the history of agriculture, why food production has changed, and how economic and agricultural policies have resulted in increased poverty and hunger.

From Gathering and Hunting to the Neolithic

For most of their existence human beings produced food by gathering wild plants (nuts, roots, berries, grains) and hunting large and small game. Generally these people enjoyed a high quality of life. They devoted only about twenty hours per week to work, and archeological studies and research among contemporary gathering and hunting societies indicate that food was relatively plentiful and nutritious. Life span and health standards seem to have been better than those of later agriculturists. Consequently, a major question for anthropologists has been why human populations that lived by gathering and hunting began to plant and cultivate crops. Before 1960 anthropologists assumed that domesticating plants and animals provided more nourishment and food security than gathering and hunting. But when the research in the 1960s of Richard Lee and James Woodburn revealed that the food supply of gatherers and hunters was relatively secure and that the investment of energy in food production was small, speculation turned to the effects of population increase on food production. Mark Cohen (1977) suggested that increases in population densities may have required people to forage over larger areas in search of food, eventually making it more efficient to domesticate and cultivate their own rather than travel large distances in search of game and wild plants. Even then the shift from gathering and hunting to domestication of plants and animals must have been very gradual, emerging first on a large scale in

Slash and burn or swidden agriculture is the simplest way of cultivating crops; although it is highly efficient when practiced correctly, it requires considerable knowledge of local habitats. In swidden agriculture a plot of land is cleared by cutting down the vegetation, spreading it over the area to be used for planting, and then burning it. Seeds are planted and the plants cultivated and then harvested. After one to three years of use, the plot is more or less abandoned and a new area cut, burned, and planted. If there is sufficient land, the original plot lies fallow for ten years or more until bushes and trees reestablish themselves; then it is used again. Of the four major factors in agricultural production (land, water, labor, and energy), swidden agriculture is land-intensive only. It uses only natural rainfall and solar energy and requires about twenty-five hours per week of labor. Tools consist of only an ax or machete and a hoe or digging stick.

Swidden agriculture is still practiced in much of the periphery, but only recently have researchers begun to appreciate its efficiency and sophistication. Burning vegetation, for example, once thought only to add nutrients in the form of ash to the soil, also kills pests, insects, and weeds. When practiced correctly, it is environmentally sound because it recreates the natural habitat. Swidden agriculturists must choose a site with the proper vegetation and desirable drainage and soil qualities. They must cut and spread the brush properly and evenly to maximize the burn and the heat. Cuttings that dry slowly must be cut earlier than those that dry quickly. Unlike in monoculture (the growing of a single crop), swidden plots are planted with a variety of foodstuffs as well as medicinal plants and other vegetable products. Swidden agriculturists must know when to abandon a plot so that grasses do not invade and prevent regeneration of brush and forest. Furthermore, abandoned plots are rarely truly abandoned, being used to grow tree crops or attract animals that are hunted for food.

Just as there is debate as to why gatherers and hunters began to practice agriculture, there is some question as to why swidden agriculturists began to practice the more labor-intensive irrigation or plow agriculture. These techniques do not necessarily produce a greater yield relative to the labor energy used; however, they do allow use of more land more often by reducing or eliminating the fallow period. Land that in swidden agriculture would lie fallow is put into production. Irrigation agriculture also allows continual production on available land, sometimes allowing harvest of two or three crops in a year. Moreover, it permits the use of land that without irrigation could not be used for agriculture. Ester Boserup (1965) suggested that increasing population density required more frequent use of land, eliminating the possibility of allowing land to lie fallow to regain its fertility. Thus plow and irrigation agriculture, while requiring greater amounts of work, do produce more food.

The productivity comes at a cost, however. Of the four agricultural essentials (land, water, labor, and energy), irrigation requires more water, more labor, and more energy than swidden agriculture. In addition, irrigation generally requires a more complex social and political structure, with a highly centralized bureaucracy to direct the construction, maintenance, and oversight of the required canals, dikes, and, in some cases, dams. Furthermore, irrigation can be environmentally destructive, leading to salinization (excess salt buildup) of the soil and silting. For example, it is estimated that 50 percent of the irrigated land in

If we surveyed the world of 2,000 years ago, when virtually all of the food crops now in use were domesticated and in use somewhere, we would find centers of irrigation agriculture in the Andes and

Capitalism and Agriculture

The next great revolution in food production was a consequence of the growing importance of world trade in the sixteenth through eighteenth centuries and the gradual increase in the number of people living in cities and not engaged in food production. The expansion of trade and growth of the nonfarming population had at least four profound consequences for agricultural production.

First, food became a commodity that, like any other commodity, such as silk, swords, or household furniture, could be produced, bought, and sold for profit. Second, the growth of trade and the number of people engaged in nonagricultural production created competition for labor between agricultural and industrial sectors of the economy. Third, the growth of the nonagricultural workforce created greater vulnerability on the part of those who depended on others for food. The availability of food no longer depended solely on a farmer's ability to produce it but also on people's wages, food prices, and an infrastructure required for the delivery, storage, and marketing of food products. Finally, the increasing role of food as a capitalist commodity resulted in the increased intervention of the state in food production. For example, food prices needed to be regulated; if they were too high not only might people starve but industrial wages would need to increase; if they were too low, agricultural producers might not bring their food to market. Import quotas or tariffs needed to be set to maximize food availability on the one hand and protect domestic food producers on the other. New lands needed to be colonized, not only to produce enough food but to keep food production profitable. The state might also regulate agricultural wages or, as in the

As it turned out, the most important change in food production inspired by the transformation of food into a capitalist commodity was the continual reduction of the amount of human energy and labor involved directly in food production and the increase in the amount of nonhuman energy in the form of new technologies, such as tractors, reapers, and water delivery systems. The results of this trend continue to define the nature of agricultural production in most of the world.

Reducing labor demands in agriculture and increasing technology accomplishes a whole range of things that contribute to trade and profit in both the agricultural and industrial sectors. First, substituting technology for human labor and reducing the number of people involved in agriculture makes agriculture more profitable. Labor costs are reduced and agricultural wealth is concentrated in fewer hands. Increasing technology also creates a need for greater capital investments. As a result, those people with access to capital, that is, the more affluent, are able to profit, forcing the less affluent to give up their farms. This further concentrates agricultural wealth. The increase in the need for capital also creates opportunities for investors (banks, multilateral organizations, commodity traders) to enter the agricultural sector, influence its operation by supplying and/or withholding capital, and profit from it.

Second, reducing the number of people in agriculture and concentrating agricultural wealth to ensure profits of those who remain helps keep food prices and consequently industrial wages relatively low. This creates a danger of food monopolies and the potential for a rise in prices. For example, cereal production in the

Third, reducing agricultural labor frees labor to work in industry and creates competition for industrial jobs, which helps keep wages low. That is, the more people who are dependent for their livelihood on jobs, the lower the wages industry needs to pay.

Finally, to keep labor costs down and increase the amount of technology required for the maintenance of food production, the state must subsidize the agricultural sector. In the

In sum, the results for the capitalist economy of the reduction of agricultural labor and the subsequent increase in technology are:

■ A capital-intensive agricultural system, dependent on the use of subsidized energy

■ The exploitation of domestic farm labor and of foreign land and labor to keep food prices low and agricultural and industrial profits high

■ A large labor pool from which industry can draw workers, whose wages are kept down by competition for scarce jobs and the availability of cheap food

It is important to note that until the 1950s the technological intensification of agriculture did not substantially increase yield. That is, though an American corn farmer needed only one hundred hours of labor to produce one hectare (2.47 acres) of corn, the amount of corn produced was no greater than that produced by a Mexican swidden farmer, working ten times as many hours using only a machete and hoe. In other words, while mechanization made the American farm more economical by reducing labor energy and costs, it did not produce more food per hectare (Schusky 1989:115). One question we need to ask is what happens when we export the American agricultural system to developing countries?

The Neocaloric and the Green Revolution

The culmination of the development of capitalist agriculture, a system that is technologically intensive and substitutes nonhuman energy for human energy, was dubbed the neocaloric revolution by Ernest Schusky. For Schusky, the major characteristic of the neocaloric revolution has been the vast increase in nonhuman energy devoted to food production in the form of fertilizers, pesticides, herbicides, and machinery.

David and Marcia Pimentel (1979) provided a unique perspective on the neocaloric. Their idea was to measure the number of kilocalories¹ produced per crop per hectare of land and compare it to the amount of both human and nonhuman energy expended in kilocalories to produce the crop. Their work dramatized both the efficiency of traditional forms of agriculture and the energy use required of modern capitalist agriculture.

For example, to produce a crop of corn a traditional Mexican swidden farmer clears land with an ax and machete to prepare it for burning and uses a hoe to plant and weed. Pimentel and Pimentel (1979:63) figured that crop care takes 143 days and the worker exerts about 4,120 kilocalories per day. Thus, labor for one hectare amounts to 589,160 kilocalories. Energy expended for ax, hoe, and seed is estimated at 53,178 kilocalories, for a total of 642,338 kilocalories. The corn crop produced yields about 6 million kilocalories, for an input-output ratio of 1 to 11; that is, for every kilocalorie expended, eleven are produced, a ratio that is about average for peasant farmers. Nonhuman energy used is minimal, consisting only in the fossil fuel (wood, coal, or oil) used in producing the hoe, ax, and machete.

Now let's examine plow agriculture with the use of an ox. One hour of ox time is equal to four hours of human time, so labor input for one hectare drops to 197,245 kilocalories from 589,160 kilocalories. But the ox requires 495,000 kilocalories to maintain. Moreover, the steel plow requires more energy in its manufacture, so fossil fuel energy used rises to 41,400 kilocalories, with energy for seeds remaining at 36,608, for a nonhuman total of 573,008 kilocalories; adding human labor brings the total input to 770,253 kilocalories. However, the corn yield drops significantly, to half, so the input-output ratio drops to 1 to 4.3. The reason for the drop is likely the lowering of soil fertility. If leaves, compost, or manure is added to the soil, yields might increase, but so would the energy required to gather and spread the fertilizer.
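The two energy budgets above reduce to simple sums, which the following Python sketch reproduces. It is only an illustration built from the figures quoted in the text: the class and field names are hypothetical, and the output figure is back-calculated from the stated input-output ratios (an assumption, since the text gives the swidden yield only as "about 6 million" kilocalories). Note that the 573,008 figure for the ox system is the sum of the ox, plow, and seed entries; adding the 197,245 kilocalories of human labor gives the full input.

```python
from dataclasses import dataclass


@dataclass
class EnergyBudget:
    """Energy inputs for one hectare of corn, in kilocalories (hypothetical helper)."""
    name: str
    human_labor: int      # kcal of human work
    other_inputs: dict    # kcal of nonhuman inputs (tools, seed, draft animal)
    stated_ratio: float   # kcal produced per kcal expended, as stated in the text

    def nonhuman_input(self) -> int:
        return sum(self.other_inputs.values())

    def total_input(self) -> int:
        return self.human_labor + self.nonhuman_input()

    def implied_output(self) -> float:
        # Back-calculated from the stated ratio; an assumption, not a source figure.
        return self.stated_ratio * self.total_input()


swidden = EnergyBudget(
    name="swidden",
    human_labor=143 * 4_120,  # 143 days at 4,120 kcal per day
    other_inputs={"ax_hoe_and_seed": 53_178},
    stated_ratio=11.0,
)

ox_plow = EnergyBudget(
    name="ox plow",
    human_labor=197_245,
    other_inputs={"ox_upkeep": 495_000, "steel_plow": 41_400, "seed": 36_608},
    stated_ratio=4.3,
)

for budget in (swidden, ox_plow):
    print(
        f"{budget.name}: total input {budget.total_input():,} kcal, "
        f"implied output {budget.implied_output():,.0f} kcal"
    )
```

Run as written, the total inputs come to 642,338 kilocalories for the swidden plot and 770,253 kilocalories for the ox-plowed plot.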

Finally, if we consider a corn farmer in the

The intensification of the use of technology in agriculture is largely the result of what has been called the green revolution. The green revolution began with research conducted in

¹ A calorie, or gram-calorie, is the amount of heat needed to raise the temperature of 1 gram of water by 1°C (to 15°C). The kilocalorie (kcal), or kilogram-calorie, is 1,000 gram-calories, or the amount of heat needed to raise 1 kg of water by 1°C (to 15°C). It can also be conceptualized as follows: 1 horsepower-hour of energy is the same as 641 kilocalories; 1 gallon of gasoline contains 31,000 kilocalories; if used in a mechanical engine that works at 20 percent efficiency, 1 gallon of gasoline is equal to 6,200 kilocalories of work, or one horse working at capacity for a ten-hour day, or a person working eight hours per day, five days per week, for 2.5 weeks.

wheat suitable for Mexican agriculture. Soon the research produced dramatic results all over the world as farmers began using specially produced strains of crops such as wheat, corn, and rice called high-yielding varieties (HYVs). The productivity of the new seeds lay largely in their increased capacity for using fertilizer and water. Whereas increased use of fertilizer and water did not increase the yields of old varieties (in fact, it might harm them), it vastly increased the yields of the new varieties. Consequently, more and more farmers around the world adopted them. Use of these new varieties was encouraged by the petrochemical industry and fertilizer production plants, which sought to expand their markets. Thus, research efforts to develop HYV plants were expanded in

But the green revolution soon ran into some problems. First, the new plants required greater inputs of fertilizer and water. Because of the added energy costs farmers often skimped on fertilizer and water use, resulting in yields similar to those prior to the adoption of the new crops. Consequently, farmers returned to their previous crops and methods. Second, the OPEC oil embargo in 1973 raised oil prices, and since fertilizers, irrigation, and other tools of the green revolution were dependent on oil, costs rose even higher. Some people began to refer to HYVs as energy-intensive varieties (EIVs).

The expense was increased further by the amount of water needed for fertilizers. Early adaptations of the new technology tended to be in areas rich in water sources; in fact, most of the early research on HYV was done in areas where irrigation was available. When the techniques were applied to areas without water resources, the results were not nearly as dramatic. It also became apparent that the energy required for irrigation was as great in some cases as the energy required for fertilizer.

In addition, the green revolution requires much greater use of chemical pesticides. If farmers plant only a single crop or single variety of a crop it is more susceptible to the rapid spread of disease, which, because of the added expense, could create catastrophic financial losses. Consequently, pesticide use to control disease becomes crucial. Furthermore, since the crop is subject to threats from insect and animal pests at all stages of production (in the fields, in storage, in transportation, and in processing), pesticide costs rise even further.

Finally, the new fertilizers and irrigation favor the growth of weeds; therefore, herbicides must be applied, further increasing energy expenditure.

One result of the change from subsistence farming, in which the major investment was land, to a form of agriculture that is highly land-, water-, and energy-intensive is, as Ernest Schusky (1989:133) noted, to put the small farmer at a major disadvantage because of the difficulty in raising the capital to finance the modern technological complex. The result in the

It is in livestock production, however, that the neocaloric revolution really comes into focus. One innovation of the past hundred years in beef production was feeding grain to cattle. By 1975 the

TABLE 6.1 Changes in Number of

| Size of Farm (Acres) | 1992 | 1969 | 1950 | % Change |
|---|---|---|---|---|
| 1-59 | 1,310,000 | 1,944,224 | 4,606,497 | -172% |
| 260-499 | 255,000 | 419,421 | 478,170 | -47% |
| 500-999 | 186,000 | 215,659 | 182,297 | +.08% |
| 1,000+ | 173,000 | 150,946 | 121,473 | +58% |
| Total | 1,925,000 | 2,730,250 | 5,388,437 | -166% |

Adapted from Eric Ross, Beyond the Myths of Culture: Essays in Cultural Materialism.

percent from grain. If we calculate the energy that goes into grain production and the operation of feedlots, the productive ratio is as low as 1 kilocalorie produced for every 78 kilocalories expended.

If we factor in processing, packaging, and delivery of food, the energy expenditure is even higher. For example, the aluminum tray on which a frozen TV dinner is served requires more calories to produce than the food on the tray. David Pimentel estimated that the American grain farmer using modern machinery, fertilizer, and pesticides uses 8 calories of energy for every 1 calorie produced. Transportation, storage, and processing consume another 8 fossil calories to produce 1 calorie (see Schusky 1989:102).

As Schusky said, in a world short on energy such production makes no sense, but in a world of cheap energy, particularly oil, especially if it is subsidized by the nation-state, it is highly profitable. The real problem comes when agricultural production that substitutes fossil fuel energy for human energy is exported to developing countries. To begin with, there is simply not enough fossil energy to maintain this type of production for any length of time. One research project estimated that if the rest of the world used energy at the rate it is used in the

Furthermore, in countries with a large rural population the substitution of energy-intensive agriculture for labor-intensive agriculture will throw thousands off the land or out of work, resulting in more people fleeing to the cities in search of employment. And since modern agriculture is capital-intensive, the only farmers who can afford to remain will be those who are relatively wealthy, consequently leading to increasing income gaps in rural as well as urban areas. Where the green revolution has been successful, small farmers have been driven off the land to become day laborers or have fled to cities in search of jobs, as commercial investors bought up agricultural land. Or wealthier farmers have bought up their neighbors' land, since only they had the capital to invest in fertilizers and irrigation.

Then there is the potential for green revolution II, the application of genetic engineering to agricultural production. Genetically engineered crops are controversial, largely because of claims that they have not been rigorously tested, and that we do not know yet what effects they may have on the environment or on the people who eat them. Clearly, claims by agribusiness concerns, such as Monsanto Corporation, that such crops will help us feed the hungry are, as we shall see, disingenuous at best. Furthermore, in some cases, genetically engineered crops simply try to correct the damage done by capitalist agriculture. For example, there is the much touted 'golden rice,' a genetically engineered variety with vitamin A that is a cure for blindness that afflicts some 300,000 people a year. Yet, as Vandana Shiva (2000) notes, nature ordinarily provides abundant and diverse sources of vitamin A; rice that is not polished provides vitamin A, as do herbs such as bathua, amaranth, and mustard leaves, which would grow in wheat fields if they were not sprayed with herbicides.

This is not to say there have not been some successes in modernizing agriculture without creating greater social and economic inequality and depriving people of access to food. Murray Leaf (1984) described how one Indian village succeeded in increasing access to water, using chemical fertilizers, and increasing production while maintaining landholdings and building farm cooperatives and credit unions. In fact, some countries, including

The Politics of Hunger

The obvious consequence of the reduction of labor needed for food production and the concentration of food production in fewer hands is that the world's population is more dependent on wage labor for access to food. People are consequently more vulnerable to hunger if opportunities for employment decrease, if wages fall, or if food prices rise; they can starve even in the midst of food availability. This is not to say that lack of food is never a factor in hunger, but it is rare for people to have economic resources and not be able to acquire food.

The role of food in a capitalist economy has other important consequences. For example, food production is not necessarily determined by the global need for food; it is determined by the market for food, that is, how many people have the means to pay for it. That is why world food production is rarely at its maximum and why it is difficult to estimate how much food could be produced if the market demand existed. The problem is that not only are there not enough people with sufficient income to pay for all the food that could be produced, but so-called overproduction would result in lower prices and decreased profitability. For that reason, in many countries food production is discouraged. Furthermore, land that could be used to grow food crops is generally dedicated to nonfood crops (e.g., tobacco, cotton, sisal) or marginally nutritious crops (e.g., sugar, coffee, tea) for which there is a market. Finally, the kind of food that is produced is determined by the demands of those who have the money to pay for it. For example, meat is notoriously inefficient as a food source, but as long as people in wealthy countries demand it, it will be produced in spite of the fact that the grain, land, and water required to produce it would feed far more people if devoted to vegetable crops. Thus people in

In sum, we need to understand the economic, political, and social relations that connect people to food. Economist Amartya Sen (1990:374) suggested that people command food through entitlements, that is their socially defined rights to food resources. Entitlement might consist of the inheritance or purchase of land on which to grow food, employment to obtain wages with which to buy food, sociopolitical rights such as the religious or moral obligation of some to see that others have food, or state-run welfare or social security programs that guarantee adequate food to all. Not all of these kinds of entitlements exist in all societies, but some exist in all. From this perspective, hunger is a failure of entitlement. The failure of entitlement may come from land dispossession, unemployment, high food prices, or lack or collapse of state-run food security programs, but the results are that people may starve to death in the midst of a food surplus.

One of the images that emerged from the famine in

Viewing hunger as a failure of entitlements also corrects ideological biases in the culture of capitalism: the tendency to overemphasize fast growth and production, the neglect of the problem of distribution, and hostility to government intervention in food distribution. Thus, rather than seeing hunger or famine as a failure of production (which it seems not to be), we can focus on a failure of distribution (see Vaughn 1987:158). Furthermore, we are able to appreciate the range of possible solutions to hunger. The goal is simply to establish, reestablish, or protect entitlements, the legitimate claim to food. Seeing hunger as a failure of entitlement also focuses on the kinds of public actions that are possible. For example, access to education and health care are seen in most core countries as basic entitlements that should be supplied by the state, not by a person's ability to pay. And most core countries see basic nutrition as a state-guaranteed entitlement, in spite of recent attempts in countries such as the

To understand the range of solutions to hunger, we also need to distinguish between the more publicized instances of famine, generally caused by war, government miscalculations, civil conflict, or climatic disruptions; food poverty, in which a particular household cannot obtain sufficient food to meet the dietary requirements of its members; and food deprivation, in which individuals within the household do not get adequate dietary intake (Sen 1990:374).

To illustrate, let's take a look at two situations of hunger, first the more publicized instance of famine and then the less widely publicized endemic hunger.

The Anatomy of Famine

Famines have long been a part of history. Administrators in

Many historic famines were caused by crop failures, climatic disruptions, and war. Archaeologists have speculated that widespread climatic changes reduced the yields of Mayan agriculturists and resulted in the destruction of Mayan civilization. Yet it is clear that even historically famines resulted from entitlement failures rather than insufficient food (Newman 1990). Even during the Irish potato famine of 1846-1847, when one-eighth of the population starved to death, shiploads of food, often protected from starving Irish by armed guards, sailed down the Shannon River, bound for English ports and consumers who could pay for it.

We can better appreciate the dynamics of famine and the importance of entitlements by examining a famine crisis in the African country of

The famine began with a drought. The lack of rain was first noticed after Christmas 1948 and drew serious attention when in January, normally the wettest month of the year, there was no rain at all; it remained dry until some rain fell in March. In some areas the first and second plantings of maize, the main food crop, failed completely, and wild pigs, baboons, and hippos devastated the remaining crops. Old people who remembered the last famine in 1922 said there were signs of a major crisis, and within a few months it was apparent that people were starving. The British colonial government began to organize relief efforts, sending agricultural representatives into the countryside to organize the planting of root crops, replanting of crops that failed, and opening food distribution camps. By the time the rains came in October, there were reports of real malnutrition and of hundreds of children and adults starving to death. Many died, ironically, at the beginning of 1950 as the maize crop was being harvested, many from eating the crop before it ripened (Vaughn 1987:48).

According to Vaughn, women suffered most from the famine. The question is, what happened to women's food entitlements that resulted in their being the most severely affected? To answer this question we need to know a little about food production, the role of kinship in

The predominant form of kinship structure was matrilineal; that is, people traced relations through the female line. The most important kin tie was between brother and sister, and the basic social unit was a group of sisters headed by a brother. Under this system rights to land were passed through women, men gaining rights to land only through marriage. Traditionally, women would work their land with their husbands, who lived with their wives and children. In this system a woman's entitlement to food could come from various sources: her control of land and the food grown on it, sharing of food with matrilineal kin, wages she might earn selling beer or liquor or working occasionally for African farmers, or wages her husband or children might earn. In addition, during the famine the government established an emergency food distribution system from which women could theoretically obtain food. What happened to those entitlements when the crops failed?

Changes in the agricultural economy and the introduction of wage labor under European colonial rule had already undermined women's entitlements (Boserup 1970). The British colonial government was under pressure, as it was in other parts of

When the famine became evident to the British authorities, they took measures that further reduced women's entitlements. First, partly to conserve grain and partly because of a fear of social disorder, they forbade the making and sale of beer, removing a major source of income for women. Next, they assumed the family unit consisted of a husband, wife, and children, presided over by the husband, and, consequently, refused to distribute food relief to married women, assuming they would obtain food from their husbands. However, many husbands were traveling to seek work elsewhere to buy food, and they might or might not send food or money home. Next, the government food distributions gave preference to those, mostly urban people, who were employed in the formal economy by Europeans, Indians, or the government, neglecting those in the rural economy such as part-time women laborers. Furthermore, Europeans and Indians, who had ample food supplies during the famine, often shared with their workers who, again, were mostly men. Many of these men, of course, were conscientious husbands, fathers, brothers, and uncles and shared the food they received. But some did not, either keeping it for themselves or selling it at high prices on the black market.

To make matters worse, as the famine wore on, social units began to fragment. This is a common feature of famines. Raymond Firth (1959) reported that during the early phases of a famine on the

In sum, then, the most vulnerable portions of the population were women without male support for whom the colonial authorities took no responsibility, married women whose husbands had abandoned them, and wives of long-term migrants who did not remit money (Vaughn 1987:147). In addition, of course, the children of these women suffered and died disproportionately.

The lesson of the

The Anatomy of Endemic Hunger

While famine as a cause of hunger has decreased, endemic hunger caused by poverty has increased. One problem with endemic hunger is that it goes largely unnoticed, both by the press, which prefers to cover the more spectacular instances of famine, and by governments whose economic and social policies might be held responsible for the hunger. Yet endemic hunger is a far more serious problem.

Governments often refuse to recognize hunger because doing so marks an admission of failure to provide adequately for their citizens. And hunger sometimes goes unrecognized because it is assumed to be a medical problem, rather than a problem of food entitlements. Doing anthropological fieldwork in Mali, Katherine A. Dettwyler (1994:71-73) encountered a little girl with swelling of the face, hands, feet, and abdomen, classic symptoms of kwashiorkor, a disease that results from a diet that is sufficient in calories but deficient in protein. The girl's mother said she had funu bana, 'swelling sickness,' and asked Dettwyler for medicine for her daughter, failing to recognize that what her daughter needed was extra meat or milk. When Dettwyler suggested just that, the mother responded by saying the little girl wasn't hungry and that she needed medicine. For the mother it continued to be a medical problem, not a food problem.

The denial of hunger prevents the development of programs to alleviate it. More insidiously, when hunger goes unrecognized or unacknowledged, problems that are a consequence of hunger, such as poor work performance, poor academic achievement, and stunted growth, are attributed to other factors, such as lack of motivation or cultural background.

To illustrate how social, economic, and cultural factors converge to produce hunger in the midst of plenty, as well as the complexity of identifying hunger and even admitting it exists, let's examine the problem of starvation in

Sugar has dominated the economy of northeastern

mid-twentieth century. A plantation-owning elite ruled over a large peasant population that cut sugarcane for wages, using unused land to grow their own food crops. A few others worked for wages in the sugar mills and refineries. However, as the sugar industry expanded and as technological improvements were made in the 1950s and 1960s because of government policies to expand production for export, many peasants were evicted from the land, fleeing to the cities in search of jobs. Poverty was widespread, and even people with jobs did not make enough to meet family expenses. For example, in northeastern

To make matters worse, in the mid-1980s

In 1982 Nancy Scheper-Hughes returned to a shantytown, Alto do Cruzeiro, located in the city of Bom Jesus da Mata, where she had worked as a Peace Corps volunteer in 1965. The shantytown consisted of five thousand rural workers, one-third of whom lived in straw huts. The vast majority of residents had no electricity, and water was collected by the women twice a day from a single spigot located in the center of the community. Most men and boys worked as sugarcane cutters during the harvest season. A few men and some women worked in the local slaughterhouse. Other than this part-time work, there was little employment. Many women found jobs as domestics among the middle- and upper-class families or sold what they could in the market. Many women and children worked in the cane fields as unregistered workers at less than minimum pay.

The economics of hunger in the shantytowns is simple: there is not enough money to buy food. Because of the economic situation in

With jobs scarce, available wages inadequate, land unavailable to grow food, and government assistance inadequate or nonexistent, residents of the shantytown scramble as best they can to acquire food. In fact, according to Scheper-Hughes, slow starvation is a primary motivating force in the social life of the shantytowns. People eat every day, but the diet is so meager that they are left hungry. Women beg while their children wait for food, and children's growth is stunted by hunger and malnutrition. Children of one and two years of age cannot sit unaided and cannot or do not speak. Breastbones and ribs protrude from taut skin, flesh hangs in folds from arms, legs, and buttocks, and sunken eyes stare vacantly. The plight of the worker and his or her family is, according to Scheper-Hughes (1992:146), 'the slow starvation of a population trapped in a veritable concentration camp for more than thirty million people.'

Perhaps the most tragic result of hunger is the death of infants. Approximately 1 million children younger than five years of age die each year in

Scheper-Hughes found that reliable statistics on infant mortality in the shantytowns were difficult to acquire. She finally obtained statistics for selected years in the 1970s, all of which indicated an infant mortality rate of 36 to 41 percent. From information she collected by combing public records, Scheper-Hughes found the following infant mortality rates for Bom Jesus da Mata: 49.3 percent in 1965; 40.9 percent in 1977; 17.4 percent in 1985; and 21.1 percent in 1987.

While the infant mortality rate in northeastern

News reports and government studies about poverty in

Amid the sea of froth and brine that carried away the infants and babies of the Alto do Cruzeiro, what kind of professional prudery it was that 'failed to see' what every mother of the Alto do Cruzeiro knew without ever being told. 'They die,' said one woman going to the heart of the matter, 'because their bodies turn to water.'

One way to mask the evidence of starvation is to turn it into a medical problem; the medicalization of hunger is exactly what Scheper-Hughes discovered in

A homeless mother and child trying to survive on the streets of a Brazilian city.

One of the things anthropology teaches about the human capacity for culture is that people place experiences in systems of meaning that allow them to make sense of their experiences. Illness and disease are no exception; different people define differently what constitutes illness as opposed to something that is not illness. Moreover, people will define what constitutes a medical problem (e.g., infant diarrhea) as opposed to, say, a social problem (e.g., hunger). In northeastern

One of the major traditional illness syndromes in

symptoms, they say they are sick with nervos. People believe nervos is the result of an innately weak and nervous body. In years past when people thought they suffered an attack of nervos, they drew on their traditional store of herbal medicines and the practical knowledge of elderly women in the household. Today they seek medical help from one of the local clinics; now nervos, a traditional disease category, is thought to be treatable by modern medicine.

But there was another more insidious change in how people defined their physical condition: symptoms associated with hunger began to be talked about as nervos. That is, hunger and nervos became synonymous. This was not always the case. Starvation and famine have long been a feature of life in northeastern

Nancy Scheper-Hughes (1992:174) said a hungry body represents a potent critique of the nation-state in which it exists, but a sick body implicates no one and conveys no blame, guilt, or responsibility. Sickness just happens. The sick person and the sick social system are let off the hook. A population suffering from starvation, however, represents a threat to the state that requires economic and social solutions: social programs, jobs, or land redistribution. Nervos, a sickness, is a personal and 'psychological' problem that requires only medical intervention and faults no one, except perhaps the person suffering from it. Sickness requires little state action, other than supplying the occasional prescription of tranquilizers, vitamins, or sleeping pills. Instead of the responsible use of state power to relieve starvation and hunger, there is a misuse of medical knowledge to deny that there is a social problem at all.

Scheper-Hughes (1992:207) related the story of a young single mother who brought her nine-month-old child to the clinic, explaining that the baby suffered from nervoso infantil. She complained that the small, listless, and anemic child was irritable and fussy and disturbed the sleep of family members, especially the grandmother, who was the family's economic mainstay. Herbal teas did not work, and the grandmother threatened to throw them out if the child did not sleep. The doctor refused to give her sleeping pills for the child and instead wrote a prescription for vitamins. The doctor failed to acknowledge the mother's real distress, as well as the child's state of malnutrition, and the vitamins served virtually no purpose other than to redefine the infant's condition from starvation to sickness or malnutrition.

On other occasions children will be brought to doctors with severe diarrhea, a classic symptom of starvation. Simple rehydration therapy, feeding the child special fluids, will usually cure the diarrhea for a time. But the child is still returned to an environment where, with lack of food, the problem is likely to recur until, after being 'saved' perhaps a dozen times, the child finally dies of hunger.

The question, said Scheper-Hughes, is how do people come to see themselves as primarily nervous, and only secondarily hungry? How do they come to see themselves as weak, rather than exploited? How do overwork and exploitation come to be redefined as a sickness for which the appropriate cure is a tonic, vitamin A, a sugar injection? Why do chronically hungry people 'eat' medicines and go without food?

One reason is that since hungry people truly suffer from headaches, tremors, weakness, irritability, and other symptoms of nervous hunger, they look to doctors, healers, political leaders, and pharmacologists to 'cure' them. They look for strong-acting medicines, so they line up at clinics and drugstores until they get them. One cannot, said Scheper-Hughes, underestimate the attractiveness of drugs to people who cannot read warning labels and who have a long tradition of 'magical medicines.'

Furthermore, she said, health is a political symbol subject to manipulation. Slogans such as 'health for all by the year 2000,' or 'community health,' filter down to poor, exploited communities where they serve as a cover for neglect and violence. There is power and domination to be obtained by defining a population as sick or nervous and in need of the power of politicians and doctors:

The medicalization of hunger and childhood malnutrition in the clinics, pharmacies, and political chambers of Bom Jesus da Mata represents a macabre performance of distorted institutional and political relations. Gradually the people of Bom Jesus da Mata have come to believe that they desperately need what is readily given to them, and they have forgotten that what they need most is what is cleverly denied. (Scheper-Hughes 1992: 169-170)

Conspiracy by medical workers is not necessary to effect this transformation. Doctors and clinic workers themselves accept the magical efficacy of cures; either that or they are demoralized to the extent that they prescribe drugs as the only solution they have to ills they are ill-prepared to solve but called on to treat. As one doctor (cited in Scheper-Hughes 1992:204) said:

They come in with headaches, no appetite, tiredness, and they hurt all over. They present a whole body in pain or in crisis, with an ailment that attacks them everywhere! That's impossible! How am I to treat that? I'm a surgeon, not a magician! They say they are weak, that they are nervous. They say their head pounds, their heart is racing in their chest, their legs are shaking. It's a litany of complaints from head to toe. Yes, they all have worms, they all have amoebas, they all have parasites. But parasites can't explain everything. How am I supposed to make a diagnosis?

Solutions to Poverty and Hunger

What solutions are there to the problem of world hunger and the poverty that ultimately causes it? Is it best to focus on economic development or, as some call it, growth-mediated security systems, assuming so-called market mechanisms will improve people's lives? Or are famine and endemic hunger best addressed by public support systems in the form of state-financed food and nutrition programs, state-financed employment, or cash distribution programs? The answers to these questions are not easy. Some argue, for example, that state-run programs take money needed for economic development and divert it to programs that may in the short run alleviate poverty and hunger but in the long run will simply aggravate the problem by undercutting the growth of private enterprise. This is the position generally taken by multilateral organizations, such as the World Bank and IMF, which insist on limitations or cutbacks in state spending as conditions for loans or structural adjustment programs to assist countries in debt. Others respond that state-run anti-poverty programs represent an investment in the human capital necessary for economic development, that a population that is hungry, undernourished, and subject to disease is less productive. To answer these questions we'll first examine the role of economic development in reducing poverty and hunger, then examine the role of foreign aid, and, finally, examine the potential of what are called 'micro-credit' programs.

Economic Development

The concept of 'economic development' is generally traced to U.S. President Harry S. Truman's inaugural address before Congress in 1949 in which he referred to the conditions in 'poorer' nations, and described them as 'underdeveloped areas.' At that point multilateral institutions, such as the World Bank and the IMF, were mobilized to further development goals, often recommending massive economic and social changes, and a total reorganization of societies deemed to be 'undeveloped.' For example, the 1949 World Bank report sent back from

piecemeal and sporadic efforts are apt to make little impression on the general picture. Only through a generalized attack throughout the whole economy on education, health, housing, food, and productivity can the vicious circle of poverty, ignorance, ill health, and low productivity be decisively broken (IBRD 1950: xv)

Proponents of economic development point to successes in raising life expectancy rates worldwide, lowering infant mortality rates, and raising literacy rates. They can point to the development of successful national economies in

Critics, however, paint a different picture. They argue that the goal of economic development to raise the living standards of people in the periphery, fifty years after its inception, has largely failed. A higher percentage of the world's population, they say, is hungry today than in 1950. Studies by the very institutions at the forefront of development, such as the World Bank and IMF, conclude that the goals of development have not been met. While poverty rates have declined somewhat in East Asia (from 26.6 percent in 1987 to 15.32 percent in 1999), and the

people living on less than $2 a day is added, those living in poverty number 2.8 billion people.' Furthermore, the projects that were to promote 'development' have notably decreased people's quality of life. Hundreds of millions of people have been displaced from their communities and homes or thrown off their land by World Bank hydroelectric and agricultural mega-projects. Instead of lifting the standard of living of peripheral countries, there has been a doubling of the degree of economic inequality between rich and poor countries in the past forty years. As one study concludes:

...there is no region in the world that the [World] Bank or [International Monetary] Fund can point to as having succeeded through adopting the policies that they promote, or in many cases impose, in borrowing countries (Weisbrot et al. 2000:3)

The question then is, why, up to this point, has the economic development initiative largely failed?

There are three features of the economic development program that we need to examine. The first is the tendency to define the goals of development and an acceptable standard of living in the very limited terms of income and gross national product (GNP). Income and the level of production have become the measures of the worth of a society.

Second, economic development embodies an ideology that assumes that the culture and way of life of the core is universally desirable and should be exported (or imposed, if necessary) on the rest of the people of the world. As Wolfgang Sachs (1999:5) notes, 'development meant nothing less than projecting the American model of society on the rest of the world.'

Third, the concept of development greatly increased the power of core governments over the emerging nation-states of the periphery. If development was to be the goal, then 'undeveloped' or 'underdeveloped' countries must look to the expertise of the core for financial aid, technological aid, and political help. Furthermore, by embracing development as a goal, leaders of peripheral states gain military assistance and support that permit many of them to assume dictatorial powers over the rest of the population.

In his book, Seeing Like a State: How Certain Schemes to Improve the Human Condition Have Failed (1998), James C. Scott examines a series of failed development projects. Among these, says Scott, are the design and building of cities such as

First, all these projects engaged in a form of economic reductionism; social reality was reduced to almost solely economic elements, ignoring institutions and behaviors essential to the maintenance of societies and environments. Reductionism of this sort is attractive to nation-states because it makes populations and environments more 'legible,' and, consequently, amenable to state manipulation and control. The guiding parable for understanding this reductive tendency is, for Scott, that of the 'scientifically' managed forest.

1 The figures of $1.00 and $2.00 a day used by the World Bank, the United Nations, and other international agencies to measure poverty may also be misleading. In the

Scientific forestry emerged in

The language of capitalism, says Scott, constantly betrays its tendency to reduce the world to only those items that are economically productive. Nature, for example, becomes 'natural resources,' identifying only those things that are commodifiable. Plants that are valued become 'crops'; those that compete with them are stigmatized as 'weeds.' Insects that ingest crops are called 'pests.' Valuable trees are 'timber,' while species that compete with them are 'trash' trees or 'underbrush.' Valued animals are 'game,' or 'livestock,' while competitors are 'predators,' or 'varmints' (Scott 1998:13). In the language of economic development the focus is on GNP, income, employment rates, kilowatts of electricity produced, miles of roads, labor units, productive acreage, and so on. Absent in this language are items that are not easily measured or that are noneconomic (e.g., quality of social relations, environmental aesthetics, etc.).

The second ingredient in failed projects, says Scott, is what he refers to as 'high modern ideology.' This ideology is characterized by a supreme (or, as Scott refers to it, a 'muscle-bound') version of self-confidence in scientific and technical progress in satisfying human needs, mastering the environment, and designing the social order to realize these objectives. This ideology emerged as a by-product of unprecedented progress in the core that was attributed to science and technology. High modern ideology does not correspond to political ideologies and can be found equally on the left and the right. Generally, however, it entails using state power to bring about huge, utopian changes to people's lives.

The idea of economic development is a product of high modern ideology. It represents an uncritical acceptance of the idea of scientific and technological progress, and an unrestrained faith in the wisdom of core economic, scientific, and technological principles. The green revolution and the attempt to widely apply the technology of genetic engineering discussed earlier are but two other products of high modern ideology.

Scott is careful not to reject outright the application of high modern ideology. In some cases it can and has led to significant improvements in people's lives. Thus economic reductionism and high modern ideology are not, in themselves, sufficient to contribute to a failed project. For failure to occur there is a third ingredient: an authoritarian state. When high modern ideology and the nation-state's tendency to reduce the world to legible, manipulable units are combined with an authoritarian state willing and able to use the full weight of its coercive power to bring these high-modernist designs into being, along with a civil society that is unable to resist its manipulations, the stage is set for a development disaster. As Scott says,

the legibility of a society provides the capacity for large-scale engineering, high-modernist ideology provides the desire, the authoritarian state provides the determination to act on that desire, and an incapacitated civil society provides the leveled social terrain on which to build (1998:5)

To illustrate how the goals of economic development and modernization can lead to disruption, Scott describes the ujamaa village campaign in

Clearly, the village scheme emerged from a desire of the Tanzanian state to make its population more legible and manipulable. At the beginning of the development scheme there were some 11 to 12 million rural Tanzanians living in scattered settlements, growing crops and herding animals, using techniques developed over generations. As the natural forest contained elements to sustain it, so these settlements sustained themselves with local knowledge and practice built up over the years. From the viewpoint of the state, however, these populations were economically unproductive, disordered, and, somehow, primitive. The project, which succeeded in moving over 13 million people into 7,684 villages (Scott 1998:245), was to transform the citizenry into producers of exportable crops, strung out along paved roads, where state services could be easily delivered, and where the population could be easily monitored. Furthermore, the success of the villagization program could be easily tracked by counting the number of people moved, the number of villages built, and so on.

Clearly, the project was driven by a high modern ideology imported from the West that assumed that 'modern' settlement patterns, agricultural techniques, and modes of communication were clearly superior to those practiced by the indigenous population. In fact, notes Scott (1998:241), these premises were holdovers from the colonial era. Project designers and supporters assumed that African cultivators and pastoralists were backward, unscientific, and inefficient. They assumed that only through the supervision or, if necessary, coercion by specialists in scientific agriculture could they contribute to a modern

Finally, as with most development schemes, there had to be an authoritarian state to impose the program and to suppress resistance to it. From the beginning, Tanzanians were skeptical about villagization. While they had knowledge of the areas in which they lived, the new, selected sites were chosen without consideration of water or fuel resources and, in fact, threatened to exceed the carrying capacity of the land. They feared that concentrating people in villages would result in overcrowding for people and domestic animals. To meet this resistance the state moved its machinery into place to force compliance. It was, said officials, for the people's own good. 'We cannot tolerate,' said Tanzanian president Julius Nyerere, 'seeing our people live a "life of death."' All of this was in keeping with World Bank reports, such as one in 1964 that said:

How to overcome the destructive conservatism of the people and generate the drastic agrarian reform which must be effected if the country is to survive is one of the most difficult problems political leaders of

Given the situation, violence was inevitable. The police and militia were given authority to move people, first threatening that they would not be eligible for famine relief if they did not move, later forcing them to tear down and load houses and belongings onto trucks. In some cases houses were burned or run over with trucks. When people realized that resistance was futile, they saved what they could move and fled the new villages at the first opportunity (Scott 1998:235-236).

As might be expected, the results of villagization were disastrous. Huge imports of food were necessary from 1973 to 1975 because of the immediate decline of agricultural production, the cost of which would have bought a cow for every Tanzanian family. Some 60 percent of the new villages were built on semi-arid land that required long walks to reach farm plots. Peasants were moved from fertile farm lands to poor lands or land on which the soil was unsuitable for the crops the government demanded farmers grow. Villages were located at a distance from crops that left the crops open to theft and pests. The concentration of people and livestock resulted in cholera and livestock epidemics, while herding cattle in concentrated areas devastated both rangeland and livelihoods (Scott 1998:247).

Instead of achieving legitimacy, the villagization campaign created an alienated and uncooperative peasantry for which

Of course, the scheme was not totally Tanzanian. It resembled hundreds of colonial schemes, built on the supposed promise of modern science and technology, as well as projects still supported by the World Bank, the United States Agency for International Development (USAID), and other development agencies. That it was a disaster should come, in retrospect, as no surprise, in spite of the conviction of virtually everyone involved that it would vastly improve the life of the subjects. More disturbing yet is that the Tanzanian government, at the urging of the World Bank and the

Based on his examination of failed projects, Scott (1998:348) concludes that the major fault is that the project planners thought that they were smarter than they really were and that they regarded the subjects of their plans as far more stupid and incompetent than they really were. By trying to impose a vision of modernization and progress built largely on economic criteria and a vision of life that emerged in the West, high modern ideologues fostered the social and cultural equivalent of unsustainable, impoverished, monocropped, 'scientifically managed' forests, environments devoid of the social stuff that enables people in communities to form cooperative units and work together to achieve common goals.

Yet the same ingredients for failure, namely economic reductionism, high modern ideology, and an authoritarian state combined with a suppressed civil society, are evident (and yet unexamined) in countless development efforts. Another of these involves the case of

Foreign Aid: The Case of

The ideologues of high modern ideology received a wonderful opportunity with the breakup of the

countries and international

financial agencies, the ex-communist economy was to be transformed into a

market economy modeled after those in the West. By the end of 1992 the major industrial countries

along with international financial institutions contributed some $129 billion in debt relief, loans, and

export credits to the former communist bloc countries (Wedel 1998a: 18). The

IMF alone contributed another $22.6 billion bailout to

The plan to transform

In 1998 an estimated one out of every five people in the transition countries of Europe and

Why, in spite of billions of dollars of foreign aid and the infusion of expertise from the West, have these conditions developed? There are probably no simple answers. Among the factors contributing to

The case of foreign aid to

As in most failed projects, foreign aid to

duction characteristic of Soviet socialism. Aid was to be used to privatize these institutions in the belief that this particular reform would result in the restructuring of

The second ingredient in the story is a high modern ideology. This was supplied, in this case, by Western economic experts who were paid by core governments and agencies to 'advise' their counterparts in

The third ingredient was an ability by those involved in loan schemes to bypass democratic institutions and rely for reforms on the authority of the Russian state. In this case, this involved a few Russian government officials who, because of their ties to Western economic institutions, were able to work around elected officials.

The story begins with Western governments searching for 'Russian reformers' to work with to transform the Russian economy. They focused on a group of men from

Chubais, who spoke English, had Western contacts, and was conversant with the aid-donor vernacular of 'markets,' 'reform,' and 'civil society,' served as a useful intermediary both for American advisors and for the Russian government of Boris Yeltsin. Chubais was appointed head of

The privatization program led by Chubais shaped the new distribution of wealth in

began to associate terms such as market economy, economic reform, and the West with dubious activities that benefited a few at the expense of everyone else who suffered a devastating decline from the standard of living under socialism (Wedel 1998a: 132-133).

In addition, the Clan leveraged its support from USAID and HIID to solicit funds from other international donor agencies. One of the agencies set up by the Clan and HIID was the

Unfortunately, the Clan seemed less interested in market reform than in establishing political power. Furthermore, they were not well regarded by Russians, who considered them little more than a communist-styled group that created and shared profits (Wedel 1999:11). As a result, by supporting one specific political faction,

The situation was also ripe for corruption. Members of the Clan and of HIID were in the position of recommending aid policies while being, at the same time, recipients of aid money, as well as overseeing aid contracts. The situation made it easy for Clan members and supporters to, as Wedel put it, 'work both sides of the table.'

It perhaps is not surprising that in September 2000, the

To what extent the case of foreign aid to

Targeting Vulnerable Populations: The Grameen Bank and Microcredit

As we saw in the case of the 1949 famine in

One oft-cited

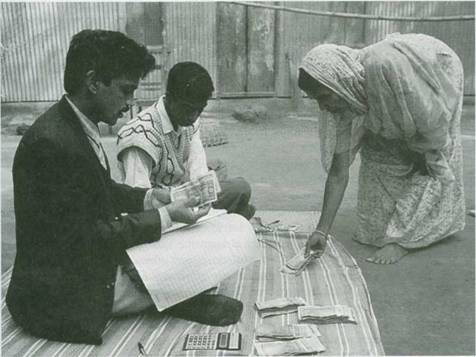

example of programs to aid women is the Grameen Bank in

mostly poor women who, with no collateral, are unable to borrow from regular banks will enable them to establish small retail businesses (e.g., vegetable vendor, fruit seller, tea stall owner, lime seller, egg seller) or small manufacturing businesses (e.g., incense maker, plastic flower maker, wire bag maker, mat weaver, toy maker) whose income will enable them to pay back the loan with interest.

The Grameen

Bank was founded by Mohammad Yunus, an economics professor at

The vast majority of borrowers from the Grameen Bank are women. Here a lender makes one of her regular loan payments.

qualitative change in the living conditions of more members of the household. As of 2000, the Grameen Bank had lent more than $2 billion to more than 2 million villagers, most of them women.

The Grameen Bank is also unique because it combines its lending activities with a regimented social program. Money is lent, not to individuals, but to groups of five to six persons who, as a group, meet regularly with bank staff. They are required to observe the bank's four major principles: discipline, unity, courage, and hard work. They must also pledge to shun child marriage, keep families small, build and use pit latrines, and plant as many seedlings as possible during planting seasons. At meetings they are required to salute, recite their obligations, do physical drills, and sit in rows of five to mark their group affiliation. Furthermore, they are responsible, as a group, for the repayment of loans; that is, if one person in the group defaults, the others in the group are responsible for the payment.

The question we need to ask is whether extending financial credit to poor women serves to empower them economically, socially, or politically. Some research suggests that women borrowers work more, have higher incomes, and eat better (Wahid 1994). Other ethnographic research (Schuler and Hashemi 1994) suggests that bank membership not only empowers women economically but significantly increases contraceptive use and fertility control. One study revealed that over 50 percent of Grameen members escaped poverty over a ten-year period, as opposed to only 5 percent in a control group (Gibbons and Sukor 1994).

However, while the Grameen Bank and microcredit institutions themselves have been widely praised by development agencies and emulated worldwide, recent research by anthropologist Aminur Rahman (1999) suggests that the highly patriarchal structure of

Rahman also questioned whether the loans were economically viable. He found that only 33 percent of installment payments came from the investment, 10 percent were paid by using the principal from the loan, and 57 percent were paid from other sources: relatives, peers, moneylenders, and others.

The loans may also negatively affect relations between women and other family members. While 18 percent of the 120 women borrowers interviewed by Rahman (1999:123) reported a decrease in verbal and physical assault because of involvement with the bank, 57 percent reported an increase in verbal assault, and 13 percent reported an increase in verbal and physical assault.

Rahman (1999:148) concludes that women are targeted for microcredit largely because they are vulnerable, submissive, shy, passive, immobile, and easy to discipline. It is only because of their vulnerable societal position that women regularly attend the weekly meetings of borrowers and honor the rigid schedule for repayment of loans. For example, if a woman fails to make installment payments on time, according to one of Rahman's informants, she is verbally humiliated by peers and male bank workers. If a woman is publicly humiliated, it gives males in the household a bad reputation. Peers may take the defaulter and lock her in the bank building. To do this to a man would mean almost nothing in the village; but, for a woman it will bring durnam ('bad reputation') to her household, lineage, and village (Rahman 1999:75).

Whether the problems reported by Rahman in Bangladesh are an anomaly in microcredit, attributable to the society's highly patriarchal social structure, or whether other microcredit programs have similar problems is difficult to say. However, as Rahman (1999: 150-151) puts it,

loans alone (which are also debt-liability), without viable opportunities for women to transform the power relations and create their own spaces in the prevailing power structure, make equitable development and empowerment of women unattainable in the society.

Conclusion

It is apparent that hunger is not caused by a lack of food, but rather by some people's lack of ability to purchase food. It is apparent, also, that the poverty that causes hunger is a consequence of global economic forces, such as the financial debt that peripheral countries accumulated in the 1970s. Other instances of hunger and famine are generally a consequence of political unrest. Even in relatively wealthy countries such as

We have seen, furthermore, that the economic policies of the wealthy countries are designed not to help the poor countries but to further corporate and political agendas. Economic development programs have, by and large, done very little to help their intended beneficiaries and, in many cases, have created enormous suffering and economic and environmental devastation.

Finally, we examined the role of women in development, along with programs and initiatives, such as the Grameen Bank, that have attempted to reduce poverty and the threat of hunger by improving the position and power of women. We have also seen, however, that their potential for success is severely compromised in societies in which women are oppressed.
