Predicting Protein Structure and Function from Sequence

The amino acid sequence of a protein contains interesting information in and of itself. A protein sequence can be compared and contrasted with the sequences of other proteins to establish its relationship, if any, to known protein families, and to provide information about the evolution of biochemical function. However, for the purpose of understanding protein function, the 3D structure of a protein is far more useful than its sequence.

The key property of proteins that allows them to perform a variety of biochemical functions is the sequence of amino acids in the protein chain, which somehow uniquely determines the 3D structure of the protein.[1] Given 20 amino acid possibilities, there are a vast number of ways they can be combined to make up even a short protein sequence, which means that given time, organisms can evolve proteins that carry out practically any imaginable purpose.

[1] That 'somehow,' incidentally, represents several decades of work by hundreds of researchers, on a fundamental question that remains open to this day.

Each time a particular protein chain is synthesized in the cell, it folds together so that each of the critical chemical groups for that particular protein's function is brought into a precise geometric arrangement. The fold assumed by a protein sequence doesn't vary. Every occurrence of that particular protein folds into exactly the same structure.

Despite this consistency on the part of proteins, no one has figured out how to accurately predict the 3D structure that a protein will fold into based on its sequence alone. Patterns are clearly present in the amino acid sequences of proteins, but those patterns are degenerate; that is, more than one sequence can specify a particular type of fold. While there are thousands upon thousands of ways amino acids can combine to form a sequence of a particular length, the number of unique ways that a protein structure can organize itself seems to be much smaller. Only a few hundred unique protein folds have been observed in the Protein Data Bank. Proteins with almost completely nonhomologous sequences nonetheless fold into structures that are similar. And so, prediction of structure from sequence is a difficult problem.

10.1 Determining the Structures of Proteins

If we can experimentally determine the structures of proteins, and structure prediction is so hard, why bother with predicting structure at all? The answer is that solving protein structures is difficult, and there are many bottlenecks in the experimental process. Although the first protein structure was determined decades before the first DNA sequence, the protein structure database has grown far more slowly in the interim than the sequence database. There are now on the order of 10,000 protein structures in the PDB, and on the order of 10 million gene sequences in GenBank. Only about 3,000 unique protein structures have been solved (excluding structures of proteins that are more than 95% identical in sequence). Approximately 1,000 of these are from proteins that are substantially different from each other (no more than 25% identical in sequence).

10.1.1 Solving Protein Structures by X-ray Crystallography

In the late 1930s, it was already known that proteins were made up of amino acids, although it had not yet been proven that these components came together in a unique sequence. Linus Pauling and Robert Corey began to use x-ray crystallography to study the atomic structures of amino acids and peptides.

Pure proteins had been crystallized by the time that Pauling and Corey began their experiments. However, x-ray crystallography requires large, unflawed protein crystals, and the technology of protein purification and crystallization had not advanced to the point of producing useful crystals. What Pauling and Corey did discover in their studies of amino acids and peptides was that the peptide bond is flat and rigid, and that the carbonyl oxygen is almost always on the opposite side of the peptide bond from the amino hydrogen. (See the illustration of a peptide bond in Figure 9-1.) Using this information to constrain their models, along with the atomic bond lengths and angles that they observed for amino acids, Pauling and Corey built structural models of polypeptide chains. As a result, they were able to propose two types of repetitive structure that occur in proteins: the alpha helix and the beta sheet, as shown previously in Chapter 9.

In experiments that began in the early 1950s, John Kendrew determined the structure of a protein called myoglobin, and Max Perutz determined the structure of a similar protein called hemoglobin. Both proteins are oxygen transporters, easily isolated in large quantities from blood and readily crystallized. Obtaining x-ray data of reasonably high quality and analyzing it without the aid of modern computers took several years. The structures of hemoglobin and myoglobin were found to be composed of high-density rods of the dimensions expected for Pauling's proposed alpha helix. Two years later, a much higher-quality crystallographic data set allowed the positions of 1200 of myoglobin's 1260 atoms to be determined exactly. The experiments of Kendrew and Perutz paved the way for x-ray crystallographic analysis of other proteins.

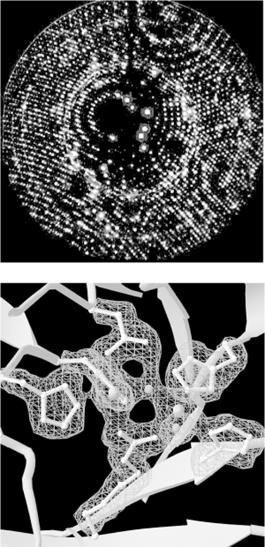

In x-ray crystallography, a crystal of a substance is placed in an x-ray beam. X-rays are reflected by the electron clouds surrounding the atoms in the crystal. In a protein crystal, individual protein molecules are arranged in a regular lattice, so x-rays are reflected by the crystal in regular patterns. The x-ray reflections scattered from a protein crystal can be analyzed to produce an electron density map of the protein (see Figure 10-1: images are courtesy of the Holden Group, University of Wisconsin, Madison, and Bruker Analytical X Ray Systems). Protein atomic coordinates are produced by modeling the best possible way for the atoms making up the known sequence of the protein to fit into this electron density. The fitting process isn't unambiguous; there are many incrementally different ways in which an atomic structure can be fit into an electron density map, and not all of them are chemically correct. A protein structure isn't an exact representation of the positions of atoms in the crystal; it's simply the model that best fits both the electron density map of the protein and the stereochemical constraints that govern protein structures.

Figure 10-1. X-ray diffraction pattern and corresponding electron density map of the 3D structure of zinc-containing phosphodiesterase

In order to determine a protein structure by x-ray crystallography, an extremely pure protein sample is needed. The protein sample has to form crystals that are relatively large (about 0.5 mm) and singular, without flaws. Producing large samples of pure protein has become easier with recombinant DNA technology. However, crystallizing proteins is still somewhat of an art form. There is no generic recipe for crystallization conditions (e.g., the salt content and pH of the protein solution) that cause proteins to crystallize rapidly, and even when the right solution conditions are found, it may take months for crystals of suitable size to form.

Many proteins aren't amenable to crystallization at all. For instance, proteins that do their work in the cell membrane usually aren't soluble in water and tend to aggregate in solution, so it's difficult to solve the structures of membrane proteins by x-ray crystallography. Integral membrane proteins account for about 30% of the protein complement (proteome) of living things, and yet fewer than a dozen proteins of this type have been crystallized in a pure enough form for their structures to be solved at atomic resolution.

10.1.2 Solving Structures by NMR Spectroscopy

An increasing number of protein structures are being solved by nuclear magnetic resonance (NMR) spectroscopy. Figure 10-2 shows raw data from NMR spectroscopy. NMR detects atomic nuclei with nonzero spin; the signals produced by these nuclei are shifted in the magnetic field depending on their electronic environment. By interpreting the chemical shifts observed in the NMR spectrum of a molecule, distances between particular atoms in the molecule can be estimated. (The image in Figure 10-2 is courtesy of Clemens Anklin, Bruker Analytical X Ray Systems.)

Figure 10-2. NOESY (2D NMR) spectrum of lysozyme

To be studied using NMR, a protein needs to be small enough to rotate rapidly in solution (on the order of 30 kilodaltons in molecular weight), soluble at high concentrations, and stable at room temperature for time periods as long as several days.

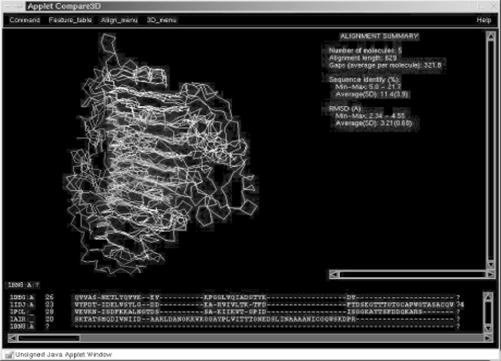

Analysis of the chemical shift data from an NMR experiment produces a set of distance constraints between labeled atoms in a protein. Solving an NMR structure means producing a model or set of models that manage to satisfy all the known distance constraints determined by the NMR experiment, as well as the general stereochemical constraints that govern protein structures. NMR models are often released in groups of 20-40 models, because the solution to a structure determined by NMR is more ambiguous than the solution to a structure determined by crystallography. An NMR average structure is created by averaging such a group of models (Figure 10-3). Depending on how this averaging is done, stereochemical errors may be introduced into the resulting structure, so it's generally wise to check the quality of average structures before using them in modeling.
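To make the averaging caveat concrete, here is a minimal Python sketch. It assumes the models are already superimposed and share the same atom order; the function names, the `(i, j, max_dist)` constraint format, and the two-atom toy models are all hypothetical and are not taken from any NMR software package.

```python
import math

def distance(a, b):
    """Euclidean distance between two (x, y, z) points."""
    return math.sqrt(sum((p - q) ** 2 for p, q in zip(a, b)))

def violated_constraints(model, constraints):
    """Return the (i, j, max_dist) constraints that a model does not satisfy.

    The constraint format is an assumption made for this sketch: atoms i and j
    must lie no farther apart than max_dist.
    """
    return [(i, j, d) for i, j, d in constraints
            if distance(model[i], model[j]) > d]

def average_structure(models):
    """Average coordinates atom by atom across a group of models.

    Each model is a list of (x, y, z) tuples in the same atom order.
    """
    n_atoms = len(models[0])
    if any(len(m) != n_atoms for m in models):
        raise ValueError("all models must contain the same number of atoms")
    n_models = len(models)
    return [
        tuple(sum(m[i][k] for m in models) / n_models for k in range(3))
        for i in range(n_atoms)
    ]

# Two toy models of one bond of the same length pointing in different directions.
model_a = [(0.0, 0.0, 0.0), (1.5, 0.0, 0.0)]
model_b = [(0.0, 0.0, 0.0), (0.0, 1.5, 0.0)]
averaged = average_structure([model_a, model_b])

print(distance(model_a[0], model_a[1]))    # bond length in an individual model
print(distance(averaged[0], averaged[1]))  # shorter bond in the averaged model
```

Each individual model has a sensible bond length, but the plain coordinate average does not, which is one way an averaged structure can pick up stereochemical errors.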

Figure 10-3. Structural diversity in a set of NMR models

10.2 Predicting the Structures of Proteins

Ideally, what we'd like to do in analyzing proteins is take the sequence of a protein, which is cheap to obtain experimentally, and predict the structure of the protein, which is expensive and sometimes impossible to determine experimentally. It would also be interesting to be able to accurately predict function from sequence, identify functional sites in uncharacterized 3D structures, and eventually, build designed proteins: molecular machines that do whatever we need them to do. But without an understanding of how sequence determines structure, these other goals can't reliably be achieved.

There are two approaches in computational modeling of protein structure. The first is knowledge-based modeling. Knowledge-based methods employ parameters extracted from the database of existing structures to evaluate and optimize structures or predict structure from sequence (the protein structure prediction problem). The second approach is based on simulation of physical forces and molecular dynamics. Physicochemical simulations are often used to attempt to model how a protein folds into its compact, functional, native form from a nonfunctional, not-as-compact, denatured form (the protein folding problem). In this chapter we focus on knowledge-based protein structure prediction and analysis methods in which bioinformatics plays an important role.

Ab-initio protein structure prediction from protein sequence remains an unsolved problem in computational biology. Although many researchers have worked to develop methods for structure prediction, the only methods that produce a large number of successful 3D structure predictions are those based on sequence homology. If you have a protein sequence and you want to know its structure, you either need a homologous structure to use as a template for model-building, or you need to find a crystallographer who will collaborate with you to solve the structure experimentally.

The protein-structure community is addressing the database gap between sequence and structure in a couple of ways. Pilot projects in structural genomics (the effort to experimentally solve all, or some large fraction of, the protein structures encoded by an entire genome) are underway at several institutions. However, these projects stand little chance of catching up to the sheer volume of sequence data that's being produced, at least in the near future.

10.2.1 CASP: The Search for the Holy Grail

In light of the database gap, computational structure prediction is a hot target. It's often been referred to as the 'holy grail' of computational biology; it's both an important goal and an elusive, perhaps impossible, goal. However, it's possible to track progress in the area of protein structure prediction in the literature and try out approaches that have shown partial success.

Every two years, structure prediction research groups compete in the Community Wide Experiment on the Critical Assessment of Techniques for Protein Structure Prediction (CASP, https://predictioncenter.llnl.gov/). The results of the CASP competition showcase the latest methods for protein-structure prediction. CASP has three areas of competition: homology modeling, threading, and ab initio prediction. In addition, CASP is a testing ground for new methods of evaluating the correctness of structure predictions.

Homology modeling focuses on the use of a structural template derived from known structures to build an all-atom model of a protein. The quality of CASP predictions in 1998 showed that structure prediction based on homology is very successful in producing reasonable models in any case in which a significantly homologous structure was available. The challenge for homology-based prediction, as for sequence alignment, is detecting meaningful sequence homology in the Twilight Zone, below 25% sequence identity.

Threading methods take the amino acid sequence of an uncharacterized protein, rapidly compute models based on existing 3D structures, and then evaluate these models to determine how well the unknown amino acid sequence 'fits' each template structure. All the threading methods reported in the most recent CASP competition produce accurate structural models in less than half of the cases in which they are applied. They are more successfully used to detect remote homologies that can't be detected by standard sequence alignment; if parts of a sequence fit a fold well, an alignment can generally be inferred, although there may not be enough information to build a complete model.

Ab-initio prediction methods focus on building structure with no prior information. While none of the ab-initio methods evaluated in CASP 3 produce accurate models with any reliability, a variety of methods are showing some promise in this area. One informatics-based strategy for structure prediction has been to develop representative libraries of short structural segments out of which structures can be built. Since structural 'words' that aren't in the library of segments are out of bounds, the structural space to be searched in model building is somewhat constrained. Another common ab-initio method is to use a reduced representation of the protein structure to simulate folding. In these methods, proteins can be represented as beads on a string. Each amino acid, or each fixed secondary structure unit in some approaches, becomes a bead with assigned properties that attract and repel other types of beads, and statistical mechanical simulation methods are used to search the conformational space available to the simplified model. These methods can be successful in identifying protein folds, even when the details of the structure can't be predicted.

10.3 From 3D to 1D

Proteins and DNA are, in reality, complicated chemical structures made up of thousands or even millions of atoms. Simulating such complicated structures explicitly isn't possible even with modern computers, so computational biologists use abstractions of proteins and DNA when developing analytical methods. The most commonly used abstraction of biological macromolecules is the single-letter sequence. However, reducing the information content of a complicated structure to a simple sequence code leaves behind valuable information.

The sequence analysis methods discussed in Chapter 7 and Chapter 8 treat proteins as strings of characters. For the purpose of sequence comparison, the character sequence of a protein is almost a sufficient representation of a protein structure. However, even the need for substitution matrices in scoring protein sequence alignments points to the more complicated chemical nature of proteins. Some amino acids are chemically similar to each other and likely to substitute for each other. Some are different. Some are large, and some are small. Some are polar; some are nonpolar. Substitution matrices are a simple, quantitative way to map amino acid property information onto a linear sequence. Asking no questions about the nature of the similarities, substitution matrices reflect the tendencies of one amino acid to substitute for another, but that is all.

However, each amino acid residue in a protein structure (or each base in a DNA or RNA structure, as we are beginning to learn) doesn't exist just within its sequence context. 1D information has not proven sufficient to show unambiguously how protein structure and function are determined from sequence. The 3D structural and chemical context of a residue contains many types of information.

Until quite recently, 3D information about protein structure was usually condensed into a more easily analyzable form via 3D-to-1D mapping. A property extracted from a database can be mapped, usually in the form of a numerical or alphabetic character score, to each residue in a sequence. Knowledge-based methods for secondary structure prediction were one of the first uses of 3D-to-1D mapping. By mapping secondary structure context onto sequence information as a property (attaching a code representing 'helix,' 'sheet,' or 'coil' to each amino acid), a set of secondary structure propensities can be derived from the structure database and then used to predict the secondary structure content of new sequences.
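As a rough illustration of how such propensities might be derived, the Python sketch below counts how often each amino acid occurs in each state and divides by how often it occurs overall. The propensity definition (frequency within a state divided by overall frequency), the H/E/C state codes, and the toy input are assumptions chosen for the example; real methods use large, curated structure sets and more careful statistics.

```python
from collections import Counter

def secondary_structure_propensities(labeled_chains):
    """Estimate a propensity for each (amino acid, state) pair.

    labeled_chains: list of (sequence, states) string pairs of equal length,
    where states uses H (helix), E (sheet), and C (coil).
    Propensity = frequency of the amino acid within the state divided by its
    frequency in the whole data set; values above 1 mean the residue is
    over-represented in that state.
    """
    aa_counts = Counter()
    state_counts = Counter()
    pair_counts = Counter()
    for sequence, states in labeled_chains:
        if len(sequence) != len(states):
            raise ValueError("sequence and state strings must be the same length")
        for aa, state in zip(sequence, states):
            aa_counts[aa] += 1
            state_counts[state] += 1
            pair_counts[(aa, state)] += 1

    total = sum(aa_counts.values())
    propensities = {}
    for (aa, state), n in pair_counts.items():
        freq_in_state = n / state_counts[state]
        freq_overall = aa_counts[aa] / total
        propensities[(aa, state)] = freq_in_state / freq_overall
    return propensities

# Made-up training data, for illustration only.
toy = [("AEAG", "HHCC"), ("AAGG", "HHCC")]
for key, value in sorted(secondary_structure_propensities(toy).items()):
    print(key, round(value, 2))
```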

What important properties does each amino acid in a protein sequence have, besides occurrence in a secondary structure? Some commonly used properties are solvent accessibility, acid/base properties, polarizability, nearest sequence neighbors, and nearest spatial neighbors. All these properties have something to do with intermolecular interactions, as discussed in Chapter 9.

10.4 Feature Detection in Protein Sequences

Protein sequence analysis is based to some extent on understanding the physicochemical properties of the chemical components of the protein chain, and to some extent on knowing the frequency of occurrence of particular amino acids in specific positions in protein structures and substructures. Although protein sequence-analysis tools operate on 1D sequence data, they contain implicit assumptions about how structural features map to sequence data. Before using these tools, it's best to know some protein biochemistry.

Features in protein sequences represent the details of the protein's function. These usually include sites of post-translational modifications and localization signals. Post-translational modifications are chemical changes made to the protein after it's translated from a messenger RNA. They include truncations of the protein (cleavages) and the addition of a chemical group to regulate the behavior of the protein (phosphorylation, glycosylation, and acetylation are common examples). Localization or targeting signals are used by the cell to ensure that proteins are in the right place at the right time. They include nuclear localization signals, ER targeting peptides, and transmembrane helices (which we saw in Chapter 9).

Protein sequence feature detection is often the first problem in computational biology tackled by molecular biologists. Unfortunately, the software tools used for feature detection aren't centrally located. They are scattered across the Web on personal or laboratory sites, and to find them you'll need to do some digging using your favorite search engine.

One collection of web-based resources for protein sequence feature detection is maintained at the Technical University of Denmark's Center for Biological Sequence Analysis Prediction Servers (https://www.cbs.dtu.dk/services/). This site provides access to server-based programs for finding (among other things) cleavage sites, glycosylation sites, and subcellular localization signals. These tools all work similarly; they search for simple sequence patterns, or profiles, that are known to be associated with various post-translational modifications. The programs have standardized interfaces and are straightforward to use: each has a submission form into which you paste your sequence of interest, and each returns an HTML page containing the results.
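To give a feel for what a simple sequence pattern search looks like, here is a small Python sketch that scans for the N-glycosylation sequon, written in PROSITE style as N-{P}-[ST]-{P}. It is offered as an illustration of pattern matching in general, not as a description of how any of the servers above work; the function name and the test sequence are made up, and a pattern match only flags a candidate site.

```python
import re

# N-{P}-[ST]-{P}: Asn, any residue except Pro, Ser or Thr, any residue except Pro.
# The lookahead lets overlapping candidate sites be reported.
N_GLYCOSYLATION = re.compile(r"(?=(N[^P][ST][^P]))")

def find_n_glycosylation_sites(sequence):
    """Return (1-based position, matched tetrapeptide) for each candidate site."""
    sequence = sequence.upper()
    return [(m.start() + 1, m.group(1))
            for m in N_GLYCOSYLATION.finditer(sequence)]

toy_sequence = "MKNGTALNPSWNNTSQ"  # invented sequence, for illustration only
for position, motif in find_n_glycosylation_sites(toy_sequence):
    print(position, motif)
```

Profile-based tools go further than this by weighting each position, which is why they tend to produce fewer false positives than a bare pattern.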

10.5 Secondary Structure Prediction

Secondary structure prediction is often regarded as a first step in predicting the structure of a protein. As with any prediction method, a healthy amount of skepticism should be employed in interpreting the output of these programs as actual predictions of secondary structure. By the same token, secondary structure predictions can help you analyze and compare protein sequences. In this section, we briefly survey secondary structure prediction methods, the ways in which they are measured, and some applications.

Protein secondary structure prediction is the classification of amino acids in a protein sequence according to their predicted local structure. Secondary structure is usually divided into one of three types (alpha helix, beta sheet, or coil), although the number of states depends on the model being used.

Secondary structure prediction methods can be divided into alignment-based and single sequence-based methods. Alignment-based secondary structure prediction is quite intuitive and related to the concept of sequence profiles. In an alignment-based secondary structure prediction, the investigator finds a family of sequences that are similar to the unknown. The homologous regions in the family of sequences are then assumed to share the same secondary structure and the prediction is made not based on one sequence but on the consensus of all the sequences in the set. Single sequence-based approaches, on the other hand, predict local structure for only one unknown.

10.5.1 Alignment-Based and Hybrid Methods

Modern methods for secondary structure prediction exploit information from multiple sequence alignments, or combinations of predictions from multiple methods, or both. These methods claim accuracy in the 70-77% range. Many of these programs are available for use over the Web. They take a sequence (usually in FASTA format) as input, execute some procedure on it, then return a prediction, usually by email, since the prediction algorithms are compute-intensive and tend to be run in a queue. The following is a list of some of the most commonly used methods:

PHD

PHD combines results from a number of neural networks, each of which predicts the secondary structure of a residue based on the local sequence context and on global sequence characteristics (protein length, amino acid frequencies, etc.). The final prediction, then, is an arithmetic average of the output of each of these neural networks. Such combination schemes are known as jury decision or (more colloquially) winner-take-all methods. PHD is regarded as a standard for secondary structure prediction.

PSIPRED

PSIPRED combines neural network predictions with a multiple sequence alignment derived from a PSI-BLAST database search. PSIPRED was one of the top performers on secondary structure prediction at CASP 3.

JPred

JPred secondary structure predictions are taken from a consensus of several other complementary prediction methods, supplemented by multiple sequence alignment information. JPred is another one of the top-performing secondary structure predictors. The JPred server returns output that can in turn be displayed, edited, and saved for use by other programs using the Jalview alignment editor.

PREDATOR

PREDATOR combines multiple sequence alignment information with the hydrogen bonding characteristics of the amino acids to predict secondary structure.

PSA

PSA is a Markov model-based approach to secondary structure prediction. It's notable for its detailed graphical output, which represents predicted probabilities of helix, sheet, and coil states for each position in the protein sequence.
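The jury decision idea described for PHD, averaging the outputs of several networks and then taking the highest-scoring state, can be sketched in a few lines of Python. This is only a schematic of the averaging step: the per-residue dictionaries of H/E/C scores, the function name, and the numbers are assumptions for the example and do not reflect PHD's actual data formats.

```python
STATES = "HEC"  # helix, sheet, coil

def jury_decision(network_outputs):
    """Average per-residue state scores across networks, then pick the top state.

    network_outputs: one list per network; each list holds one dict per residue
    mapping "H", "E", and "C" to that network's score for the residue.
    Ties are broken in the order H, E, C.
    """
    n_networks = len(network_outputs)
    n_residues = len(network_outputs[0])
    if any(len(net) != n_residues for net in network_outputs):
        raise ValueError("every network must score the same number of residues")

    prediction = []
    for i in range(n_residues):
        averaged = {
            state: sum(net[i][state] for net in network_outputs) / n_networks
            for state in STATES
        }
        prediction.append(max(STATES, key=averaged.get))
    return "".join(prediction)

# Invented scores from two hypothetical networks for a two-residue peptide.
net1 = [{"H": 0.6, "E": 0.3, "C": 0.1}, {"H": 0.2, "E": 0.3, "C": 0.5}]
net2 = [{"H": 0.2, "E": 0.7, "C": 0.1}, {"H": 0.1, "E": 0.2, "C": 0.7}]
print(jury_decision([net1, net2]))
```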

10.5.2 Single Sequence Prediction Methods

The first structure prediction methods in the 1970s were single sequence approaches. The Chou-Fasman method uses rules derived from physicochemical data about amino acids to predict secondary structure. The GOR algorithm (named for its authors, Garnier, Osguthorpe, and Robson) and its successors use information about the frequency with which residues occur in helices, sheets, and coils in proteins of known structure to predict structures. Both methods are still in use, often on multiple-tool servers such as the SDSC Biology Workbench. Modern methods based on structural rules and frequencies can achieve prediction accuracies in the 70-75% range, especially when families of related sequences are analyzed, rather than single sequences.

The surge in popularity of artificial intelligence methods in the 1980s gave rise to AI-based approaches to secondary structure prediction, most notably the pattern-recognition approaches developed in the laboratories of Fred Cohen (University of California, San Francisco) and Michael Sternberg (Imperial Cancer Research Fund), and the neural network applications of Terrence Sejnowski and N. Qian (then at Johns Hopkins). These methods exploited much the same information as the earlier single-sequence methods, using AI techniques to automate knowledge acquisition and application.

10.5.3 Measuring Prediction Accuracy

Authors who present papers on secondary structure prediction methods often use a measure of prediction accuracy called Q3. The Q3 score is defined as:

Q3 = (true_positives + true_negatives) / total_residues
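In the three-state case, this amounts to counting the residues whose predicted state matches the observed state and dividing by the length of the protein. A minimal Python sketch under that reading follows; the function name and the example strings are made up.

```python
def q3_score(predicted, observed):
    """Fraction of residues whose predicted state matches the observed state.

    Both arguments are strings of per-residue state codes (for example H, E, C)
    and must be the same, nonzero length.
    """
    if len(predicted) != len(observed):
        raise ValueError("predicted and observed must be the same length")
    if not observed:
        raise ValueError("cannot score an empty sequence")
    correct = sum(p == o for p, o in zip(predicted, observed))
    return correct / len(observed)

# Invented prediction and reference assignment for a ten-residue segment.
print(q3_score("HHHHCCEEEE", "HHHCCCEEEC"))
```

Multiplying the result by 100 gives the percentage form in which Q3 is usually reported.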

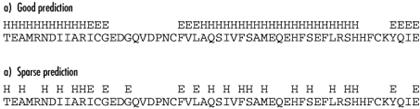

A second measure of prediction accuracy is the segment overlap score (Sov) proposed by Burkhard Rost and coworkers. The Sov metric tends to be more stringent than Q3, since it gives high scores to non-overlapping segments of a single kind of secondary structure, and penalizes sparse predictions (Figure 10-4).

Figure 10-4. Good and bad (sparse) secondary structure predictions

When comparing methods, it pays to be conservative; look at both the average scores and their standard deviations instead of the best reported score. As you can see, Q3 and Sov are fairly simple statistics. Unlike E-values reported in sequence comparison, neither is a test statistic; both measure the percent of residues predicted correctly instead of measuring the significance of prediction accuracy. And, as with genefinders, make sure you know what kind of data is used to train the prediction method.

10.5.4 Putting Predictions to Use

Originally, the goal of predicting secondary structure was to come up with the most accurate prediction possible. Many researchers took this as their goal, resulting in many gnawed fingernails and pulled-out hairs. As mentioned earlier, the hard-won lesson of secondary structure prediction is that it isn't very accurate. However, secondary structure prediction methods have practical applications in bioinformatics, particularly in detecting remote homologs. Drug companies compare secondary structure predictions to locate potential remote homologs in searches for drug targets. Patterns of predicted secondary structure can predict fold classes of proteins and select targets in structural genomics.

Secondary structure prediction tools such as PredictProtein and JPred combine the results of several approaches, including secondary structure prediction and threading methods. Using secondary structure predictions from several complementary methods (both single-sequence and homology-based approaches) can result in a better answer than using just one method. If all the methods agree on the predicted structure of a region, you can be more confident of the prediction than if it had been arrived at by only one or two programs. This is known as a voting procedure in machine learning.
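A simple per-residue majority vote shows the idea. The Python sketch below assumes each method returns a string of H/E/C codes of the same length; the function name and the three example predictions are invented, and real consensus servers use more elaborate combination rules.

```python
from collections import Counter

def consensus_prediction(predictions):
    """Combine per-residue predictions from several methods by majority vote.

    predictions: list of equal-length strings of state codes, one per method.
    Returns (consensus string, list of agreement fractions per residue).
    On a tie, the state that appears first among the tied methods wins.
    """
    length = len(predictions[0])
    if any(len(p) != length for p in predictions):
        raise ValueError("all predictions must be the same length")

    consensus = []
    agreement = []
    for column in zip(*predictions):
        state, votes = Counter(column).most_common(1)[0]
        consensus.append(state)
        agreement.append(votes / len(predictions))
    return "".join(consensus), agreement

# Invented outputs from three hypothetical methods.
states, support = consensus_prediction(["HHHC", "HHCC", "HECC"])
print(states)
print([round(s, 2) for s in support])
```

Positions where the agreement fraction is 1.0 are the regions where all methods concur, which is where the prediction deserves the most confidence.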

As with any other prediction, secondary structure predictions are most useful if some information about the secondary structure is known. For example, if the structure of even a short segment of the protein has been determined, that data can be used as a sanity check for the prediction.

10.5.5 Predicting Transmembrane Helices

Transmembrane helix prediction is related to secondary structure prediction. It involves the recognition of regions in protein sequences that can be inserted into cell membranes. Methods for predicting transmembrane helices in protein sequences identify regions in the sequence that can fold into a helix and exist in the hydrophobic environment of the membrane. Transmembrane helix prediction grew out of research into hydrophobicity in the early 1980s, pioneered by Russell Doolittle (University of California, San Diego). Again, there are a number of transmembrane helix prediction servers available over the Web. Programs that have emerged as standards for transmembrane helix prediction include TMHMM (https://www.cbs.dtu.dk/services/TMHMM-1.0/), MEMSAT (https://insulin.brunel.ac.uk/~jones/memsat.html), and TopPred (https://www.sbc.su.se/~erikw/toppred2/).

Although structure determination for soluble proteins can be difficult, a far greater challenge is structure determination for membrane-bound proteins. Some of the most interesting biological processes involve membrane proteins: for example, photosynthesis, vision, neuron excitation, respiration, immune response, and the passing of signals from one cell to another. Yet only a handful of membrane proteins have been crystallized. Because these proteins don't exist entirely in aqueous solution, their physicochemical properties are very different from those of soluble proteins and they require unusual conditions to crystallize, if they are amenable to crystallization at all.

As a result, many computer programs exist that detect transmembrane segments in protein sequence. These segments have distinct characteristics that make it possible to detect them with reasonable certainty. In order to span a cell membrane, an alpha helix must be about 17-25 amino acids in length. Because the interior of a cell membrane is composed of the long hydrocarbon chains of fatty acids, an alpha helix embedded in the membrane must present a relatively nonpolar surface to the membrane in order for its position to be energetically favorable.

Early transmembrane segment identification programs exploited these properties directly. They analyze every window of 17-25 residues in an amino acid sequence and assign a hydrophobicity score to each window; high-scoring segments are then predicted to be transmembrane helices. Recent improvements to these early methods have boosted prediction of individual transmembrane segments to an accuracy level of 90-95%.
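The windowing approach can be sketched in Python as follows. The sketch uses the Kyte-Doolittle hydropathy scale; the 19-residue window and the 1.6 cutoff are commonly quoted defaults for that scale and are assumptions here, as are the function names and the toy sequence. It is not the algorithm used by TMHMM, MEMSAT, or TopPred.

```python
# Kyte-Doolittle hydropathy values; larger means more hydrophobic.
KYTE_DOOLITTLE = {
    "A": 1.8, "R": -4.5, "N": -3.5, "D": -3.5, "C": 2.5,
    "Q": -3.5, "E": -3.5, "G": -0.4, "H": -3.2, "I": 4.5,
    "L": 3.8, "K": -3.9, "M": 1.9, "F": 2.8, "P": -1.6,
    "S": -0.8, "T": -0.7, "W": -0.9, "Y": -1.3, "V": 4.2,
}

def window_hydropathy(sequence, window=19):
    """Return (1-based start, mean hydropathy) for every full window.

    Residues missing from the scale (for example X) are scored as 0.0,
    which is a simplification made for this sketch.
    """
    scores = [KYTE_DOOLITTLE.get(aa, 0.0) for aa in sequence.upper()]
    return [
        (i + 1, sum(scores[i:i + window]) / window)
        for i in range(len(scores) - window + 1)
    ]

def candidate_tm_windows(sequence, window=19, threshold=1.6):
    """Keep only the windows whose mean hydropathy reaches the threshold."""
    return [(start, mean)
            for start, mean in window_hydropathy(sequence, window)
            if mean >= threshold]

# Invented sequence: a leucine stretch flanked by charged residues.
toy = "DDDD" + "L" * 19 + "KKKK"
best_start, best_mean = max(candidate_tm_windows(toy), key=lambda hit: hit[1])
print(best_start, round(best_mean, 2))
```

Overlapping high-scoring windows would normally be merged into a single predicted segment; that step is left out to keep the example short.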

The topology of the protein in the membrane isn't as easy to predict. The orientation of the first helix in the membrane determines the orientation of all the remaining helices. The connections of the helices can be categorized as falling on one side or the other of the membrane, but determining which side is which, physiologically, is more complicated.

10.5.6 Threading

The basic principle of structure analysis by threading is that an unknown amino acid sequence is fitted into (threaded through) a variety of existing 3D structures, and the fitness of the sequence to fold into that structure is evaluated. All threading methods operate on this premise, but they differ in their details.

Threading methods don't build a refined all-atom model of the protein; rather, they rapidly substitute amino acid positions in a known structure with the sidechains from the unknown sequence. Each sidechain position in a folded protein can be described in terms of its environment: to what extent the sidechain is exposed to solvent and, if it isn't exposed to solvent, what other amino acids it's in contact with. A threaded model is scored highly if hydrophobic residues are found in solvent-inaccessible environments and hydrophilic residues on the protein surface. But these high scores are possible only if buried charged and polar residues are found to have appropriate countercharges or hydrogen bonding partners, etc.
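To make the scoring idea concrete, here is a deliberately crude sketch. It assumes a gapless threading (one residue per template position), describes each template position only as buried or exposed, and uses an arbitrary +1/-1 score and a hand-picked set of hydrophobic residues. Real threading potentials are far richer, and this toy ignores the countercharge and hydrogen-bonding terms mentioned above.

```python
# Residues treated as hydrophobic in this toy example (an assumption).
HYDROPHOBIC = set("AVLIMFWC")


def environment_score(sequence, buried_flags):
    """Score a gapless threading of `sequence` onto a template.

    buried_flags[i] is True when template position i is solvent-inaccessible.
    A residue scores +1 if it is hydrophobic and buried, or polar and
    exposed, and -1 otherwise.
    """
    if len(sequence) != len(buried_flags):
        raise ValueError("sequence and template must have the same length")
    score = 0
    for aa, buried in zip(sequence.upper(), buried_flags):
        hydrophobic = aa in HYDROPHOBIC
        score += 1 if hydrophobic == buried else -1
    return score


def rank_folds(sequence, templates):
    """Return template names ordered from best to worst score."""
    scored = [(environment_score(sequence, flags), name)
              for name, flags in templates.items()]
    return [name for _, name in sorted(scored, reverse=True)]


if __name__ == "__main__":
    # Two hypothetical four-residue "folds" with opposite burial patterns.
    templates = {
        "foldA": [True, False, True, False],
        "foldB": [False, True, False, True],
    }
    print(rank_folds("LKVE", templates))
```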

Threading is most profitably used for fold recognition, rather than for model building. For this purpose, the UCLA-DOE Structure Prediction Server (https://www.doe-mbi.ucla.edu/people/frsur/frsur.html) is by far the easiest tool to use. It allows you to submit a single sequence and try out multiple fold-recognition and evaluation methods, including the DOE lab's own Gon prediction method as well as EMBL's TOPITS method and NIH's 123D+ method. Other features, including BLAST and FASTA searches, PROSITE searches, and Bowie profiles, which evaluate the fitness of a sequence for its apparent structure, are also available.

Another threading server, the 3D-PSSM server offered by the Biomolecular Modelling Laboratory of the Imperial Cancer Research Fund, provides a fold prediction based on a profile of the unknown sequence family. 3D-PSSM incorporates multiple analysis steps into a simple interface. First the unknown protein sequence is compared to a nonredundant protein sequence database using PSI-BLAST, and a position-specific scoring matrix (PSSM) for the protein is generated. The query PSSM is compared to all the sequences in the library database; the query sequence is also compared to 1D-PSSMs (PSSMs based on standard multiple sequence alignments) and 3D-PSSMs (PSSMs based on structural alignments) of all the protein families in the library database. Secondary structure predictions based on these comparisons are shown, aligned to known secondary structures of possible structural matches for the query protein. The results from the 3D-PSSM search are presented in an easy-to-understand graphical format, but they can also be downloaded, along with carbon-alpha-only models of the unknown sequence built using each possible structural match as a template.

Most threading methods are considered experimental, and new methods are always under development. More than one method can be used to help identify any unknown sequence, and the results interpreted as a consensus of multiple experts. The main thing to remember about any structural model you build using a threading server is that it's likely to lack atomic detail, and it's also likely to be based on a slightly or grossly incorrect alignment. The threading approach is designed to assess sequences as likely candidates to fit into particular folds, not to build usable models. Putative structural alignments generated using threading servers can serve as a basis for homology modeling, but they should be carefully examined and edited prior to building an all-atom model.

10.6 Predicting 3D Structure

As was stated earlier in the chapter, predicting protein structure from sequence is a difficult task, and no method yet works well in every case. There are, however, a number of tools that can predict 3D structure. They fall into two groups: homology modeling and ab-initio prediction.

10.6.1 Homology Modeling

Let's say you align a protein sequence (a 'target' sequence) against the sequence of another protein with a known structure. If the target sequence has a high level of similarity to the sequence with known structure (over the full length of the sequence), you can use that known structure as a template for the target protein with a reasonable degree of confidence.
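Two numbers are commonly used to judge whether a template is close enough: the percent identity over the aligned positions, and how much of the target the alignment covers. The sketch below computes both from a pair of gapped sequences of equal length, using '-' as the gap character. The function names are illustrative, and no particular identity cutoff is implied.

```python
def percent_identity(aligned_target, aligned_template):
    """Percent of aligned (non-gap) column pairs that are identical."""
    if len(aligned_target) != len(aligned_template):
        raise ValueError("aligned sequences must have equal length")
    aligned = identical = 0
    for a, b in zip(aligned_target.upper(), aligned_template.upper()):
        if a == "-" or b == "-":
            continue  # skip columns with a gap in either sequence
        aligned += 1
        if a == b:
            identical += 1
    return 100.0 * identical / aligned if aligned else 0.0


def target_coverage(aligned_target, aligned_template):
    """Fraction of target residues that are paired with a template residue."""
    target_len = sum(1 for a in aligned_target if a != "-")
    paired = sum(1 for a, b in zip(aligned_target, aligned_template)
                 if a != "-" and b != "-")
    return paired / target_len if target_len else 0.0


if __name__ == "__main__":
    target = "ACDEF-G"
    template = "ACNEFKG"
    print(percent_identity(target, template), target_coverage(target, template))
```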

There is a standard process that is used in homology modeling. While the programs that carry out each step may be different, the process is consistent:

Use the unknown sequence as a query to search for known protein structures.

Produce the best possible global alignment of the unknown sequence and the template sequence(s).

Build a model of the protein backbone, taking the backbone of the template structure as a model.

In regions in which there are gaps in either the target or the template, use a loop-modeling procedure to substitute segments of appropriate length.

Add sidechains to the model backbone.

Optimize positions of sidechains.

Optimize structure with energy minimization or knowledge-based optimization.
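The loop-modeling step in the process above depends on knowing where the alignment has gaps. As a small sketch (the function name and the 'insertion'/'deletion' labels are our own), the following code scans a target-template alignment and reports each gapped stretch, which is where a loop would have to be built or excised.

```python
def gap_regions(aligned_target, aligned_template):
    """Return (start, end, kind) for each gapped stretch of the alignment.

    Columns are 0-based and `end` is exclusive. An 'insertion' is a run of
    target residues with no template counterpart; a 'deletion' is a run of
    template residues with no target counterpart.
    """
    if len(aligned_target) != len(aligned_template):
        raise ValueError("aligned sequences must have equal length")
    regions = []
    kind, start = None, 0
    # A sentinel column with no gaps closes any stretch that runs to the end.
    columns = list(zip(aligned_target, aligned_template)) + [("x", "x")]
    for i, (t, s) in enumerate(columns):
        if s == "-" and t != "-":
            here = "insertion"
        elif t == "-" and s != "-":
            here = "deletion"
        else:
            here = None
        if here != kind:
            if kind is not None:
                regions.append((start, i, kind))
            kind, start = here, i
    return regions


if __name__ == "__main__":
    print(gap_regions("ACDEFGH--KL", "AC--FGHIIKL"))
```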

The key to a successful homology-modeling project isn't usually the software or server used to produce the 3D model. Your skill in designing a good alignment to a template structure is far more critical. A combination of standard sequence alignment methods, profile methods, and structural alignment techniques may be employed to produce this alignment, as we discuss in the example at the end of this chapter. Once a good alignment exists, there are several programs that can use the information in that alignment to produce a structural model.

10.6.1.1 Modeller

Modeller (https://guitar.rockefeller.edu/modeller/modeller.html) is a program for homology modeling. It's available free to academics as a standalone program or as part of MSI's Quanta package (https://www.msi.com).

Modeller has no graphical interface of its own, but once you are comfortable in the command-line environment, it's not all that difficult to use. Modeller executables can be downloaded from the web site at Rockefeller University, and installation is straightforward; follow the directions in the README file available with the distribution. There are several different executables available for each operating system; you should choose based on the size of the modeling problems you will use them for. The README file contains information on the array size limits of the various executables. There are limits on total number of atoms, total number of residues, and total number of sequences in the input alignment.

As input to Modeller, you'll need two input files: an alignment file and a Modeller script. The alignment file format is described in detail in the Modeller manpages; the Modeller script for a simple alignment consists of just a few lines written in the TOP language (Modeller's internal language). Modeller can calculate multiple models for any input. If the ENDING_MODEL value in the example script shown is set to a number other than one, more models are generated. Usually, it's preferable to generate more than one model, evaluate each model independently, and choose the best result as your final model.

The example provided in the Modeller documentation shows the setup for an alignment with a pregenerated alignment file between one known protein and one unknown sequence:

INCLUDE # Include the predefined TOP routines

SET ALNFILE = 'alignment.ali' # alignment filename

SET KNOWNS = '5fd1' # codes of the templates

SET SEQUENCE = '1fdx' # code of the target

SET ATOM_FILES_DIRECTORY = './:../atom_files' # directories for input atom files

SET STARTING_MODEL= 1 # index of the first model

SET ENDING_MODEL = 1 # index of the last model

# (determines how many models to calculate)

CALL ROUTINE = 'model' # do homology modeling

Modeller is run by giving the command mod scriptname. If you name your script fdx.top, the command is mod fdx.

Modeller is multifunctional and has built-in commands that will help you prepare your input:

SEQUENCE_SEARCH

Searches for similar sequences in a database of fold class representative structures

MALIGN3D

Aligns two or more structures

ALIGN

Aligns two blocks of sequences

CHECK_ALIGNMENT

Evaluates an alignment to be used for modeling

COMPARE_SEQUENCES

Scores sequences in an alignment based on pairwise similarity

SUPERPOSE

Superimposes one model on another or on the template structure

ENERGY

Generates a report of restraint violations in a modeled structure

Each command needs to be submitted to Modeller via a script that calls that command, as shown in the previous sample script. Dozens of other Modeller commands and full details of writing scripts are described in the Modeller manual.

One caveat in automated homology modeling is that sidechain atoms may not be correctly located in the resulting model; automatic model building methods focus on building a reasonable model of the structural backbone of the protein because homology provides that information with reasonable confidence. Homology doesn't provide information about sidechain orientation, so the main task of the automatic model builder is to avoid steric conflicts and improbable conformations rather than optimize sidechain orientations. Incorrect sidechain positions may be misleading if the goal of the model building is to explore functional mechanisms.

10.6.1.2 How Modeller builds a model

Though Modeller incorporates tools for sequence alignment and even database searching, the starting point for Modeller is a multiple sequence alignment between the target sequence and the template protein sequence(s). Modeller uses the template structures to generate a set of spatial restraints, which are applied to the target sequence. The restraints limit, for example, the distance between two residues in the model that's being built, based on the distance between the two homologous residues in the template structure. Restraints can also be applied to bond angles, dihedral angles, and pairs of dihedrals. By applying enough of these spatial restraints, Modeller effectively limits the number of conformations the model can assume.

The exact form of the restraints is based on a statistical analysis of differences between pairs of homologous structures. What those statistics contribute is a quantitative description of how much various properties are likely to vary among homologous structures. The amount of allowed variation between, for instance, equivalent alpha-carbon-to-alpha-carbon distances is expressed as a PDF, or probability density function.

What the use of PDF-based restraints allows you to do, in homology modeling, is to build a structure that isn't exactly like the template structure. Instead, the structure of the model is allowed to deviate from the template but only in a way consistent with differences found between homologous proteins of known structure. For instance, if a particular dihedral angle in your template structure has a value of -60°, the PDF-based restraint you apply should allow that dihedral angle to assume a value of -60° plus or minus some value. That value is determined by what is observed in known pairs of homologous structures, and it's assigned probabilistically, according to the form of the probability density function.
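To illustrate what a PDF-based restraint looks like numerically, the sketch below models the dihedral restraint as a single Gaussian centered on the template value. That functional form and the 15° spread are assumptions made for the example; the PDFs Modeller actually derives from structure statistics may be more complicated. The negative logarithm of the density gives a penalty that is smallest at the template value and grows as the model deviates from it.

```python
import math


def angle_difference(a, b):
    """Smallest signed difference a - b in degrees, in the range [-180, 180)."""
    return (a - b + 180.0) % 360.0 - 180.0


def gaussian_restraint_pdf(angle, template_angle, sigma):
    """Density of `angle` under a Gaussian centred on the template value."""
    d = angle_difference(angle, template_angle)
    return math.exp(-0.5 * (d / sigma) ** 2) / (sigma * math.sqrt(2.0 * math.pi))


def restraint_penalty(angle, template_angle, sigma):
    """Negative log density: small near the template value, larger away from it."""
    return -math.log(gaussian_restraint_pdf(angle, template_angle, sigma))


if __name__ == "__main__":
    # Template dihedral of -60 degrees with an assumed spread of 15 degrees.
    for model_angle in (-60.0, -45.0, 60.0):
        print(model_angle, restraint_penalty(model_angle, -60.0, 15.0))
```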

Homology-based spatial restraints aren't the only restraints applied to the model. A force field controlling proper stereochemistry is also applied, so that the model structure can't violate the rules of chemistry to satisfy the spatial restraints derived from the template structures. All chemical restraints and spatial restraints applied to the model are combined in a function (called an objective function) that's optimized in the course of the model building process.
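One way to picture such an objective function, offered as a sketch rather than as Modeller's exact formulation: suppose each homology-derived restraint i is a probability density p_i over some feature f_i of the model (a distance or a dihedral angle), and the restraints are treated as independent. The terms can then be combined by summing their negative logarithms and adding a stereochemical energy term. The symbols F, E_stereo, p_i, f_i, and R (the model coordinates) are our own notation.

```latex
F(\mathbf{R}) \;=\; E_{\text{stereo}}(\mathbf{R}) \;-\; \sum_{i} \ln p_i\bigl(f_i(\mathbf{R})\bigr)
```

Minimizing F favors conformations that are both probable under the template-derived restraints and chemically reasonable.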

10.6.1.3 ModBase: a database of automatically generated models

The developers of Modeller have made available a queryable online database of annotated homology models. The models are prepared using an automated prediction pipeline. The first step in the pipeline is to compare each unknown protein sequence with a database of existing protein structures. Proteins that have significant sequence homology to domains of known structures are then modeled using those structures as templates. Unknown sequences are aligned to known structures using the ALIGN2D command in Modeller, and 3D structures are built using the Modeller program. The final step in the pipeline is to evaluate the models; results of the evaluation step are presented to the ModBase user as part of the search results. Since this is all standard procedure for homology-model development that's managed by a group of expert homology modelers, checking ModBase before you set off to build a homology model on your own is highly recommended.

The general procedure for building a model with Modeller is to identify homologies between the unknown sequence and proteins of known structures, build a multiple alignment of known structures for use as a template, and apply the Modeller algorithm to the unknown sequence. Models can subsequently be evaluated using standard structure-evaluation methods.

10.6.1.4 The SWISS-MODEL server

SWISS-MODEL is an automated homology modeling web server based at the Swiss Institute of Bioinformatics. SWISS-MODEL allows you to submit a sequence and get back a structure automatically. The automated procedure that's used by SWISS-MODEL mimics the standard steps in a homology modeling project:

Uses BLAST to search the protein structure database for sequences of known structure

Selects templates and looks for domains that can be modeled based on non-homologous templates

Uses a model-building program to generate a model

Uses a molecular mechanics force field to optimize the model

You must supply an unknown sequence to initiate a SWISS-MODEL run in their First Approach mode; however, you can also select the template chains that are used in the model building process. This information is entered via a simple web form. You can have the results sent to you as a plain PDB file, or as a project file that can be opened using the SWISS-PDBViewer, a companion tool for the SWISS-MODEL server you can download and install on your own machine.

Although that sounds simple, such an automatic procedure is error-prone. In a nonautomated molecular modeling project, there is plenty of room for user intervention. SWISS-MODEL actually allows you to intervene in the process using their Project Mode. In Project Mode, you can use the SWISS-PDBViewer to align your template and target sequences manually, then write out a project file, and upload it to the SWISS-MODEL server.

10.6.2 Tools for Ab-Initio Prediction

Since ab-initio structure prediction from sequence has not been done with any great degree of success so far, we can't recommend software for doing this routinely. If you are interested in the ab-initio structure-prediction problem and want to familiarize yourself with current research in the field, we suggest you start with any of these tools: the software package RAMP developed by Ram Samudrala, the I-Sites/ROSETTA prediction server developed by David Baker and Chris Bystroff, and the ongoing work of John Moult. Recent journal articles describing these methods can serve as adequate entry points into the ab-initio protein structure prediction literature.

10.7 Putting It All Together: A Protein Modeling Project

So how do all of these tools work to produce a protein structure model from sequence? We haven't described every single point and click in this chapter, because most of the software is web-based and quite self-explanatory in that respect. However, you may still be wondering how you'd put it all together to manage a tough modeling project.

As an example, we built a model of a target sequence from CASP 4, the most recent CASP competition. We've deliberately chosen a difficult sequence to model. There are no unambiguously homologous structures in the PDB, though there are clues that can be brought together to align the target with a possible template and build a model. We make no claims that the model is correct; its purpose is to illustrate the kind of process you might go through to build a partial 3D model of a protein based on a distant similarity.

The process for building an initial homology model when you do have an obvious, strong homology to a known structure is much more straightforward: simply align the template and target along their full length, edit the alignment if necessary, write it out in a format that Modeller can read, and submit; or submit the sequence of your unknown to SWISS-MODEL in First Approach mode.

10.7.1 Finding Homologous Structures

The first step in any protein modeling project is to find a template structure (if possible) to base a homology model on.

Using the target sequence T0101 from CASP 4, identified as a '400 amino acid pectate lyase L' from a bacterium called Erwinia chrysanthemi, we searched the PDB for homologs. We started by using the PDB SearchFields form to initiate a FASTA search.

The results returned were disheartening at first glance. As the CASP target list indicated, there were no strong sequence homologies to the target to be found in the PDB. None of the matches had E-values less than 1, though there were several in the less-than-10 range. None of the matches spanned the full length of the protein, the longest matching being a 330 amino acid overlap with a chondroitinase, with an E-value of 3.9.
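When you have more than a handful of hits, it helps to tabulate E-value and coverage together. The sketch below does this for a list of hits; the `Hit` class, the function name, and the E-value cutoff of 1 are illustrative choices. The only hit taken from our search is the chondroitinase (identified below as 1DBG); the second entry is a made-up placeholder.

```python
from dataclasses import dataclass


@dataclass
class Hit:
    pdb_id: str
    e_value: float
    overlap: int  # number of residues in the aligned region


def summarize_hits(hits, query_length, e_cutoff=1.0):
    """Return one summary dict per hit, best E-value first."""
    rows = []
    for hit in sorted(hits, key=lambda h: h.e_value):
        rows.append({
            "pdb_id": hit.pdb_id,
            "e_value": hit.e_value,
            "coverage": hit.overlap / query_length,
            "significant": hit.e_value < e_cutoff,
        })
    return rows


if __name__ == "__main__":
    hits = [
        Hit("1DBG", 3.9, 330),   # the chondroitinase match reported above
        Hit("XXXX", 8.5, 120),   # hypothetical placeholder hit
    ]
    for row in summarize_hits(hits, query_length=400):
        print(row)
```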

Each of the top scoring proteins looked different, too, as you can see in Figure 10-5. The top match was an alpha-beta barrel type structure, while the second match (the chondroitinase) was a mainly beta structure with a few decorative alpha helices, and the third match was an entirely different, multidomain all-beta structure.

Out of curiosity, we also did a simple keyword search for pectate lyase in the PDB. There were eight pectate lyase structures listed, but none, apparently, were close enough in sequence to the T0101 target to be recognized as related by sequence information alone. None of these structures was classified as pectate lyase L; they included types A, E, and C. However, we observed that each of the pectate lyase molecules in the PDB had a common structural feature: a large, quasihelical arrangement of short beta strands known as a beta solenoid, or, less picturesquely, as a single-stranded right-handed beta helix (Figure 10-6).

Figure 10-5. Pictures of top three sequence matches of a target sequence from CASP 4

Figure 10-6. The beta-solenoid domain

10.7.2 Looking for Distant Homologies

We used CE to examine the structural neighbors of the known pectate lyases. Interestingly, one of the three proteins (1DBG, a chondroitinase from Flavobacterium heparinum) we first identified with FASTA as a sequence match for our target sequence showed up as a high-scoring structural neighbor of many of the known pectate lyases.

Although the homology between T0101 and these other pectate lyases wasn't significant, the sequence similarity between T0101 and their close structural neighbor 1DBG seemed to suggest that the structure of our target protein might be distantly related to that of the other pectate lyases (Figure 10-7). Note that the alignment in the figure shows a strongly conserved structural domain: the ladderlike structure at the right of the molecule where the chain traces coincide.

Figure 10-7. A structural alignment of known pectate lyase structures; the beta solenoid domain is visible as a ladderlike structure in the center of the molecule

However, in order to do any actual homology modeling, we need to somehow align the T0101 sequence to potential template structures. And since none of the pectate lyase sequences were similar enough to the unknown sequence to be aligned to it using standard pairwise alignment, we would need to get a little bit crafty with our alignment strategy.

10.7.3 Predicting Secondary Structure from Sequence

We applied several secondary structure prediction algorithms to the T0101 target sequence using the JPred structure prediction server. While the predictions from each method aren't exactly the same, we can see from Figure 10-8 that the consensus from JPred is clear: the T0101 sequence is predicted to form many short stretches of beta structure, exactly the pattern that is required to form the beta-solenoid domain.

Figure 10-8. Partial secondary structure predictions for T0101, from JPred
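A consensus of this kind can be approximated by a per-position majority vote. The sketch below assumes each method's prediction is a string of equal length using H for helix, E for strand, and '-' for coil; it is not a description of how JPred itself combines predictions, and the example strings are invented.

```python
from collections import Counter


def consensus_secondary_structure(predictions):
    """Majority vote at each position; ties are reported as '-' (coil)."""
    lengths = {len(p) for p in predictions}
    if len(lengths) != 1:
        raise ValueError("need at least one prediction, all of equal length")
    consensus = []
    for column in zip(*predictions):
        counts = Counter(column).most_common()
        top_state, top_count = counts[0]
        tied = len(counts) > 1 and counts[1][1] == top_count
        consensus.append("-" if tied else top_state)
    return "".join(consensus)


if __name__ == "__main__":
    # Three made-up predictions for a ten-residue stretch.
    predictions = ["--EEEE--HH", "--EEE---HH", "-EEEEE--H-"]
    print(consensus_secondary_structure(predictions))
```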

10.7.4 Using Threading Methods to Find Potential Folds

We also sent the sequence to the 3D-PSSM and 123D+ threading servers to analyze its fitness for various protein folds. The top-scoring results from the 3D-PSSM threading server, with E-values in the 95% certainty range, included the proteins 1AIR (a pectate lyase), 1DBG (the chondroitinase that was identified as a homolog of our unknown by sequence-based searching), 1IDK, and 1PCL, all pectate lyases identified by CE as structural neighbors of 1DBG. These proteins were also found in the top results from 123D+.
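With several methods in play, a simple tally shows which candidate structures are supported by more than one line of evidence. The sketch below uses the hits named in this example; the function name and the way the evidence is grouped are our own, and counting every method equally is an assumption.

```python
from collections import Counter

# Candidate structures named by each method in this example.
EVIDENCE = {
    "FASTA": {"1DBG"},
    "CE neighbors of 1DBG": {"1AIR", "1IDK", "1PCL"},
    "3D-PSSM": {"1AIR", "1DBG", "1IDK", "1PCL"},
    "123D+": {"1AIR", "1DBG", "1IDK", "1PCL"},
}


def rank_by_support(evidence):
    """Return (pdb_id, number of supporting methods), best supported first."""
    tally = Counter()
    for ids in evidence.values():
        tally.update(set(ids))
    # Sort by descending support, then alphabetically for a stable order.
    return sorted(tally.items(), key=lambda item: (-item[1], item[0]))


if __name__ == "__main__":
    for pdb_id, votes in rank_by_support(EVIDENCE):
        print(pdb_id, votes)
```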

10.7.5 Using Profile Methods to Align Distantly Related Sequences

We now had evidence from multiple sources that suggested the structures 1AIR, 1DBG, and 1IDK would be reasonable candidates to use as templates to construct a model of the T0101 unknown sequence. However, the remaining challenge was to align the unknown sequence to the template structures. We had many different alignments to work with: the initial FASTA alignment of the unknown with 1DBG; the CE structural alignment of 1DBG and its structural neighbors 1AIR, 1IDK, and 1PCL; and the individual alignments of predicted secondary structure in the unknown to known secondary structure for each of the database hits from 3D-PSSM. Each alignment was different, of course, because they were generated by different methods. We chose to combine the information in the individual 3D-PSSM sequence-to-structure alignments of the unknown sequence with 1AIR and 1IDK into a single alignment file. We did this by aligning those two alignments to each other using Clustal's Profile Alignment mode. Finally, we wrote out the alignment to a single file in a format appropriate for Modeller and used this as input for our first approach.

10.7.6 Building a Homology Model

We created the following input for Modeller:

The input script, peclyase.top:

# Homology modelling by the MODELLER TOP routine 'model'.

INCLUDE # Include the predefined TOP routines

SET ALNFILE = 'peclyase.ali' # alignment filename

SET KNOWNS = '1air','1idk' # codes of the templates

SET SEQUENCE = 't0101' # code of the target

SET ATOM_FILES_DIRECTORY = './templates' # directories for input atom files

SET STARTING_MODEL= 1 # index of the first model

SET ENDING_MODEL = 3 # index of the last model

# (determines how many models to calculate)

CALL ROUTINE = 'model' # do homology modeling

We created a sequence alignment file, peclyase.ali, in PIR format, built as described in the example and modified to indicate to Modeller whether each sequence was a template or a target.
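If you generate alignments programmatically, a small helper can write entries in this style. The sketch below reflects our understanding of the Modeller flavor of PIR: a `>P1;code` header, a colon-separated description line of ten fields beginning with `structureX` for a template or `sequence` for a target, and a sequence terminated by `*`. Treat the field layout as an assumption and check it against the Modeller manual for your version. The function name and the toy sequences are hypothetical.

```python
def pir_entry(code, aligned_sequence, is_template, first="", last="", chain=""):
    """Format one alignment entry in (assumed) Modeller PIR style."""
    kind = "structureX" if is_template else "sequence"
    # Fields: type, code, first residue, first chain, last residue,
    # last chain, then four fields left empty here
    # (protein name, source, resolution, R-factor).
    fields = [kind, code, str(first), chain, str(last), chain, "", "", "", ""]
    text = aligned_sequence + "*"
    # Wrap the sequence at 75 characters per line.
    body = "\n".join(text[i:i + 75] for i in range(0, len(text), 75))
    return ">P1;{}\n{}\n{}\n".format(code, ":".join(fields), body)


if __name__ == "__main__":
    # Toy aligned sequences, not the real T0101 alignment.
    print(pir_entry("1air", "ACDKKEF", True, first=1, last=7, chain="A"))
    print(pir_entry("t0101", "ACD--EF", False))
```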

We also placed PDB files, containing structural information for the template chains of 1AIR and 1IDK, in a templates subdirectory of our working directory. The files were named 1air.atm and 1idk.atm, as Modeller requires, and we then ran Modeller to create structural models. The models looked similar to their templates, especially in the beta solenoid domain, and evaluated reasonably well by standard methods of structure verification, including 3D/1D profiles and geometric evaluation methods. However, just like the actual CASP 4 competitors, we await the publication of the actual structure of the T0101 target for validation of our structural model.

10.8 Summary

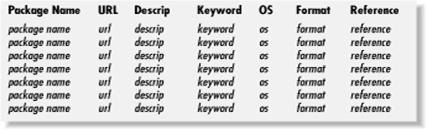

Solving protein structure is complicated at best, but as you've seen, there are a number of software tools to make it easier. Table 1 provides a summary of the most popular structure prediction tools and techniques available to you.

Table 1. Structure Prediction Tools and Techniques

| What you do | Why you do it | What you use to do it |
|---|---|---|
| Secondary structure prediction | As a starting point for classification and structural modeling | JPred, Predict-Protein |
| Threading | To check the fitness of a protein sequence to assume a known fold; to identify distantly related structural homologs | 3D-PSSM, PhD, 123D |
| Homology modeling | To build a model from a sequence, based on homologies to known structures | Modeller, SWISS-MODEL |
| Model verification | To check the fitness of a modeled structure for its protein sequence | VERIFY-3D, PROCHECK, WHAT IF |
| Ab-initio structure modeling | To predict a 3D structure from sequence in the absence of homology | ROSETTA, RAMP |

Chapter 11. Tools for Genomics and Proteomics

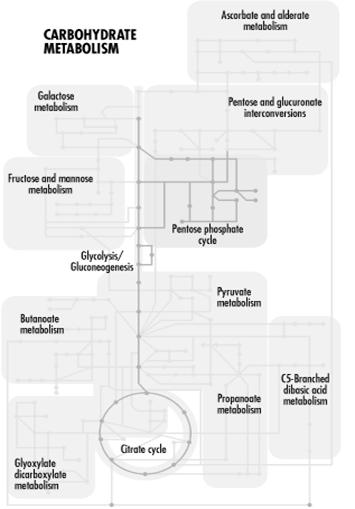

The methods we have discussed so far can be used to analyze a single sequence or structure and compare multiple sequences of single-gene length. These methods can help you understand the function of a particular gene or the mechanism of a particular protein. What you're more likely to be interested in, though, is how gene functions manifest in the observable characteristics of an organism: its phenotype. In this chapter we discuss some datatypes and tools that are beginning to be available for studying the integrated function of all the genes in a genome.

What sets genomic science apart from the traditional experimental biological sciences is the emphasis on automated data gathering and integration of large volumes of information. Experimental strategies for examining one gene or one protein are gradually being replaced by parallel strategies in which many genes are examined simultaneously. Bioinformatics is absolutely required to support these parallel strategies and make the resulting data useful to the biology community at large. While bioinformatics algorithms may be complicated, the ultimate goals of bioinformatics and genomics are straightforward. Information from multiple sources is being integrated to form a complete picture of genomic function and its expression as the phenotype of an organism, as well as to allow comparison between the genomes of different organisms. Figure 11-1 shows the sort of flowchart you might create when moving from genetic function to phenotypic expression.

Figure 11-1. A flowchart moving from genome to phenotype

From the molecular level to the cellular level and beyond, biologists have been collecting information about pieces of this picture for decades. As in the story of the blind men and the elephant, focusing on specific pieces of the biological picture has made it difficult to step back and see the functions of the genome as a whole. The process of automating and scaling up biochemical experimentation, and treating biochemical data as a public resource, is just beginning.

The Human Genome Project has not only made gigabytes of biological sequence information available; it has begun to change the entire landscape of biological research by its example. Protein structure determination has not yet been automated at the same level as sequence determination, but several pilot projects in structural genomics are underway, with the goal of creating a high-speed structure determination pipeline. The concept behind the DNA microarray experiment (thousands of microscopic experiments arrayed on a chip and running in parallel) doesn't translate trivially to other types of biochemical and molecular biology experiments. Nonetheless, the trend is toward efficiency, miniaturization, and automation in all fields of biological experimentation.

A long string of genomic sequence data is inherently about as useful as a reference book with no subject headings, page numbers, or index. One of the major tasks of bioinformatics is creating software systems for information management that can effectively annotate each part of a genome sequence with information about everything from its function, to the structure of its protein product (if it has one), to the rate at which the gene is expressed at different life stages of an organism. Currently, the only way to get the functional information that is needed to fully annotate and understand the workings of the genome is traditional biochemical experimentation, one gene or protein at a time. The growing genome databases are the motivation for further parallelization and automation of biological research.

Another task of genome information management systems is to allow users to make intuitive, visual comparisons between large data sets. Many new data integration projects, from visual comparison of multiple genomes to visual integration of expression data with genome map data, are under development.

Bioinformatics methods for genome-level analysis are obviously not as advanced in their development as sequence and structure analysis methods. Sequence and structure data have been publicly available since the 1970s; a significant number of whole genomes have become available only very recently. In this chapter, we focus on some data presentation and search strategies the research community has identified as particularly useful in genomic science.

11.1 From Sequencing Genes to Sequencing Genomes

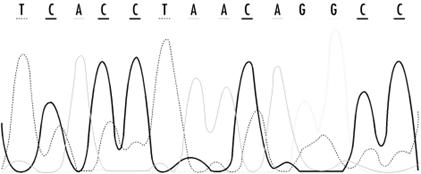

In Chapter 7, we discussed the chemistry that allows us to sequence DNA by somehow producing a ladder of sequence fragments, each differing in size by one base, which can then be separated by electrophoresis. One of the first computational challenges in the process of sequencing a gene (or a genome) is the interpretation of the pattern of fragments on a sequencing gel.

11.1.1 Analysis of Raw Sequence Data: Basecalling

The process of assigning a sequence to raw data from DNA sequencing is called basecalling. As an end user of genome sequence data, you don't have access to the raw data directly from the sequencer; you have to rely on a sequence that has been assigned to this data by some kind of processing software. While it's not likely you will actually need basecalling software, it is helpful to remember what the software does and that it can give rise to errors.

If this step doesn't produce a correct DNA sequence, any subsequent analysis of the sequence is affected. All sequences deposited in public databases are affected by basecalling errors due to ambiguities in sequencer output or to equipment malfunctions. EST and genome survey sequences have the highest error rates (1/10-1/100 errors per base), followed by finished sequences from small laboratories (1/100-1/1,000 per base) and finished sequences from large genome sequencing centers (1/10,000-1/100,000 per base).[1] Any sequence in GenBank is likely to have at least one error. Improving sequencing technology, and especially the signal detection and processing involved in DNA sequencing, is still the subject of active research.

[1] These values were provided by Dr. Sean Eddy of Washington University.
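A little arithmetic shows what these rates mean for a sequence you might actually download. The sketch below assumes errors occur independently at each base with the same probability, which is a simplification; the function names are illustrative.

```python
def expected_errors(length, error_rate):
    """Expected number of miscalled bases in a sequence of `length` bases."""
    return length * error_rate


def prob_at_least_one_error(length, error_rate):
    """Probability that a sequence contains one or more miscalled bases."""
    return 1.0 - (1.0 - error_rate) ** length


if __name__ == "__main__":
    # A 10,000-base finished sequence at one error per 10,000 bases.
    print(expected_errors(10_000, 1e-4))
    print(prob_at_least_one_error(10_000, 1e-4))
```

Under these assumptions, a 10,000-base finished sequence from a large sequencing center carries about one expected error and has roughly a 63% chance of containing at least one.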

There are two popular high-throughput protocols for DNA sequencing. As discussed earlier, DNA sequencing as it is done today relies on the ability to create a ladder of fragments of DNA at single-base resolution and separate the DNA fragments by gel electrophoresis. The popular Applied Biosystems sequencers label the fragmented DNA with four different fluorescent labels, one for each base-specific fragmentation, and run a mixture of the four samples in one gel lane. Another commonly used automated sequencer, the Pharmacia ALF instrument, runs each sample in a separate, closely spaced lane. In both cases, the gel is scanned with a laser, which excites each fluorescent band on the gel in sequence. In the four-color protocol, the fluorescence signal is elicited by a laser perpendicular to the gel, one lane at a time, and is then filtered using four colored filters to obtain differential signals from each fluorescent label. In the single-color protocol, a laser parallel to the gel excites all four lanes from a single sequencing experiment at once, and the fluorescent emissions are recorded by an array of detectors. Each of these protocols has its advantages in different types of experiments, so both are in common use. Obviously, the differences in hardware result in differences in the format of the data collected, and the use of proprietary file formats for data storage doesn't resolve this problem.

There are a variety of commercial and noncommercial tools for automated basecalling. Some of them are fully integrated with particular sequencing hardware and input datatypes. Most of them allow, and in fact require, curation by an expert user as sequence is determined.

The raw result of sequencing is a record of fluorescence intensities at each position in a sequencing gel. Figure 11-2 shows detector output from a modern sequencing experiment. The challenge for automated basecalling software is to resolve the sequence of apparent fluorescence peaks into four-letter DNA sequence code. While this seems straightforward, there are fairly hard limits on how much sequence can be read in a single experiment. Because separation of bands on a sequencing gel isn't perfect, the quality of the separation and the shape of the bands deteriorate over the length of the gel. Peaks broaden and intermingle, and at some point in the sequencing run (usually 400-500 bases), the peaks become impossible to resolve. Various properties of DNA result in nonuniform reactions with the sequencing gel, so that fragment mobility is slightly dependent on the identity of the last base in a fragment; overall signal intensities can depend on local sequence and on the reagents used in the experiment. Unreadable regions can occur when DNA fragments fold back upon themselves or when a sequencing primer reacts with more than one site in a DNA sequence, leading to sample heterogeneity. Because these are fairly well-understood, systematic errors, computer algorithms can be developed to compensate for them. The ultimate goal of basecalling software development is to improve the accuracy of each sequence read, as well as to extend the range of sequencing runs, by providing means to deconvolute the more ambiguous fluorescence peaks at the end of the run.

Figure 11-2. Detector output from a modern sequencing experiment

Most sequencing projects deal with the inherent errors in the sequencing process by simply sequencing each region of a genome multiple times and by sequencing both DNA strands (which results in high-quality reads of both ends of a sequence). If you read that a genome has been sequenced with 4X coverage or 10X coverage, that means each portion of the genome has been sequenced, on average, 4 or 10 times, and the results merged to produce the final sequence.
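The merging step can be pictured with a minimal sketch. It assumes the reads have already been aligned to one another and padded with `-` where a read doesn't cover a position; the function name `consensus` and the example reads are hypothetical. Real projects also weigh each call by its quality score, which this simple majority vote ignores.

```python
from collections import Counter

def consensus(aligned_reads):
    """Majority-vote consensus over reads that are already aligned.

    Each read is a string of equal length; '-' marks positions the
    read does not cover. Uncovered columns are reported as 'N'.
    Ties go to the base seen first in the column.
    """
    result = []
    for column in zip(*aligned_reads):
        calls = [base for base in column if base != "-"]
        if not calls:
            result.append("N")
            continue
        base, _count = Counter(calls).most_common(1)[0]
        result.append(base)
    return "".join(result)

# Three overlapping reads; the second read has an error at position 3.
reads = [
    "ACGTAC--",
    "ACGAACGT",
    "-CGTACGT",
]
print(consensus(reads))
```

With three reads covering the miscalled position, the two correct calls outvote the single error, which is why higher coverage yields a more reliable final sequence.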

Modern sequencing technologies replace gels with microscopic capillary systems, but the core concepts of the process are the same as in gel-based sequencing: fragmentation of the DNA and separation of individual fragments by electrophoresis.

At this point, the major genome databases don't provide raw sequence data to users, and for most applications, access to raw sequence data isn't really necessary. However, it is likely that, with the constant growth of computing power, this will change in the future, and that you may want to know how to reanalyze the raw sequence data underlying the sequences available in the public databases.

One noncommercial software package for basecalling is Phred, which is available from the University of Washington Genome Center. Phred runs on either Unix or Windows NT workstations. It uses Fourier analysis to resolve fluorescence traces to predict an evenly spaced set of peak locations, then uses dynamic programming to match the actual peak locations with the predicted results. It then annotates output from basecalling with the probability that the call is an error. Phred scores represent scaled negative log probability that a base call is in error; hence, the higher the Phred score, the lower the probability that an error has been made. These values can be helpful in determining whether a region of the genome may need to be resequenced. Phred can also feed data to a sequence-assembly program called Phrap, which then uses both the sequence information and quality scores to aid in sequence assembly.
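As a rough illustration of how these scores relate to error probabilities, the sketch below uses the conventional definition Q = -10 log10(P), where P is the probability that the base call is wrong. The function names and the threshold of 20 are illustrative choices, not part of Phred itself.

```python
import math

def phred_to_error_prob(q):
    """Probability that a base call with Phred score q is wrong."""
    return 10 ** (-q / 10)

def error_prob_to_phred(p):
    """Phred score corresponding to an error probability p (0 < p <= 1)."""
    return -10 * math.log10(p)

def low_quality_positions(scores, threshold=20):
    """Indices of base calls whose score falls below the threshold.

    The default threshold of 20 (a 1-in-100 error chance) is only an
    example cutoff for flagging regions that may need resequencing.
    """
    return [i for i, q in enumerate(scores) if q < threshold]

scores = [40, 35, 12, 8, 30]
for q in scores:
    print(q, phred_to_error_prob(q))
print(low_quality_positions(scores))
```

A score of 20 thus corresponds to a 1 in 100 chance of error, and a score of 30 to 1 in 1,000.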

11.1.2 Sequencing an Entire Genome

Genome sequencing isn't simply a scaled-up version of a gene-sequencing run. As noted earlier, the sequence length limit of a sequencing run is something like 500 base pairs. And the length of a genome can range from tens of thousands to billions of base pairs. So in order to sequence an entire genome, the genome has to be broken into fragments, and then the sequenced fragments need to be reassembled into a continuous sequence.

There are two popular strategies for sequencing genomes: the shotgun approach and the clone contig approach. Combinations of these strategies are often used to sequence larger genomes.

11.1.2.1 The shotgun approach

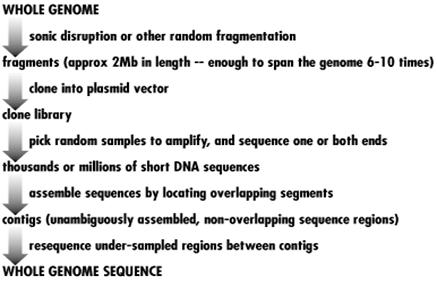

Shotgun DNA sequencing is the ultimate automated approach to DNA sequencing. In shotgun sequencing, a length of DNA, either a whole genome or a defined subset of the genome, is broken into random fragments. Fragments of manageable length (around 2,000 base pairs, or 2 kb) are cloned into plasmids (together, all the clones are called a clone library). Plasmids are simple biological vectors that can incorporate any random piece of DNA and reproduce it quickly to provide sufficient material for sequencing.

If a sufficiently large amount of genomic DNA is fragmented, the set of clones spans every base pair of the genome many times. The end of each cloned DNA fragment is then sequenced, or in some cases, both ends are sequenced, which puts extra constraints on the way the sequences can be assembled. Although only 400-500 bases at the end or ends of the fragment are sequenced, if enough clones are randomly selected from the library and sequenced, the amount of sequenced DNA still spans every base pair of the genome several times. In a shotgun sequencing experiment, enough DNA sequencing to span the entire genome 6-10 times is usually required.
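The arithmetic behind these coverage figures can be sketched as follows. Coverage is simply the total number of sequenced bases divided by the genome length. The estimate of the uncovered fraction assumes reads land uniformly at random (a Poisson approximation often associated with the Lander-Waterman model), which real clone libraries only approximate. The genome size used here is a hypothetical example.

```python
import math

def coverage(num_reads, read_length, genome_length):
    """Average number of times each base is sequenced."""
    return num_reads * read_length / genome_length

def reads_needed(target_coverage, read_length, genome_length):
    """Number of reads required to reach a target average coverage."""
    return math.ceil(target_coverage * genome_length / read_length)

def expected_fraction_uncovered(cov):
    """Expected fraction of bases never sequenced, assuming reads
    start at uniformly random positions (Poisson approximation)."""
    return math.exp(-cov)

genome_length = 1_800_000   # hypothetical 1.8-million-base genome
read_length = 500           # bases read per sequencing run

n = reads_needed(8, read_length, genome_length)
print(n, coverage(n, read_length, genome_length))
for c in (1, 6, 10):
    print(c, expected_fraction_uncovered(c))
```

Under this approximation, 1X coverage would leave roughly a third of the genome unsequenced, while 6X to 10X coverage leaves only a tiny fraction, which is consistent with the range quoted above.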

The final step in shotgun sequencing is sequence assembly, which we discuss in more detail in the next section. Assembly of all the short sequences from the shotgun sequencing experiment usually doesn't result in one single complete sequence. Rather, it results in multiple contigs: unambiguously assembled lengths of sequence that don't overlap each other. In the assembly process, contigs start and end because a region of the genome is encountered from which there isn't enough information (i.e., not enough clones representing that region) to continue assembling fragments. The final steps in sequencing a complete genome by shotgun sequencing are either to find clones that can fill in these missing regions, or, if there are no clones in the original library that can fill in the gaps, to use PCR or other techniques to amplify DNA sequence that spans the gaps.

Recently, Celera Genomics has shown that shotgun DNA sequencing (sequencing without a map) can work at the whole genome level even in organisms with very large genomes. The largely completed Drosophila genome sequence is evidence of their success.

11.1.2.2 The clone contig approach

The clone contig approach relies on shotgun sequencing as well, but on a smaller scale. Instead of starting by breaking down the entire genome into random fragments, the clone contig approach starts by breaking it down into restriction fragments, which can then be cloned into artificial chromosome vectors and amplified. Restriction enzymes are enzymes that cut DNA. These enzymes are sequence-specific; that is, each recognizes only one specific sequence of DNA, anywhere from 6 to 10 base pairs in length. By pure statistics, any base has a 1 in 4 chance of occurring at a given position in a random DNA sequence; a specific N-residue sequence has a 1 in 4^N chance of occurring. Enzymes that cut at a specific 6-10 base pair sequence of DNA end up cutting genomic DNA relatively rarely, but because DNA sequence isn't random, the restriction enzyme cuts result in a specific pattern of fragment lengths that is characteristic of a genome.
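To make the statistics concrete, the sketch below computes the chance of a given N-base site occurring at a position, and the resulting average spacing between sites, under the idealized assumption that all four bases are equally likely and independent. It also counts actual occurrences of a site in a sequence. The function names and the short test sequence are hypothetical; GAATTC is the recognition site of the enzyme EcoRI.

```python
def site_probability(n):
    """Chance that a specific n-base site starts at a given position,
    assuming equally likely, independent bases."""
    return 0.25 ** n

def expected_fragment_length(n):
    """Average spacing between occurrences of an n-base site
    under the same idealized assumption."""
    return 4 ** n

def count_sites(sequence, site):
    """Count occurrences of a recognition site, overlaps included."""
    count = 0
    start = sequence.find(site)
    while start != -1:
        count += 1
        start = sequence.find(site, start + 1)
    return count

for n in (6, 8, 10):
    print(n, site_probability(n), expected_fragment_length(n))

print(count_sites("AAGAATTCGGGAATTCTT", "GAATTC"))
```

A 6-base site is therefore expected about once every 4,096 bases in random sequence, and a 10-base site about once every million bases. Real genomes deviate from these figures because their sequence isn't random.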

Each of the cloned restriction fragments can be sequenced and assembled by a standard shotgun approach. But assembly of the restriction fragments into a whole genome is a different sort of problem. When the genome is digested into restriction fragments, it is only partially digested. The amount of restriction enzyme applied to the DNA sample is sufficient to cut at only approximately 50% of the available restriction sites in the sample. This means that some fragments will span a particular restriction site, while other fragments will be cut at that particular site and will span other restriction sites. So the clone library that is made up of these restriction fragments will contain overlapping fragments.

Chromosome walking is the process of starting with a specific clone, then finding the next clone that overlaps it, and then the next, etc. Methods such as probe hybridization or PCR are used to help identify the restriction fragment that has been inserted into each clone, and there are a number of experimental strategies that can make building up the genome map this way less time-consuming. A genome map is a record of the location of known features in the genome, which makes it relatively simple to associate particular clone sequences with a specific location in the genome by probe hybridization or other methods.

Genomes can be mapped at various levels of detail. Geneticists are used to thinking in terms of genetic linkage maps, which roughly assign the genes that give rise to particular traits to specific loci on the chromosome. However, genetic linkage maps don't provide enough detail to support the assembly of a full genome worth of sequence, nor do they point to the actual DNA sequence that corresponds to a particular trait. What genetic linkage maps do provide, though, is a set of ordered markers, sometimes very detailed depending on the organism, which can help researchers understand genome function (and provide a framework for assembling a full genome map).

Physical maps can be created in several ways: by digesting the DNA with restriction enzymes that cut at particular sites, by developing ordered clone libraries, and recently, by fluorescence microscopy of single, restriction enzyme-cleaved DNA molecules fixed to a glass substrate. The key to each method is that, using a combination of labeled probes and known genetic markers (in restriction mapping) or by identifying overlapping regions (in library creation), the fragments of a genome can be ordered correctly into a highly specific map (see Figure 11-2).

11.1.2.3 LIMS: Tracking all those minisequences

In carrying out a sequencing project, tracking the millions of unique DNA samples that may be isolated from the genome is one of the biggest information technology challenges. It's also probably one of the least scientifically exciting, because it involves keeping track of where in the genome each sample came from, which sample goes into each container, where each container goes in what may be a huge sample storage system, and finally, which data came from which sample. The systems that manage output from high-throughput sequencing are called Laboratory Information Management Systems (LIMS), and while we will not discuss them in the context of this book, LIMS development and maintenance makes up the lion's share of bioinformatics work in industrial settings. Other high-throughput technologies, such as microarrays and cheminformatics, also require complicated LIMS support.

11.2 Sequence Assembly

Basecalling is only the first step in putting together a complete genome sequence. Once the short fragments of sequence are obtained, they must be assembled into a complete sequence that may be many thousands of times their length. The next step is sequence assembly.

Sequence assembly isn't something you're likely to be doing on your own on a large scale, unless you happen to be working for a genome project. However, even small labs may need to solve sequence-assembly problems that require some computer assistance.

DNA sequencing using a shotgun approach provides thousands or millions of minisequences, each 400-500 bases in length. The fragments are random and can partially or completely overlap each other. Because of these overlaps, every fragment in the set can be identified by sequence identity as adjacent to some number of other fragments. Each of those fragments overlaps yet another set of fragments, and so on. It's standard procedure for the sequences of both ends of some fragments to be known, and the sequences of only one end of other fragments to be known. Figure 11-3 illustrates the shotgun sequencing approach.

Figure 11-3. The shotgun DNA sequencing approach

Ultimately, all the fragments need to be optimally tiled together into one continuous sequence. Identifying sequence overlaps between fragments puts some constraints on how the sequences can be assembled. For some fragments, the length of the sequence and the sequences of both its ends are known, which puts even more constraints on how the sequences can be assembled. The assembly algorithm attempts to satisfy all the constraints and produce an optimal ordering of all the fragments that make up the genome.
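The idea of tiling fragments by their overlaps can be illustrated with a toy greedy procedure: repeatedly merge the pair of fragments with the longest exact suffix-to-prefix overlap until no overlaps remain. This is only a sketch under strong simplifying assumptions (error-free reads, a single strand, no repeats, no use of quality scores or paired-end constraints) and is not how any particular assembly package works. All names and sequences are hypothetical.

```python
def overlap(a, b, min_len=3):
    """Length of the longest suffix of a that equals a prefix of b,
    or 0 if it is shorter than min_len (min_len must be at least 1)."""
    max_len = min(len(a), len(b))
    for k in range(max_len, min_len - 1, -1):
        if a[-k:] == b[:k]:
            return k
    return 0

def greedy_assemble(fragments, min_len=3):
    """Merge fragments greedily; returns the list of resulting contigs."""
    frags = list(fragments)
    while True:
        # Drop exact duplicates and fragments contained in another one.
        frags = list(dict.fromkeys(frags))
        frags = [f for f in frags
                 if not any(f != g and f in g for g in frags)]
        best_len, best_i, best_j = 0, None, None
        for i, a in enumerate(frags):
            for j, b in enumerate(frags):
                if i != j:
                    k = overlap(a, b, min_len)
                    if k > best_len:
                        best_len, best_i, best_j = k, i, j
        if best_len == 0:
            return frags  # no more overlaps: what remains are contigs
        merged = frags[best_i] + frags[best_j][best_len:]
        frags = [f for idx, f in enumerate(frags)
                 if idx not in (best_i, best_j)]
        frags.append(merged)

# Three fragments that tile a short sequence end to end.
print(greedy_assemble(["ATTGCCAG", "CCAGGTCA", "GTCAAG"]))
# A fragment with no overlap to the others ends up as its own contig.
print(greedy_assemble(["ATTGCCAG", "CCAGGTCA", "TTTTTAAAAA"]))
```

When a region has no overlapping fragments, the procedure stops with more than one contig, mirroring the gaps that arise in real assemblies. The pairwise comparison makes this approach quadratic in the number of fragments, which hints at why assembling a real genome demands so much computer time.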

Repetitive sequence features can complicate the assembly process. Some fragments will be uncloneable, and the sequencing process will fail in other cases, leaving gaps in the DNA sequence that must be resolved by resequencing. These gaps complicate automated assembly. If there isn't sufficient information at some point in the sequence for assembly to continue, the sequence contig that is being created comes to an end, and a new contig starts when there is sufficient sequence information for assembly to resume.

The Phrap program, available from the University of Washington Genome Center, does an effective job assembling sequence fragments, although a large amount of computer time is required. The accompanying program Consed is a Unix-based editor for Phrap sequence assembly output. TIGR Assembler is another genome assembly package that works well for small genomes, BACs, or ESTs.

11.3 Accessing Genome Information on the Web

Partial or complete DNA sequences from hundreds of genomes are available in GenBank. Putting those sequence records together into an intelligible representation of genome structure isn't so easy. There are several efforts underway to integrate DNA sequence with higher-level maps of genomes in a user-friendly format. So far, these efforts are focused on the human genome and genomes of important plant and animal model systems. They aren't collected into one uniform resource at present, although NCBI does aim to be comprehensive in its coverage of available genome data eventually.