Data Mining

Introduction to Data Mining

Organizations worldwide generate large amounts of data, most of it unorganized. This data must be processed before it yields meaningful and useful information. To organize large amounts of data, organizations typically use database management systems such as Oracle and SQL Server. These systems rely on SQL, a specialized query language, to retrieve data from a database. However, SQL alone is not always adequate when end users need specialized and sophisticated information from a large, unorganized data bank. Meeting that need requires alternative techniques for retrieving information from large and unorganized sources of data.

The following chapters introduce the basic concepts of data mining, a specialized data processing technique. They explore the various tasks, models, and techniques used in data mining to retrieve meaningful and useful information from large and unorganized data.

What is Data Mining?

Data mining is also known as Knowledge Discovery in Databases (KDD).

Data mining is the technique of extracting meaningful information from large and mostly unorganized data banks. It is the process of automatically extracting and generating predictive information from large amounts of data. It enables you to understand current market trends and to take proactive measures to gain maximum benefit from them.

The data mining process uses a variety of analysis tools to determine the relationships between data in the data banks and uses those relationships to make valid predictions. Data mining integrates techniques from multiple disciplines, such as statistics, machine learning, pattern recognition, neural networks, image processing, and database management systems.

The Data Mining Process

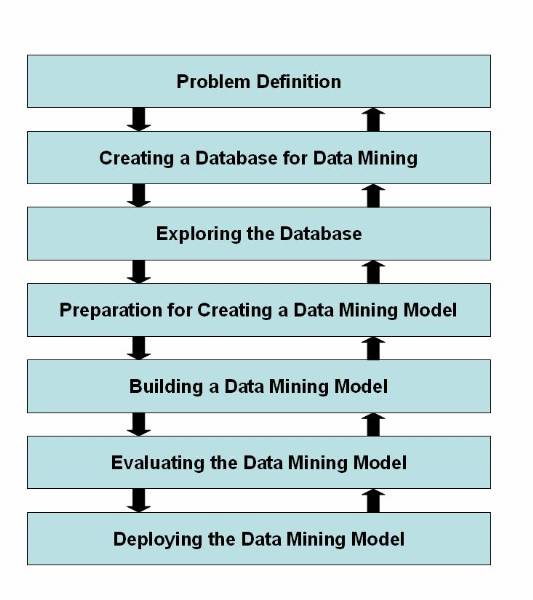

Data mining operations require a systematic approach. The process is generally specified as an ordered list of steps, but it is not linear: sometimes it is necessary to step back and rework a previously performed step.

The general phases in the data mining process to extract knowledge, as shown in Figure 1.2-1, are:

1. Problem definition

This phase is to understand the problem and the domain environment in which it occurs. The problem has to be clearly defined before proceeding further. The problem definition specifies the limits within which the problem needs to be solved, including the cost limitations for solving it.

2. Creating a database for data mining

This phase is to create a database where the data to be mined is stored for knowledge acquisition. Creating this database does not require a specialized database management system; you can even use a flat file or a spreadsheet to store the data. A data warehouse is also a kind of data storage where large amounts of data are stored for data mining. Creating the data mining database consumes about 50% to 90% of the overall effort in the data mining process.

3. Exploring the database

This phase is to select and examine important data sets in the data mining database in order to determine whether they are suitable for solving the problem. Exploring the database is time-consuming and requires a good user interface and a computer system with good processing speed.

4. Preparation for creating a data mining model

This phase is to select the variables that act as predictors. New variables are also derived from the existing ones, and the ranges of variables are defined in order to support imprecise information.

5. Building a data mining model

This phase is to create multiple data mining models and select the best of them. Building a data mining model is an iterative process. At times, the user needs to go back to the problem definition phase and change the problem definition itself. The data mining model that the user selects can be a decision tree, an artificial neural network, or an association rule model.

6. Evaluating the data mining model

This phase is to evaluate the accuracy of the selected data mining model. Accuracy is the parameter used to test how well the model works, because the information generated in the simulated environment differs from that in the external environment. The errors that occur during the evaluation phase need to be recorded, and the cost and time involved in rectifying them need to be estimated. External validation also needs to be performed to check whether the selected model performs correctly when given real-world values.

7. Deploying the data mining model

This phase is to deploy the built and evaluated data mining model in the external working environment. A monitoring system should track how the model works and generate reports about its performance. The information in these reports helps improve the performance of the selected data mining model.

Figure 1.2-1 Life Cycle of the Data Mining Process

Data Mining Tasks

Data mining makes use of various algorithms to perform a variety of tasks. These algorithms examine the sample data of a problem and determine a model that closely fits the problem. The models that the user determines to solve a problem are classified as predictive or descriptive.

A predictive model enables the user to predict the values of data by making use of known results from a different set of sample data. The data mining tasks that form part of the predictive model are:

Classification

Regression

Time series analysis

A descriptive model enables you to determine the patterns and relationships in sample data. The data mining tasks that form part of the descriptive model are:

Clustering

Summarization

Association rules

Sequence discovery

Classification

Classification is a data mining task that fits in the predictive model of data mining. Classification enables the user to assign data in a large data bank to a predefined set of classes. The classes are defined before the data in the data bank is studied or examined. Classification tasks not only enable the user to study and examine the existing sample data but also to predict its future behaviour.

Example: Detecting fraud in credit card transactions, analysing text documents, and estimating the probability that an employee will leave an organization are some of the tasks you can perform by applying classification. For example, in text document analysis, you can predefine four classes of document: computational linguistics, machine learning, natural language processing, and other documents.
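The idea of assigning new data to predefined classes can be sketched with a very small nearest-neighbour classifier. This is only an illustration, not a method prescribed by the text: the transaction features (amount, hour of day), the labelled examples, and the function name `classify` are all hypothetical, and the features are left unscaled for brevity.

```python
from math import dist

# Hypothetical labelled transactions: (amount, hour of day) -> class.
# The two classes are fixed before any new data is examined.
TRAINING = [
    ((12.0, 14), "legitimate"),
    ((35.5, 10), "legitimate"),
    ((20.0, 19), "legitimate"),
    ((950.0, 3), "fraud"),
    ((1200.0, 2), "fraud"),
]


def classify(sample, training=TRAINING):
    """Return the class of the labelled example closest to `sample`."""
    _, nearest_label = min(training, key=lambda item: dist(item[0], sample))
    return nearest_label


new_transaction = (1000.0, 4)
label = classify(new_transaction)
```

In practice the features would be scaled to comparable ranges first, since here the amount dominates the distance.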

Regression

Regression is a data mining task that fits in the predictive model of data mining. Regression enables the user to forecast future data values based on present and past data values. A regression task examines the values of data and develops a mathematical formula. The result produced by this formula enables the user to predict the future behaviour of existing data. The major drawback of regression is that you can apply it only to linear quantitative data, such as speed and weight, to predict their future behaviour.

Example: You can use regression to predict the future behaviour of users' saving patterns based on past and current values of savings. Regression also enables a growing organization to predict the need for recruiting new employees and purchasing the corresponding resources, based on the past and current growth rate of the organization.
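As a minimal sketch of how a regression task "develops a mathematical formula", the following fits a straight line by ordinary least squares and uses it to forecast the next value. The monthly savings figures and the names `fit_line` and `predict` are made up for illustration.

```python
def fit_line(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(x, slope, intercept):
    """Evaluate the fitted formula at a new x."""
    return slope * x + intercept


# Hypothetical savings balance at the end of months 1 to 5.
months = [1, 2, 3, 4, 5]
savings = [110.0, 120.0, 130.0, 140.0, 150.0]

slope, intercept = fit_line(months, savings)
forecast_month_6 = predict(6, slope, intercept)
```

Because the sample data lies exactly on a line, the fitted formula is y = 10x + 100 and the forecast for month 6 is 160.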

Time Series Analysis

Time series analysis is a data mining task that fits in the predictive model of data mining. Time series analysis enables the user to predict future values for a set of values that are time dependent. It makes use of current and past sample data to predict the future values. The values used for time series analysis are evenly spaced in time: hourly, daily, weekly, monthly, yearly, and so on. You can draw a time-series plot to visualize how the data changes over time. For example, you can use time series analysis to examine the trends in the stock market for various companies over a specific period and invest accordingly.
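One of the simplest ways to predict the next value of an evenly spaced series is a moving average over the most recent observations. The sketch below assumes this technique purely for illustration; the closing prices and the function name `moving_average_forecast` are hypothetical.

```python
def moving_average_forecast(series, window=3):
    """Forecast the next value as the mean of the last `window` values."""
    if window <= 0 or window > len(series):
        raise ValueError("window must be between 1 and len(series)")
    recent = series[-window:]
    return sum(recent) / window


# Hypothetical daily closing prices, one value per day.
daily_close = [10.0, 11.0, 12.0, 13.0, 14.0]
next_day = moving_average_forecast(daily_close, window=3)
```

A moving average smooths short-term noise but lags behind a steady trend, so it is a baseline rather than a full forecasting model.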

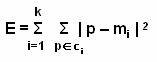

Clustering

Clustering is a data mining task that fits in the descriptive model of data mining. Clustering enables the user to create new groups and classes based on the study of patterns and relationships between values of data in a data bank. It is similar to classification but does not require the user to predefine the groups or classes. Clustering is also known as unsupervised learning or segmentation. Data items that closely resemble each other are grouped together; each such group is known as a cluster.

Example: You can use clustering to create clusters, or groups, of potential consumers for a new product based on their income and location. The information about these groups is then used to target only the consumers in those specific groups.
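Grouping similar items without predefined classes can be sketched with a small k-means routine. The text does not name a specific clustering algorithm, so k-means is an assumption here, as are the consumer data (income in thousands, distance from the store in kilometres) and the choice of initial centroids.

```python
from math import dist


def k_means(points, centroids, iterations=10):
    """Cluster `points` around the given initial centroids."""
    centroids = list(centroids)
    clusters = []
    for _ in range(iterations):
        # Assignment step: attach every point to its nearest centroid.
        clusters = [[] for _ in centroids]
        for point in points:
            nearest = min(
                range(len(centroids)), key=lambda i: dist(point, centroids[i])
            )
            clusters[nearest].append(point)
        # Update step: move each centroid to the mean of its cluster.
        updated = []
        for cluster, old in zip(clusters, centroids):
            if cluster:
                updated.append(tuple(sum(axis) / len(cluster) for axis in zip(*cluster)))
            else:
                updated.append(old)  # keep an empty cluster's centroid in place
        if updated == centroids:
            break  # assignments are stable
        centroids = updated
    return centroids, clusters


# Hypothetical consumers: (income in thousands, distance to store in km).
consumers = [(20, 1), (22, 2), (21, 1), (80, 10), (82, 11), (79, 9)]
centroids, clusters = k_means(consumers, [consumers[0], consumers[3]])
```

The number of clusters is fixed by the number of initial centroids; the groups themselves emerge from the data.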

Summarization

Summarization is a data mining task that fits in the descriptive model of data mining. Summarization enables the user to summarize a large chunk of data contained in a Web page or a document. Studying the summarized data gives you the gist of the entire Web page or document. Summarization is also known as characterization or generalization. A summarization task searches for specific characteristics and attributes of data in the given chunk and then summarizes them.

Example: The values of a television rating system (TRS) tell the user the extent of viewership of various television programs on a daily basis. A comparative study of TRS values from different countries enables the user to calculate and summarize the average level of viewership of television programs all over the world.

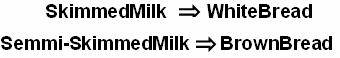

Association Rules

Association rule mining is a data mining task that fits in the descriptive model of data mining. Association rules enable you to establish associations and relationships between large numbers of unclassified data items based on certain attributes and characteristics. They define rules of associativity between data items and then use those rules to establish relationships.

Example: Association rules are widely used by organizations in the retail sales business. For example, studying the purchasing patterns of consumers enables retail organizations to consolidate their market share by better planning and by implementing the established association rules. You can also use association rules to describe the associativity of telecommunication failures or satellite link failures. The results can help prevent such failures by prompting appropriate measures.
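The strength of a rule such as "customers who buy bread also buy milk" is commonly measured by its support and confidence. These two measures are standard in the field but are not defined in the text above, so treat the sketch below as an assumption; the shopping baskets are invented.

```python
def support(itemset, transactions):
    """Fraction of transactions that contain every item in `itemset`."""
    itemset = set(itemset)
    hits = sum(1 for basket in transactions if itemset <= set(basket))
    return hits / len(transactions)


def confidence(antecedent, consequent, transactions):
    """Estimate P(consequent | antecedent) from the transactions.

    Assumes the antecedent occurs at least once; otherwise this divides by zero.
    """
    both = support(set(antecedent) | set(consequent), transactions)
    return both / support(antecedent, transactions)


# Hypothetical retail baskets.
baskets = [
    {"bread", "milk"},
    {"bread", "butter"},
    {"bread", "milk", "butter"},
    {"milk"},
]

rule_support = support({"bread", "milk"}, baskets)
rule_confidence = confidence({"bread"}, {"milk"}, baskets)
```

Here bread and milk appear together in half of the baskets, and two out of the three baskets with bread also contain milk.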

Sequence Discovery

Sequence discovery is a data mining task that fits in the descriptive model of data mining. Sequence discovery enables the user to determine the sequential patterns that might exist in a large and unorganised data bank. The user discovers the sequence in a data bank by using the time factor.

Example: The user associates data items by the time at which they were generated, and the like. Studying the sequence of events in crime detection and analysis enables security agencies and police organizations to solve a crime and prevent similar crimes in future. Similarly, the occurrence of certain strange and unknown diseases may be attributed to specific seasons. Such studies enable biologists to discover preventive drugs or propose preventive measures that can be taken against such diseases.
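A very reduced form of sequence discovery is to count how often one event occurs before another across many time-ordered sequences. The sketch below assumes each sequence is already sorted by time; the event names, the threshold `min_count`, and the function name are hypothetical.

```python
from collections import Counter
from itertools import combinations


def frequent_ordered_pairs(sequences, min_count=2):
    """Return (earlier, later) event pairs seen in at least `min_count` sequences."""
    counts = Counter()
    for sequence in sequences:
        seen = set()
        # combinations() keeps positional order, so `first` precedes `second`.
        for first, second in combinations(sequence, 2):
            if first != second:
                seen.add((first, second))
        counts.update(seen)  # count each pair once per sequence
    return {pair: n for pair, n in counts.items() if n >= min_count}


# Hypothetical time-ordered event logs, one list per session.
sessions = [
    ["login", "browse", "purchase"],
    ["login", "purchase"],
    ["browse", "login", "logout"],
]

patterns = frequent_ordered_pairs(sessions)
```

Only the pair ("login", "purchase") occurs in that order in two sessions, so it is the only pattern reported.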

Data Mining Techniques

Data mining techniques provide the user with a way to apply data mining tasks, such as classification and clustering, in order to predict solution sets for a problem. They also provide a level of confidence in the predicted solutions, in terms of both the consistency of prediction and the frequency of correct predictions. Some of the data mining techniques include:

Statistics

Machine Learning

Decision Trees

Hidden Markov Models

Artificial Neural Networks

Genetic Algorithms

Statistics

Statistics is the branch of mathematics that deals with the collection and analysis of numerical data by using various methods and techniques. You can use statistics in several stages of data mining:

Data cleansing stage

Data collection and sampling stage

Data analysis stage

Some of the statistical techniques that are used extensively in data mining are:

Point estimation: It is the process of calculating a single value from given sample data using statistical measures such as the mean, variance, and median.

Data summarization: It is the process of providing summary information about a large data bank without studying and analysing every data element in it. Creating a histogram is one of the most useful ways to summarize data, alongside statistical measures such as the mean, median, mode, and variance.

Bayesian techniques: It is the technique of classifying the data items available in a data bank. The simplest Bayesian technique is the use of Bayes' theorem, which has led to the development of various advanced data mining techniques.

Hypothesis testing: It is the technique of applying sample data to a hypothesis in order to prove or disprove the hypothesis.

Correlation: It is the technique of determining the relationship between two or more sets of data variables by measuring their values.

Regression: It is the technique of estimating the value of a continuous output data variable from some input data variables.
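Several of the techniques listed above reduce to short calculations. The following sketch shows point estimates from Python's standard `statistics` module, a hand-written Pearson correlation coefficient, and a direct application of Bayes' theorem. The sample values and probabilities are invented, and the helper names `pearson` and `bayes_posterior` are illustrative only.

```python
from math import sqrt
from statistics import mean, median, variance

# Point estimation: single values that describe a hypothetical sample.
sample = [2, 4, 4, 4, 5, 5, 7, 9]
sample_mean = mean(sample)
sample_median = median(sample)
sample_variance = variance(sample)  # sample variance (n - 1 in the denominator)


def pearson(xs, ys):
    """Pearson correlation coefficient between two equally long samples."""
    mean_x, mean_y = mean(xs), mean(ys)
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    return covariance / (spread_x * spread_y)


def bayes_posterior(prior, likelihood, evidence):
    """Bayes' theorem: P(H | E) = P(E | H) * P(H) / P(E)."""
    return likelihood * prior / evidence


# Hypothetical numbers: 1% of transactions are fraudulent, a test flags
# 90% of fraudulent ones and 5% of legitimate ones.
p_flag = 0.9 * 0.01 + 0.05 * 0.99
p_fraud_given_flag = bayes_posterior(prior=0.01, likelihood=0.9, evidence=p_flag)
```

With these assumed numbers, a flagged transaction is fraudulent with a probability of only about 15%, because fraud is rare to begin with.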

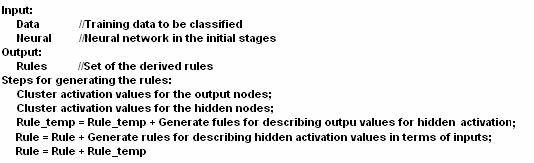

Machine Learning

Machine learning is the process of building a computer system that is capable of independently acquiring data and integrating that data to generate useful knowledge. The idea is to make a computer system behave like a human being who learns from experience and analyses observations, so that the system continuously improves itself and becomes more efficient and effective. In data mining, machine learning enables you to discover new and interesting structures and formats in a set of data that were previously unknown.

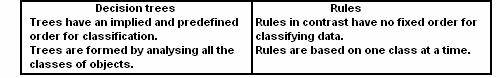

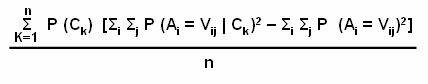

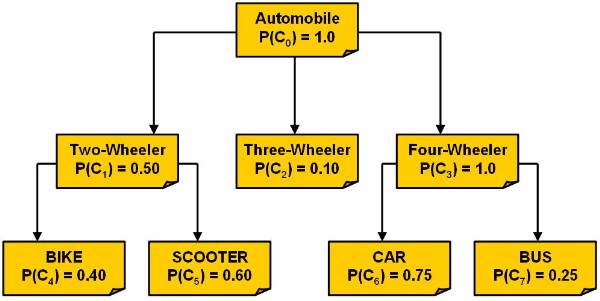

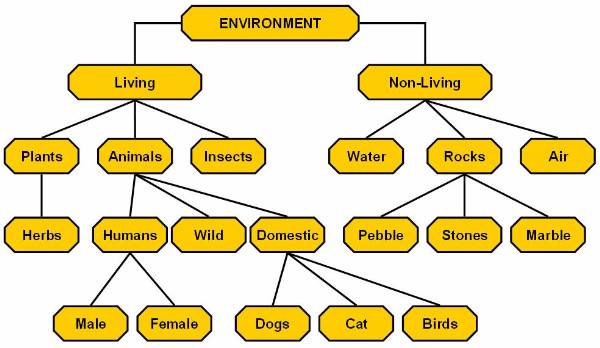

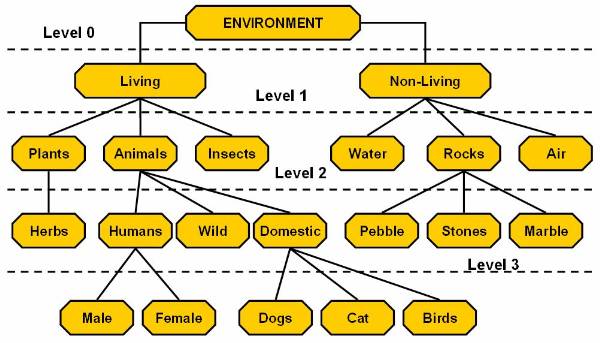

Decision Trees

A decision tree is a tree-shaped structure that represents a predictive model. Each branch of the tree represents a classification question, while the leaves represent the partitions of the classified information. You follow the branches that match your data elements until you reach the bottom-most leaf, which holds the answer to your question. Decision trees are generally suitable for tasks related to clustering and classification. They help generate rules that can be used to explain the decision being taken.
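Following the branches of a tree down to a leaf can be sketched with nested dictionaries. The tree below is written by hand rather than learned from data, and its questions and outcomes (a loan decision) are purely hypothetical.

```python
# Internal nodes ask a yes/no question about the record; leaves are plain
# strings that hold the final answer.
TREE = {
    "question": "income_high",
    "yes": {
        "question": "has_debt",
        "yes": "review",
        "no": "approve",
    },
    "no": "reject",
}


def decide(node, record):
    """Walk from the root to a leaf, following the branch each answer selects."""
    while isinstance(node, dict):
        branch = "yes" if record[node["question"]] else "no"
        node = node[branch]
    return node


applicant = {"income_high": True, "has_debt": False}
decision = decide(TREE, applicant)
```

The path taken through the tree doubles as an explanation of the decision: here, high income and no debt lead to "approve".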

Hidden Markov Models

A Hidden Markov model is a model that enables the user to predict the future actions to be taken in a time series. The model provides the probability of a future event, given the present and previous events. Hidden Markov models are constructed from data collected from a series of events, such that the series of present and past events enables the user to determine future events to a certain degree. In simple terms, Hidden Markov models are a kind of time series model where the outcome is directly related to time. One of the constraints of Hidden Markov models is that the future actions in the series of events are calculated entirely from the present and past events.
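To make "the probability of a future event, given the present and previous events" concrete, the sketch below runs the standard forward algorithm on a toy two-state model and then predicts the distribution of the next observation. All states, observation symbols, and probabilities are invented for illustration.

```python
# Hypothetical model: hidden weather states emit observable activities.
STATES = ("rainy", "sunny")
START = {"rainy": 0.6, "sunny": 0.4}
TRANS = {
    "rainy": {"rainy": 0.7, "sunny": 0.3},
    "sunny": {"rainy": 0.4, "sunny": 0.6},
}
EMIT = {
    "rainy": {"walk": 0.1, "shop": 0.4, "clean": 0.5},
    "sunny": {"walk": 0.6, "shop": 0.3, "clean": 0.1},
}


def forward(observations):
    """Return P(observations) and the state distribution after the last one."""
    alpha = {s: START[s] * EMIT[s][observations[0]] for s in STATES}
    for obs in observations[1:]:
        alpha = {
            s: EMIT[s][obs] * sum(alpha[p] * TRANS[p][s] for p in STATES)
            for s in STATES
        }
    total = sum(alpha.values())
    return total, {s: a / total for s, a in alpha.items()}


def predict_next_observation(observations):
    """Distribution of the next observation given everything seen so far."""
    _, belief = forward(observations)
    next_state = {s: sum(belief[p] * TRANS[p][s] for p in STATES) for s in STATES}
    symbols = EMIT[STATES[0]]
    return {o: sum(next_state[s] * EMIT[s][o] for s in STATES) for o in symbols}


likelihood, belief = forward(["walk", "shop"])
forecast = predict_next_observation(["walk", "shop"])
```

The hidden states are never observed directly; the model only maintains a probability distribution over them and uses it to weight the possible next observations.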

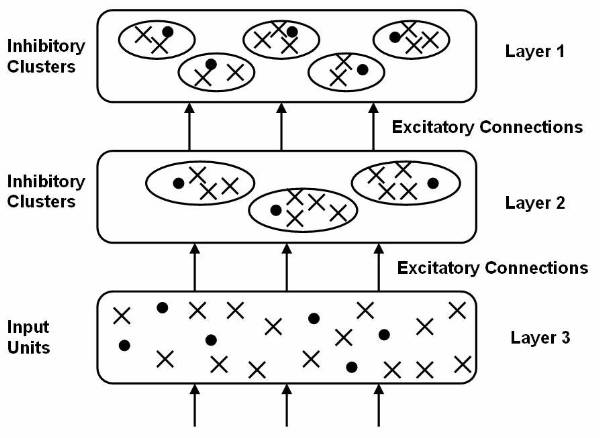

Artificial Neural Networks

Artificial neural networks are non-linear predictive models that learn through training and closely resemble the structure of biological neural networks. An artificial neural network is trained on a large set of historical data in order to predict the output of a particular future situation or problem. You can use artificial neural networks in a wide variety of applications, such as fraud detection in online credit card transactions, secret military operations, and operations that require biological simulations.
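The learning-by-training idea can be sketched with a single artificial neuron, the smallest building block of a neural network. A single neuron can only learn linearly separable patterns, so this is far simpler than a real network; the "historical data" (two warning flags per transaction, suspicious if either is set) and all names are hypothetical.

```python
from math import exp


def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + exp(-z))


def predict(inputs, weights, bias):
    """Output of one neuron: weighted sum of the inputs passed through sigmoid."""
    return sigmoid(sum(w * x for w, x in zip(weights, inputs)) + bias)


def train_neuron(samples, epochs=2000, rate=0.5):
    """Train one sigmoid neuron by stochastic gradient descent."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            # Gradient of the cross-entropy loss with respect to the weighted sum.
            error = predict(inputs, weights, bias) - target
            weights = [w - rate * error * x for w, x in zip(weights, inputs)]
            bias -= rate * error
    return weights, bias


# Hypothetical history: (flag_a, flag_b) -> 1 if the transaction was suspicious.
HISTORY = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]
weights, bias = train_neuron(HISTORY)
```

After training, `predict` returns a value below 0.5 for the unflagged case and above 0.5 for the flagged ones. Real networks stack many such neurons in layers, which is what gives them their non-linear behaviour.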

Genetic Algorithms

A genetic algorithm is a search algorithm that locates optimal binary strings by processing an initial random population of binary strings with operations such as artificial mutation, crossover, and selection. The process is analogous to natural selection. You can use genetic algorithms for tasks such as clustering and association rules. Given a set of sample data, genetic algorithms enable you to determine the best possible model, out of a set of models, to represent that data. When using the genetic algorithm technique, you first arbitrarily select a model to represent the sample data. A set of iterations is then performed on the selected model to generate new models. Out of the many generated models, you select the most feasible model to represent the sample data using the defined fitness function.
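The selection, crossover, and mutation operations on binary strings can be sketched as follows. The fitness function used here simply counts the 1 bits (the "OneMax" toy problem); it is a stand-in chosen for illustration, and the population size, mutation rate, and other parameters are arbitrary assumptions.

```python
import random


def fitness(bits):
    """Toy fitness function: the number of 1 bits in the string."""
    return sum(bits)


def evolve(length=12, pop_size=20, generations=40, mutation_rate=0.05, seed=0):
    """Evolve a population of binary strings and return the fittest one."""
    rng = random.Random(seed)
    population = [
        [rng.randint(0, 1) for _ in range(length)] for _ in range(pop_size)
    ]
    for _ in range(generations):
        # Selection: the fitter half survives unchanged into the next generation.
        population.sort(key=fitness, reverse=True)
        parents = population[: pop_size // 2]
        children = []
        while len(parents) + len(children) < pop_size:
            mother, father = rng.sample(parents, 2)
            cut = rng.randint(1, length - 1)  # single-point crossover
            child = mother[:cut] + father[cut:]
            # Mutation: flip each bit with a small probability.
            child = [b ^ 1 if rng.random() < mutation_rate else b for b in child]
            children.append(child)
        population = parents + children
    return max(population, key=fitness)


best = evolve()
```

Because the fitter half is carried over unchanged, the best fitness in the population never decreases from one generation to the next.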

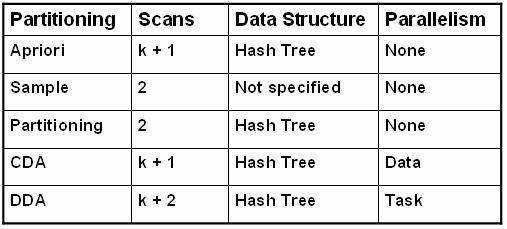

Process Models of Data Mining

A systematic approach to data mining has to be followed for meaningful retrieval of data from large data banks. Several process models have been proposed by various individuals and organizations that provide systematic steps for data mining. Four popular process models of data mining are:

5 A's process model

CRISP-DM process model

SEMMA process model

Six-Sigma process model

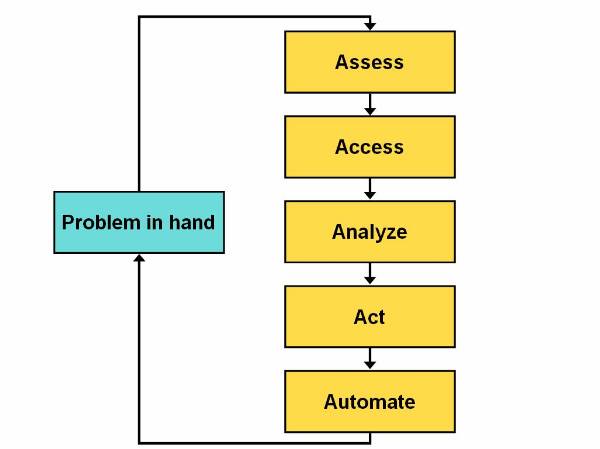

The 5 A's Process Model

The 5 A's process model (Figure 1.5-1) has been proposed and used by SPSS Inc.

The five A's are Assess, Access, Analyse, Act, and Automate. The 5 A's process model of data mining begins by assessing the problem in hand. The next logical step is to access, or accumulate, the data related to the problem. After that, the user analyses the accumulated data from different angles using various data mining techniques. The user then extracts meaningful information from the analysed data and acts on the results to solve the problem. Finally, the user tries to automate the data mining process by building software that applies the techniques used in the earlier steps.

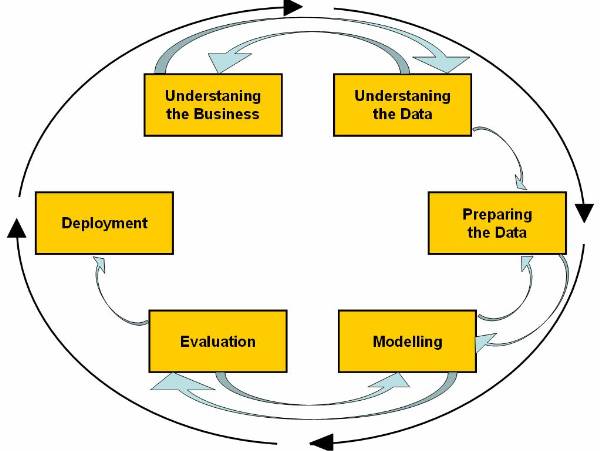

The CRISP-DM Process Model

The Cross-Industry Standard Process for Data Mining (CRISP-DM) process model has been proposed by a group of vendors: NCR Systems Engineering Copenhagen (Denmark), Daimler-Benz AG (Germany), SPSS/Integral Solutions Ltd. (United Kingdom), and OHRA Verzekeringen en Bank Group B.V. (The Netherlands).

According to CRISP-DM, a given data mining project has a life cycle consisting of six phases, as illustrated in Figure 1.5-2. The phase sequence is adaptive. That is, the next phase in the sequence often depends on the outcomes associated with the preceding phase. The most significant dependencies between phases are indicated by the arrows. The solution to a particular business or research problem leads to further questions of interest, which may then be attacked using the same general process as before. Lessons learned from past projects should always be brought to bear as input into new projects. Following is an outline of each phase. Although issues encountered during the evaluation phase can conceivably send the analyst back to any of the previous phases for amelioration, for simplicity only the most common loop, back to the modelling phase, is shown.

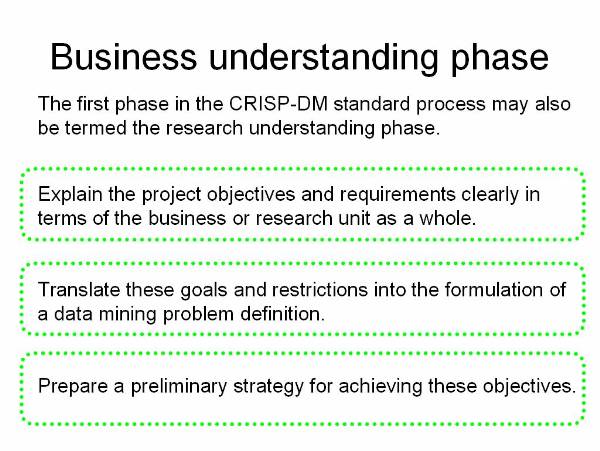

The life cycle of the CRISP-DM process model consists of six phases:

Understanding the business: This phase is to understand the objectives and requirements of the business problem and to generate a data mining definition for it.

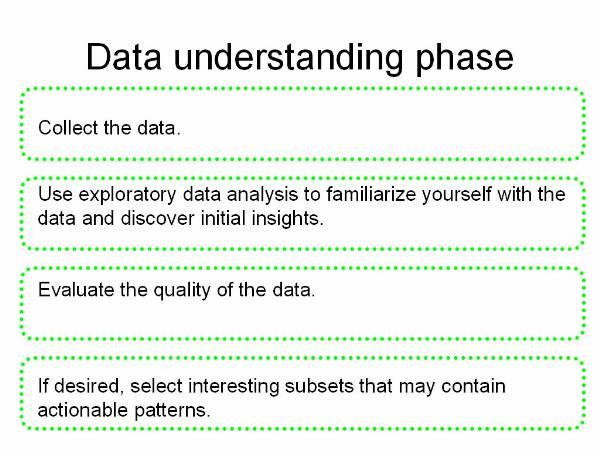

Understanding the data: This phase is to analyse the data collected in the first phase and study its characteristics and matching patterns in order to propose a hypothesis for solving the problem.

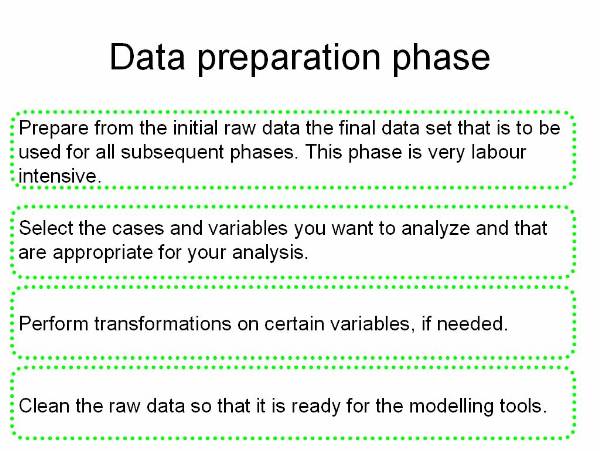

Preparing the data: This phase is to create the final datasets that are input to the various modelling tools. The raw data items are first transformed and cleaned to generate datasets in the form of tables, records, and fields.

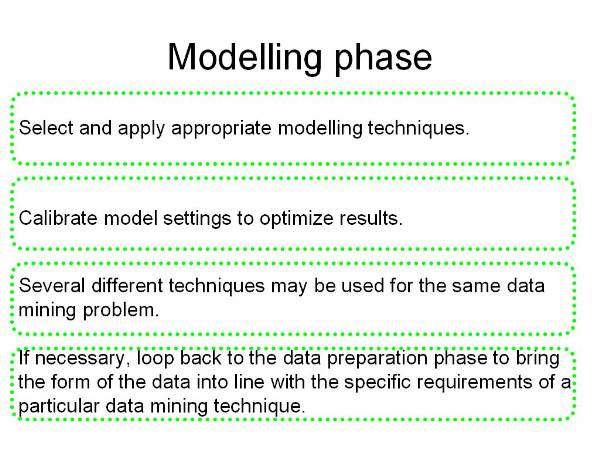

Modelling: This phase is to select and apply different modelling techniques of data mining. The user inputs the datasets prepared in the previous phase to these modelling techniques and analyses the generated output.

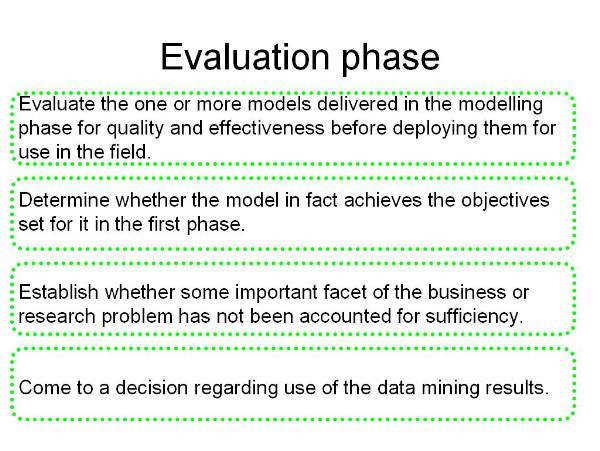

Evaluation: This phase is to evaluate the model or set of models that the user generated in the previous phase, for better analysis of the refined data.

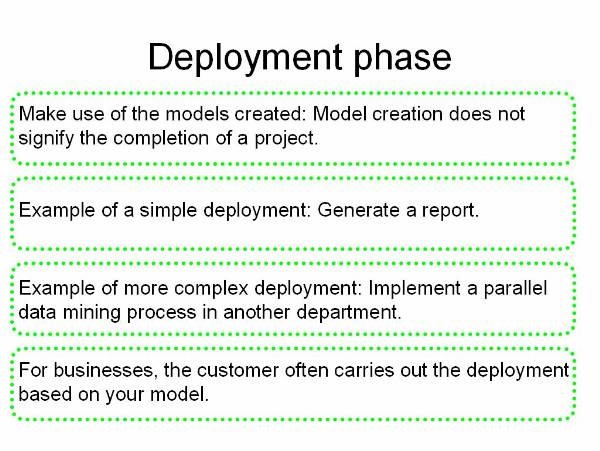

Deployment: This phase is to organize and implement the knowledge gained from the evaluation phase in such a way that it is easy for end users to comprehend.

The SEMMA Process Model

The SEMMA (Sample, Explore, Modify, Model, Assess) process model has been proposed and used by SAS Institute Inc.

The life cycle of the SEMMA process model consists of five phases:

Sample: This phase is to extract a portion of a large data bank such that you can retrieve meaningful information from the extracted portion. Selecting a portion of a large data bank significantly reduces the time required to process the data.

Explore: This phase is to explore and refine the sample portion of data using various statistical data mining techniques in order to search for unusual trends and irregularities in the sample data. For example, an online trading organization can use clustering to find a group of consumers that have similar ordering patterns.

Modify: This phase is to modify the explored data by creating, selecting, and transforming the predictive variables for the selection of a prospective data mining model. Depending on the problem in hand, the user may need to add new predictive variables or delete existing ones to narrow down the search for a useful solution to the problem.

Model: This phase is to select a data mining model that automatically searches for a combination of data that the user can use to predict the required result for the problem. Modelling techniques that can be used include neural networks and statistical models.

Assess: This phase is to assess the usefulness and reliability of the data generated by the model selected in the previous phase and to estimate the model's performance. The user can assess the selected model by applying it to the sample data collected in the Sample phase and checking the output.

The Six-Sigma Process Model

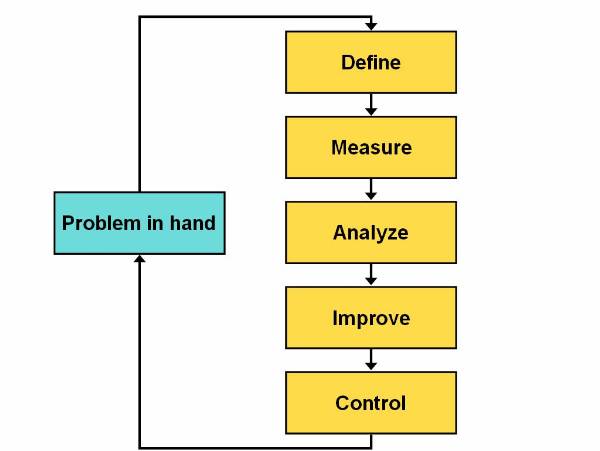

Six-Sigma is a data-driven process model that eliminates defects, waste, or quality control problems that generally occur in a production environment. The model was pioneered by Motorola and popularised by General Electric. Six-Sigma is very popular in various American industries due to its easy implementation, and it is likely to be implemented worldwide. The process model is based on statistical techniques, various types of data analysis, and systematic training of all the employees of an organization. It postulates a sequence of five stages called DMAIC (Define, Measure, Analyse, Improve, and Control).

The life cycle of the Six-Sigma process model (Figure 1.5-9) consists of five phases:

Define: This phase defines the goals of a project along with its limitations. This phase also identifies the issues that need to be addressed in order to achieve the defined goal.

Measure: This phase collects information about the current process by which the work is done and tries to identify the basics of the problem.

Analyse: This phase identifies the root causes of the problem in hand and confirms those root causes by using various data analysis tools.

Improve: This phase implements the solutions that address the root causes of the problem in hand. The root causes are identified in the previous phase.

Control: This phase monitors the outcome of all its previous phases and suggests improvement measures in each of its earlier phases.

Figure 1.5-1 Life Cycle of the 5 A's Process model

Figure 1.5-2 CRISP-DM: Cross-Industry Standard Process for Data Mining

Figure 1.5-3 The CRISP-DM Process Model: Understanding the Business

Figure 1.5-4 The CRISP-DM Process Model

Figure 1.5-5 The CRISP-DM Process Model

Figure 1.5-6 The CRISP-DM Process Model

Figure 1.5-7 The CRISP-DM Process Model

Figure 1.5-8 The CRISP-DM Process Model

Figure 1.5-9 Life Cycle of the Six-Sigma Process Model

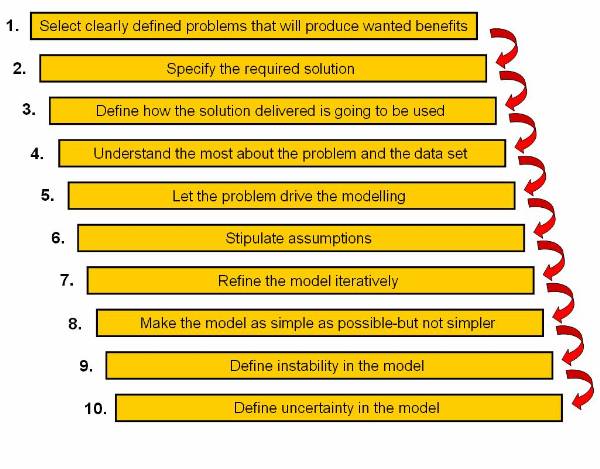

The 10 Commandments of Data Mining

The 10 Commandments, or 10 Golden Rules, of data mining (Figure 1.6-1) help guide the miner through the data preparation process:

1. Select clearly defined problems that will produce wanted benefits.

2. Specify the required solution.

3. Define how the solution delivered is going to be used.

4. Understand as much as possible about the problem and the data set.

5. Let the problem drive the modelling (i.e., tool selection, data preparation).

6. Stipulate assumptions.

7. Refine the model iteratively.

8. Make the model as simple as possible, but not simpler.

9. Define instability in the model (critical areas where change in output is drastically different for small changes in inputs).

10. Define uncertainty in the model (critical areas and ranges in the data set where the model produces low confidence predictions/insights).

Rules 4, 5, 6, 7, and 10 have particular relevance for data preparation. Data can inform only when summarized to a specific purpose, and the purpose of data mining is to wring information out of data. Understanding the nature of the problem and the data (rule 4) is crucial. Only by understanding the desired outcome can the data be prepared to best represent features of interest that bear on the topic (rule 5). It is also crucial to expose unspoken assumptions (rule 6) in case those assumptions are not warranted.

Modelling data is a process of incremental improvement (rule 7), or loosely: prepare the data, build a model, look at the results, re-prepare the data in light of what the model shows, and so on. Sometimes, re-preparation is used to eliminate problems, to explicate useful features, to reduce garbage, to simplify the model, or for other reasons. At the point that time and resources expire, the project is declared either a success or a failure, and it is on to the next one.

Uncertainty in the model (rule 10) may reflect directly on the data. It may indicate a problem in some part of the data domain: a lack of data, the presence of outliers, or some other problem. Thus, five of the 10 golden rules of data mining bear directly on data preparation.

Figure 1.6-1 10 Commandments

Data Warehouse Introduction

Companies use data warehouses to store data, such as product sales statistics and employee productivity. This collection of data informs various business decisions, such as market strategies and employee performance appraisals. In today's market, a company needs to make quick and accurate decisions, and a data warehouse is the easiest way to retrieve the necessary data swiftly. Organizations maintain large databases that are updated by daily transactions. These are operational databases: they are designed for daily transactions, not for maintaining historical records. Maintaining historical records is the purpose a data warehouse serves.

Objective of a Data Warehouse

A data warehouse provides the decision makers in a company with tools to systematically organize data and make decisions based on it. A company maintains a data warehouse separately from its operational database so that its decision-making body functions efficiently. The data warehouse gives the decision-making body a platform that provides historical data for analysis.

You can distinguish a data warehouse from an operational database on the basis of the following four concepts:

Subject-oriented: A data warehouse is designed to maintain records about subjects such as sales, products, suppliers, and customers. This is in contrast to an operational database, which is designed to support daily operations. A data warehouse provides a simple and concise collection of data, usually related to a particular subject, that is used for decision-making in a company.

Integrated: A data warehouse is an integrated resource formed out of many other resources, which can range from databases to flat files. Data from these resources is cleaned and integrated to make a consistent data warehouse.

Nonvolatile: A data warehouse has a physically separate identity and is built from the operational data. Because of this separation, the operations on a data warehouse are restricted to loading (adding) data and accessing data.

Time variant: A data warehouse is maintained by an organization for the improvement and progress of the company. Decisions are made by analysing past trends in the company's performance. For these decisions to be made correctly, each data warehouse structure carries an explicit or implicit time element.

Data Warehousing

Data warehousing is the term used for the process of constructing and using a data warehouse. A data warehouse is constructed keeping in mind that the decision-making body of a company wants an overview of the data and not its details. Construction of a data warehouse consists of three operations on data:

Data integration: Involves establishing connections between the collected data and bringing the chunks of data into a standard form.

Data cleaning: Involves removing the data chunks that are not useful in the decision-making process.

Data consolidation: Involves combining of the data chunks and uniting them to form a data warehouse.
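The three operations can be illustrated with a minimal Python sketch. The two source layouts, the field names, and the helper functions `integrate`, `clean`, and `consolidate` are all hypothetical and chosen only for illustration; a real warehouse load would be considerably more involved.

```python
from datetime import date

# Hypothetical extracts from two operational sources with different layouts.
pos_sales = [
    {"sku": "A1", "sold_on": "2007-07-03", "amount": "19.99"},
    {"sku": "B2", "sold_on": "2007-07-03", "amount": ""},  # amount is missing
]
web_orders = [
    {"product": "a1", "date": (2007, 7, 4), "total_cents": 1999},
]

def integrate(pos_rows, web_rows):
    """Data integration: bring both sources into one standard record layout."""
    unified = []
    for r in pos_rows:
        amount = float(r["amount"]) if r["amount"] else None
        unified.append({"sku": r["sku"].upper(),
                        "day": date.fromisoformat(r["sold_on"]),
                        "amount": amount})
    for r in web_rows:
        unified.append({"sku": r["product"].upper(),
                        "day": date(*r["date"]),
                        "amount": r["total_cents"] / 100})
    return unified

def clean(rows):
    """Data cleaning: drop records that are not useful (here, no amount)."""
    return [r for r in rows if r["amount"] is not None]

def consolidate(rows):
    """Data consolidation: combine the cleaned records into per-product totals."""
    totals = {}
    for r in rows:
        totals[r["sku"]] = round(totals.get(r["sku"], 0.0) + r["amount"], 2)
    return totals

warehouse = consolidate(clean(integrate(pos_sales, web_orders)))
print(warehouse)
```

The sketch keeps the three operations as separate functions so that each one can be inspected on its own; in practice the steps often overlap.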

When a company forms a data warehouse it expects to achieve:

Customer satisfaction: A company wants to maintain a record of the customer needs. The company also maintains a record of the buying trends of its products.

Product portfolios: A company wants to manage its product portfolios so that product repositioning is possible. The decision-making body refers to this data and formulates product strategies.

Profit sources: A company wants to make profits on its products. The decision-making body refers to this data and finds the places where it can make profits.

Customer relationship: A company wants to maintain a homogeneous environment for its customers. The decision-making body refers to this data and finds cost-effective methods for customer satisfaction.

Data Warehouse Dependents

The data warehouse dependents are those parts of a data warehouse that are not directly connected with the functioning of the warehouse. The dependents are data repositories developed for specialized purposes. The commonly used dependents are data marts and metadata. Data marts contain a summary of the data present in the data warehouse. Metadata is a storage place for information about the data stored in the data warehouse.

Data Marts

A data mart is a database that contains a subset of the data present in a data warehouse. Data marts are created to structure the data in a data warehouse according to issues such as hardware platforms and access control strategies. You can divide a data warehouse into data marts after the data warehouse has been created.
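As a rough illustration of a data mart as a subset of a warehouse, the following sketch uses Python's built-in `sqlite3` module. The table names `warehouse_sales` and `mart_north`, the columns, and the sample rows are assumptions made for this example only.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE warehouse_sales (region TEXT, product TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO warehouse_sales VALUES (?, ?, ?)",
    [("north", "shirt", 120.0), ("north", "cap", 30.0),
     ("south", "shirt", 80.0), ("south", "cap", 45.0)],
)

# A data mart for a hypothetical northern sales team: a subset of the warehouse.
conn.execute(
    "CREATE TABLE mart_north AS "
    "SELECT product, amount FROM warehouse_sales WHERE region = 'north'"
)

warehouse_rows = conn.execute("SELECT COUNT(*) FROM warehouse_sales").fetchone()[0]
mart_rows = conn.execute("SELECT COUNT(*) FROM mart_north").fetchone()[0]
north_total = conn.execute("SELECT SUM(amount) FROM mart_north").fetchone()[0]
print(warehouse_rows, mart_rows, north_total)
```

Queries against `mart_north` scan fewer rows than the same queries against `warehouse_sales`, which is the intuition behind the faster responses attributed to data marts.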

The division of a data warehouse into data marts results in faster responses to database queries, because a data mart contains less data than the data warehouse. The two ways to implement data marting are:

Basic model of data marting

Hybrid model of data marting

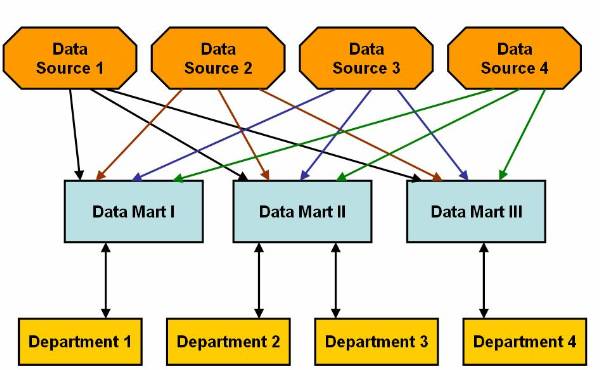

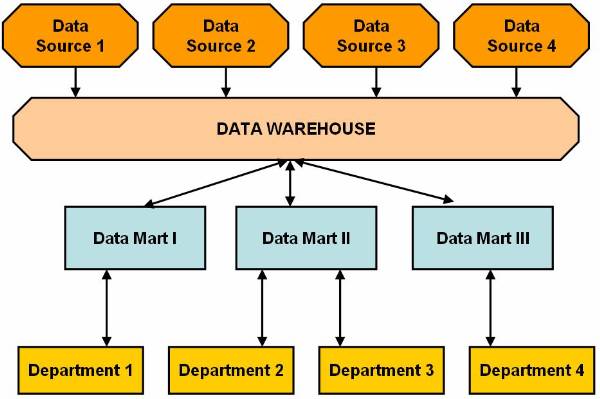

The failure of data warehousing to address the culture of knowledge workers and the maintenance of the warehouse prompted data marting. Data marting has two models, the basic model and the hybrid model. The basic model of data marting focuses on the needs of knowledge workers, whereas the hybrid model focuses on both business needs and the needs of knowledge workers. In the basic model of data marting you create only data marts, and data is extracted directly from the data sources into the data marts. It is possible to use a Multidimensional Database Management System (MDDBMS), a Relational Database Management System (RDBMS), or Big Wide Tables (BWT) to implement data marts. Figure 2.2-1 shows the basic model of data marting, in which data marts extract data from the data sources and end users access data from the data marts.

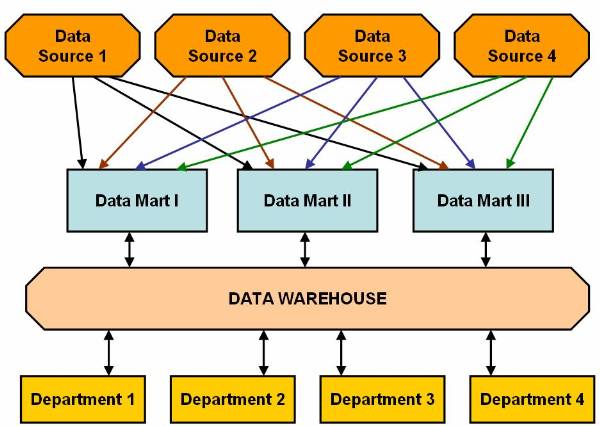

The hybrid model of data marting has an enterprise-wide data warehouse to store data. The data inside this data warehouse is present in both summarized and detailed form. You divide the data warehouse into data marts depending on the needs of knowledge workers. Data sources provide data to the data warehouse; in other words, the data warehouse extracts data from the data sources and stores it. You can also use an RDBMS, an MDDBMS, or BWT to implement data marts in the hybrid model of data marting, which retains most of the benefits and avoids most of the limitations of both the data warehouse and the data mart. Figure 2.2-2 shows the hybrid model of data marting, in which users access data stored in the data marts and the data warehouse updates the data in the data marts.

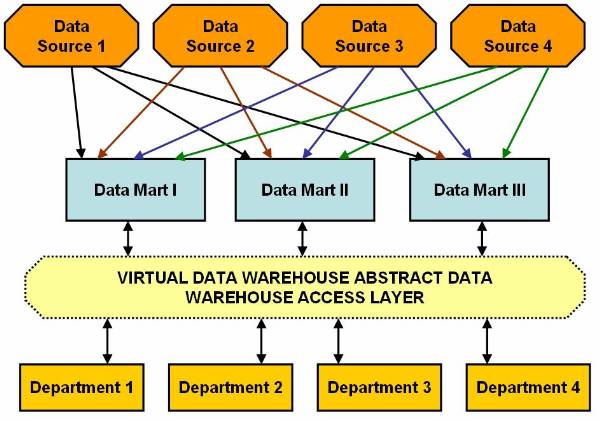

There are three approaches to implementing the hybrid model of data marting. The first approach is described above; the remaining two approaches are:

Extracting data through data marts

Virtual data warehouse

In the hybrid model of data marting, you can use data marts in place of the data warehouse to extract data from the data sources. The data marts then update the data warehouse with the data, and knowledge workers access the data from the data warehouse. Figure 2.2-3 shows this hybrid model of data marting, in which end users access data stored in the data warehouse and the data marts update the data in the data warehouse.

You can also use a virtual data warehouse in place of a real data warehouse. The virtual data warehouse is software that consists of a data warehouse access layer. The data warehouse access layer enables end users to access data in the data marts, and it also controls the data marts. Figure 2.2-4 shows how a hybrid model of data marting that uses a virtual data warehouse works in an organization.

Metadata

Metadata is information about the data in the data warehouse that describes its content, quality, condition, and other appropriate characteristics. In a data warehouse, metadata describes and defines the warehouse objects.

The metadata is stored in the metadata repository, which can be viewed as the storage place for the summary of data stored in the data warehouse. A metadata repository needs to have the following:

Structure for the data warehouse: Repository needs to contain description for the schemas of the warehouse, data hierarchies and data definitions.

Operational metadata: Repository needs to contain the history of the data that is migrated from a resource and the operations performed on that data. Data can be in one of three stages: active, archived, or purged. Active data is current data, archived data is copied data, and purged data is deleted data.

Summarization algorithms for metadata: Repository needs to contain measure definition algorithms and dimension definition algorithms. It should contain data on partitions, granularity and predefined queries and reports.

Mapping constructs metadata: Repository needs to contain the mapping constructs used to map the data from the operational data to the data warehouse. These include data extraction, data cleaning and data transformation rules.

System performance metadata: Repository needs to contain profiles that improve the data access and retrieval performance. It should also contain the data used for timing and scheduling of the update and deletion of data.
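A minimal sketch of how a repository entry might record some of these kinds of metadata is shown below. The class `TableMetadata`, its fields, and the sample table `sales_fact` are hypothetical; the sketch covers only structure, mapping, and operational metadata, not summarization or performance metadata.

```python
from dataclasses import dataclass, field
from enum import Enum

class DataState(Enum):
    ACTIVE = "active"      # current data
    ARCHIVED = "archived"  # copied data
    PURGED = "purged"      # deleted data

@dataclass
class TableMetadata:
    name: str       # structure: the warehouse object being described
    columns: dict   # structure: column name -> data type
    source: str     # mapping: where the data was extracted from
    transformations: list = field(default_factory=list)  # mapping: rules applied
    state: DataState = DataState.ACTIVE                   # operational metadata
    history: list = field(default_factory=list)           # operational metadata

    def record(self, operation, new_state=None):
        """Log an operation on the data and optionally change its stage."""
        self.history.append(operation)
        if new_state is not None:
            self.state = new_state

repository = {}
meta = TableMetadata("sales_fact", {"day": "DATE", "amount": "REAL"},
                     source="orders_db.orders")
meta.transformations.append("drop rows with missing amount")
meta.record("loaded from orders_db")
meta.record("copied to archive", DataState.ARCHIVED)
repository[meta.name] = meta
```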

You can therefore view metadata as a summary of the data in the warehouse. There are other methods for summarizing the data in a data warehouse. These can be listed as:

Current detailed data: Maintained on disks.

Older detailed data: Maintained on temporary storage devices.

Lightly summarized data: Mostly a temporary form of storage for backups of data, rarely maintained on physical memory.

Highly summarized data: Mostly a temporary form of storage for data transitions, also rarely maintained on physical memory.

Metadata is very important for a data warehouse because it acts as a quick reference for the data present in the warehouse. It also acts as the sole reference for reconstructing the warehouse in case of any reconfiguration of data.

Figure 2.2-1 Basic Model of Data Marting

Figure 2.2-2 Hybrid Model of Data Marting

Figure 2.2-3 Extracting Data Through Data Mart

Figure 2.2-4 Virtual Data Warehouse Model of Data Marting

Data Warehouse Architecture

A data warehouse can be built in many ways. The warehouse can be based on a bottom-up approach, a top-down approach, or a combination of both. The approach followed in designing a data warehouse determines the outcome. The data warehouse can have a layered architecture or a component-based architecture.

Process of Designing a Data warehouse

The process of warehouse design follows the approach chosen for the design. The bottom-up approach starts with carrying out experiments for organizing data and then forming prototypes for the warehouse. This approach is generally followed in the early stages of business modelling and also allows new technological developments to be tested before they are implemented in the organization. It enables the organization to progress through experiments, and to do so at lower expense.

The top-down approach starts with designing and planning the overall warehouse. This approach is generally followed when the technology being modelled is relatively old and tested. This design process is intended to solve well-defined business problems.

In the combined approach, an organization gains the benefits of a planned approach from the top-down approach and the benefits of rapid implementation from the bottom-up approach.

Constructing a data warehouse may consist of the following steps:

Planning

Requirement study

Problem analysis

Warehouse design

Data integration and testing

Development of data warehouse

These steps can be followed when designing a warehouse. The methods used for designing a warehouse are:

Waterfall method: Systematic and structured analysis is performed before moving to the next step of design.

Spiral method: Functional systems are generated rapidly and released within short spans of time.

Generally, a warehouse design process contains:

Choosing the business process to model.

Choosing the fundamental unit of data to be represented.

Choosing the dimensions for the data representation.

Choosing the measures for the fact table.
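The four design choices can be made concrete with a small star schema built in Python's `sqlite3` module. This is only a sketch under assumptions: the business process (sales), the grain (one row per product per month), the dimensions, the measures, and all table and column names are invented for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Dimensions: the perspectives along which the facts are analysed.
    CREATE TABLE dim_product (product_id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE dim_time    (time_id INTEGER PRIMARY KEY, year INTEGER, month INTEGER);
    -- Fact table: one row per product per month (the chosen unit of data),
    -- with units_sold and revenue as the measures.
    CREATE TABLE fact_sales (
        product_id INTEGER REFERENCES dim_product(product_id),
        time_id    INTEGER REFERENCES dim_time(time_id),
        units_sold INTEGER,
        revenue    REAL
    );
    INSERT INTO dim_product VALUES (1, 'shirt'), (2, 'cap');
    INSERT INTO dim_time VALUES (1, 2007, 6), (2, 2007, 7);
    INSERT INTO fact_sales VALUES (1, 1, 10, 200.0), (1, 2, 12, 240.0), (2, 2, 5, 50.0);
""")

# Analyse a measure along one dimension by joining the fact table to it.
revenue_by_product = dict(conn.execute("""
    SELECT p.name, SUM(f.revenue)
    FROM fact_sales f JOIN dim_product p USING (product_id)
    GROUP BY p.name
"""))
print(revenue_by_product)
```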

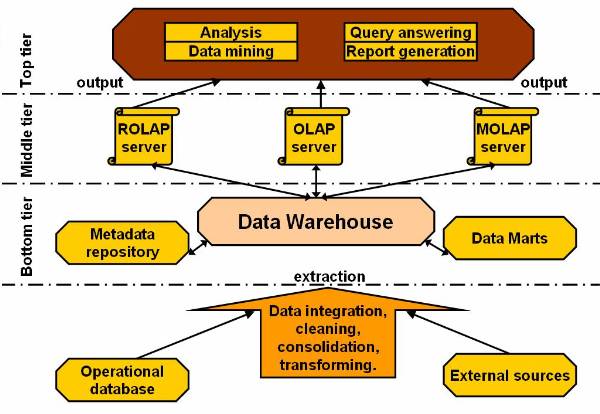

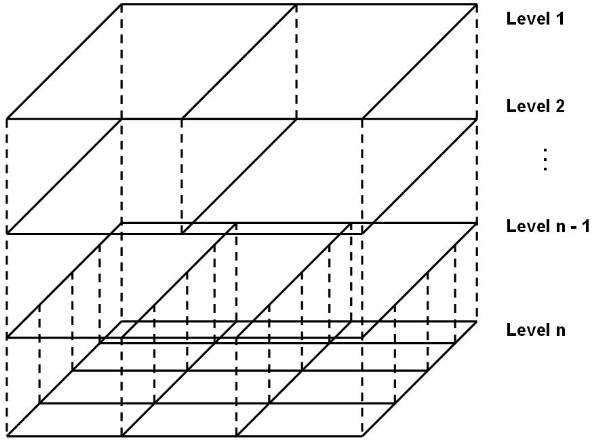

Layered Architecture of a Data Warehouse

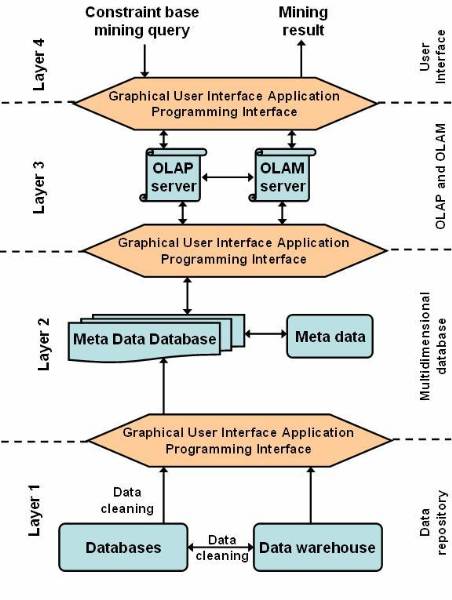

The architecture of a data warehouse can be based on many approaches. One of the most commonly used approaches for designing a warehouse is the layered architecture, which divides the warehouse into three interrelated layers. Figure 2.3-1 shows this three-tier architecture of a data warehouse and clearly distinguishes the bottom tier, the middle tier, and the top tier of the warehouse design.

The lowest portion of the architecture consists of operational databases and other external sources from which data is extracted. This data is cleaned, integrated, and consolidated into a consistent form. Once this is done, the data is passed to the next stage, the bottom tier of the architecture.

In this model of a data warehouse, the bottom tier is usually a relational database system. The bottom tier extracts data from the data sources and places it in the data warehouse. Application program interfaces known as gateways, such as Open Database Connectivity (ODBC), are used to retrieve data from data sources such as operational databases. The gateway facilitates the generation of Structured Query Language (SQL) code to be executed by the database management system. The commonly used gateways are ODBC, Object Linking and Embedding, Database (OLE DB), and Java Database Connectivity (JDBC).

The middle tier consists of OLAP servers. These servers can be implemented as Relational OLAP (ROLAP) servers or as Multidimensional OLAP (MOLAP) servers. The two approaches can be described as follows:

ROLAP model: An extended relational database system that maps operations on multidimensional data to standard relational operations.

MOLAP model: Directly implements multidimensional data and operations.
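The difference between the two models can be sketched in Python by answering the same question, total shirt revenue, in both styles. The data, the `sales` table, and the nested-list `cube` are hypothetical simplifications; real OLAP servers use far more elaborate storage and optimisation.

```python
import sqlite3

products = ["shirt", "cap"]
months = [6, 7]
facts = [("shirt", 6, 200.0), ("shirt", 7, 240.0), ("cap", 7, 50.0)]

# ROLAP style: the multidimensional question is mapped to a relational query.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (product TEXT, month INTEGER, revenue REAL)")
conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", facts)
rolap_total = conn.execute(
    "SELECT SUM(revenue) FROM sales WHERE product = ?", ("shirt",)
).fetchone()[0]

# MOLAP style: the data lives in an array indexed directly by dimension position.
cube = [[0.0 for _ in months] for _ in products]
for product, month, revenue in facts:
    cube[products.index(product)][months.index(month)] += revenue
molap_total = sum(cube[products.index("shirt")])

print(rolap_total, molap_total)
```

Both computations give the same total; what differs is whether the work is expressed as a relational operation or as direct array indexing.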

The top tier of this architecture contains querying tools, analysis tools, and data mining tools.

Figure 2.3-1 Architecture of a Data Warehouse

Data Warehouse Components Based Architecture

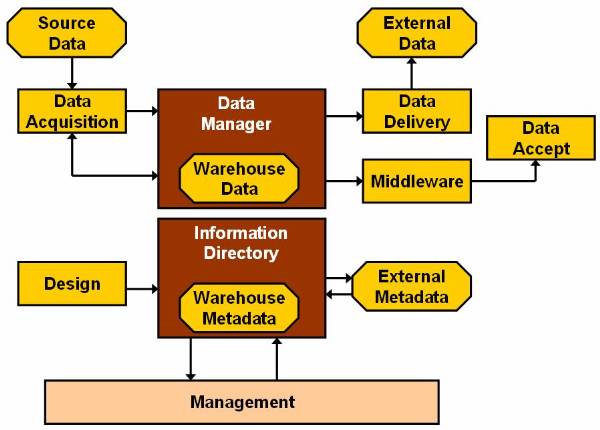

The component-based design of a data warehouse is a collection of various components, such as the design component and the data acquisition component. The design component of the data warehouse architecture enables you to design the database for the warehouse. The data acquisition component collects data from various sources and stores it in the database of the data warehouse (see Figure 2.4-1).

The Design Component

The design component of the data warehouse architecture enables you to design the database for the warehouse. Data warehouse designers and data warehouse administrators use the design component to design the data to be stored in the database of the data warehouse. The design of the data warehouse database depends on the size and complexity of the data. For extremely large and complex databases, designers use parallel database software and parallel hardware. The design may also involve de-normalized database designs, known as star schemas.

The Data Manager Component

The data manager is responsible for managing and providing access to data stored in the data warehouse. The various components of data warehouse use data manager component for creating, managing, and accessing data stored in data warehouse. The managers for data can be classified into two types:

Relational Database Management System (RDBMS)

Multidimensional Database Management System (MDBMS)

The RDBMS is usually used for managing data for a large enterprise data warehouse. The MDBMS is usually used for managing data for a small departmental data warehouse. The database management system for building a data warehouse is chosen according to the following criteria:

Number of users for the data warehouse

Complexity of the queries given by the end user

Size of the database to be managed

Processing of the complex queries given by the end user

The Management Component

The management component of data warehouse is responsible for keeping track of the functions performed by the user queries on data. These queries are monitored and stored to keep track of the transactions for further use. The various functions of the management component are:

Managing the data acquisition operations.

Managing backup copies of data being stored in the warehouse.

Recovering lost data from the backup and placing it back in the data warehouse.

Providing security and robustness to data stored in the data warehouse.

Authorizing access to data stored in the data warehouse and providing validation feature.

Managing operations performed on data by keeping a track of alterations done on data.

Data Acquisition Component

The data acquisition component collects data from various sources and stores it in the database of the data warehouse. It uses data acquisition applications for collecting data from various sources. These applications are based on rules, which are defined and designed by the developers of the data warehouse. The rules define the sources for collecting data for the warehouse. These rules define the clean up and enhancement operations to perform on data before storing the data in the data warehouse. The operations performed during data clean up are:

Restructuring some of the records and fields of data in the warehouse.

Removing irrelevant and redundant data from the data being entered into the warehouse.

Obtaining and adding missing data to complete the database and thereby make it consistent and informative.

Verifying integrity and consistency of the data to be stored.

The data enhancement operations performed on data include:

Decoding and translating the values in fields for data.

Summarizing data.

Calculating the derived values for data.
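The clean-up and enhancement operations listed above might look like the following in Python. The record layout, the `REGION_CODES` code table, and the `DEFAULT_PRICES` lookup are assumptions introduced only to make the sketch runnable.

```python
REGION_CODES = {"N": "north", "S": "south"}  # hypothetical code table
DEFAULT_PRICES = {2: 12.5}                   # hypothetical source for missing data

raw = [
    {"id": 1, "region": "N", "units": 10, "unit_price": 20.0},
    {"id": 1, "region": "N", "units": 10, "unit_price": 20.0},  # redundant copy
    {"id": 2, "region": "S", "units": 4, "unit_price": None},   # missing value
    {"id": 3, "region": "S", "units": -1, "unit_price": 12.5},  # inconsistent value
]

def clean_up(rows):
    seen, cleaned = set(), []
    for row in rows:
        if row["id"] in seen:            # remove redundant data
            continue
        seen.add(row["id"])
        row = dict(row)
        if row["unit_price"] is None:    # obtain and add missing data
            row["unit_price"] = DEFAULT_PRICES.get(row["id"])
        if row["unit_price"] is None or row["units"] < 0:  # verify integrity
            continue
        cleaned.append(row)
    return cleaned

def enhance(rows):
    enhanced = []
    for row in rows:
        row = dict(row)
        row["region"] = REGION_CODES[row["region"]]        # decode field values
        row["revenue"] = row["units"] * row["unit_price"]  # calculate derived value
        enhanced.append(row)
    return enhanced

rows = enhance(clean_up(raw))
summary = {}                                               # summarize data
for row in rows:
    summary[row["region"]] = summary.get(row["region"], 0.0) + row["revenue"]
print(summary)
```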

The data is stored in the databases of the data warehouse after the clean-up and enhancement operations have been performed. It is stored using a Data Manipulation Language (DML) such as SQL.

The data acquisition component creates a definition for each of the data acquisition operations performed. These definitions are stored in the information directory and are known as the metadata. Various products use this metadata to acquire data from the sources and transfer it to the warehouse database. Many products can be used for data acquisition, for example:

Code Generator: Creates 3GL/4GL capture programs according to the rules defined by the data acquisition component. A code generator is used for constructing a data warehouse that requires data from a large number of sources and extensive cleaning of the data.

Data Pump: Runs on a computer other than the data source or the target data warehouse computer; the computer running the data pump is also known as the pump server. The pump server performs data cleaning and data enhancement operations at regular intervals and sends the results to the data warehouse systems.

Data Replication Tool: Captures modifications in the source database on one computer and then applies the changes to copies of the source database on different computers. Data replication tools are used when data is obtained from a small number of sources and requires little data cleaning.

Data Reengineering Tool: Performs data clean up and data enhancement operations. The reengineering tools make relevant changes in the structure of the data and sometimes delete the data contents such as name and the address data.

Generalized Data Acquisition Tools: Move data stored on a source computer to the target computer. The different data acquisition tools have different abilities to perform data enhancement and data clean up activities on the data.

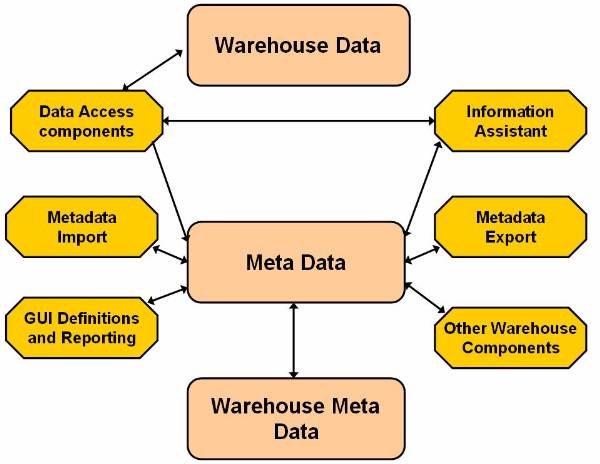

Information Directory Component

The information directory provides details of data stored in a data warehouse. The information directory component helps the technical and the business users to know about the details of data stored in data warehouse. The information directory enables the users to view the metadata, which is the data about the data stored in the data warehouse. The metadata is prepared while designing the data warehouse and performing the data acquisition operations.

Figure 2.4-2 shows the various components of the information directory. The main components of the information directory are the metadata manager, the metadata, and the information assistant.

The metadata manager manages the import and export of metadata. The metadata stored in the information directory includes:

Technical data:

Information regarding the various sources of data.

Information regarding the target where the data is to be stored.

Rules to perform clean up operations on the data.

Rules to perform data enhancement.

Information regarding mapping between various sources of data and data warehouse.

Business data

Information about predefined reports and queries.

Various business terms associated with the data.

The information assistant component of the information directory facilitates easy access to the technical and the business metadata. It enables users to understand the data from the business point of view, and to create new documents, search data, analyse reports, and run queries.

Data Delivery and Middleware Component

The middleware component provides access to the data stored in the data warehouse. The data delivery component is used for transferring data to other warehouses. The data delivery component also distributes data to end-user products such as local databases and spreadsheets. The data warehouse administrator determines which data to distribute to other data warehouses. The information assistant of the information directory component prepares the schedule for delivering data to the other warehouses.

The middleware connects the warehouse databases and the end-user data tools. There are two types of middleware:

Analytical Server: Assists in analysing multidimensional data.

Intelligent data warehousing middleware: Controls access to the database of the warehouse and provides the business view of the data stored in the warehouse.

Data Access Component

The data access and the middleware components provide access to the data stored in the data warehouse. The data access component consists of various tools that provide access to the data stored in a data warehouse. The various data access tools are:

Tools for analysing data, queries and reports.

Multidimensional data analysis tools for accessing MDBMS.

Multidimensional data analysis tools for accessing RDBMS.

Figure 2.4-1 The Data Warehousing Architecture

Figure 2.4-2 The Components of the Information Directory

OLAP and its Types

Large amounts of data are maintained in the form of relational databases. A relational database is usually used for transaction processing. To execute these transactions successfully, the database is accompanied by a highly efficient data processor for many small transactions. The new trend is to build tools that make a data warehouse out of the database.

The user needs to be able to clearly distinguish between a data warehouse and On-Line Analytical Processing (OLAP). The first and most prominent difference is that a data warehouse is based on relational technology, whereas OLAP enables corporate members to gain speedy insight into data through interactive access to a variety of views of information.

Collection of data in a warehouse is typically based on two questions, WHO and WHAT, which provide information about past events in the data. A collection of data in OLAP is based on questions such as WHAT IF and WHY. A data warehouse is a collection of data, whereas OLAP transforms the data in a data warehouse into strategic information.

Most of the OLAP applications have three features in common:

Multidimensional views of data.

Calculation-intensive capabilities.

Time intelligence.

The Multidimensional View

The multidimensional view represents the various ways of presenting data so that the corporation can make quick and efficient decisions. Usually the corporation needs to view data in more than one form to make decisions. These views may be distinct or may be interlinked, depending on the data represented in them. Data may consist of financial data, such as scenarios by organization and time. Data may also consist of sales data, such as product sales by geography and time. A multidimensional view of data provides data for analytical processing.

The Calculation-intensive Capabilities

The calculation-intensive capabilities represent the ability of OLAP to handle computations. OLAP is expected to do more than just aggregate data and give results. It is expected to provide more demanding computations, for example calculating percentages of totals and solving allocation problems, which require hierarchical details.

OLAP performance indicators use algebraic equations. These may consist of sales trend prediction algorithms, such as percentage growth. Analysing and comparing the sales of a company with those of its competitors involves complex calculations, and OLAP is required to handle and solve these computations. OLAP software provides tools for computational comparisons that make decision makers more self-sufficient.
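Two of the calculations mentioned above, percentage of total and percentage growth, can be sketched in a few lines of Python. The sales figures and the function names `share_of_total` and `percentage_growth` are hypothetical.

```python
# Hypothetical yearly sales per product.
sales = {"shirt": {2006: 400.0, 2007: 500.0},
         "cap":   {2006: 100.0, 2007: 125.0}}

def share_of_total(sales, year):
    """Percentage of the year's total sales contributed by each product."""
    total = sum(by_year[year] for by_year in sales.values())
    return {product: 100.0 * by_year[year] / total
            for product, by_year in sales.items()}

def percentage_growth(sales, product, start, end):
    """Growth of one product's sales from `start` to `end`, in percent."""
    before, after = sales[product][start], sales[product][end]
    return 100.0 * (after - before) / before

shares_2007 = share_of_total(sales, 2007)
shirt_growth = percentage_growth(sales, "shirt", 2006, 2007)
print(shares_2007, shirt_growth)
```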

The Time Intelligence

Any analytical application has time as an integral component. Since a business performance is always judged on time, it becomes an integral part for the decision-making. An efficient OLAP always views time as an integral part.

The time hierarchy can be used in different ways by OLAP. For example, a decision maker can ask to see the sales for the month of July or the sales for the first three months of the year 2007. The same decision maker can ask to see the sales for red t-shirts but is unlikely ever to ask for the sales of the first three shirts, because products, unlike months, have no natural order. The OLAP system should define period-based comparisons very clearly.
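The period-based queries in this example can be sketched as follows. The sales records and the helper `total_for_months` are assumptions made for illustration.

```python
from datetime import date

# Hypothetical dated sales records: (day of sale, amount).
sales = [
    (date(2007, 1, 15), 100.0),
    (date(2007, 2, 10), 150.0),
    (date(2007, 3, 5), 120.0),
    (date(2007, 7, 1), 90.0),
    (date(2007, 7, 20), 60.0),
]

def total_for_months(rows, year, months):
    """Sum the amounts of all sales that fall in the given months of a year."""
    return sum(amount for day, amount in rows
               if day.year == year and day.month in months)

july_2007 = total_for_months(sales, 2007, {7})
first_three_months_2007 = total_for_months(sales, 2007, {1, 2, 3})
print(july_2007, first_three_months_2007)
```

The query for the first three months is meaningful only because months are ordered within a year, which is what makes time different from dimensions such as product.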

The OLAP is implemented on warehouse servers and can be divided into three modelling techniques depending on the data organization:

Relational OLAP server

Multidimensional OLAP server

Hybrid OLAP server

Relational OLAP server (ROLAP)

The ROLAP server is placed between the relational back-end server and the client front-end tool. These servers use a relational database management system for storing the warehouse data. The ROLAP servers provide optimisation routines for each database management system in the back end. They also provide aggregation and navigation tools for the database. ROLAP has higher scalability than the Multidimensional OLAP server (MOLAP).

Multidimensional OLAP server (MOLAP)

The MOLAP servers use array-based multidimensional storage engines to provide a multidimensional view of data. They map multidimensional views directly onto data cube array structures. The advantage of using a data cube is that it allows fast indexing to summarized data.

Hybrid OLAP server (HOLAP)

The HOLAP servers combine the concepts of both MOLAP and ROLAP. This approach has the benefit of the greater scalability of ROLAP and the faster computation of MOLAP.

Relating Data Warehousing and Data Mining

A data warehouse is a place where data is stored for convenient mining. Data mining is the process of extracting information from various databases and re-organizing it for other purposes. On-line analytical mining is a technique for connecting data warehousing and data mining.

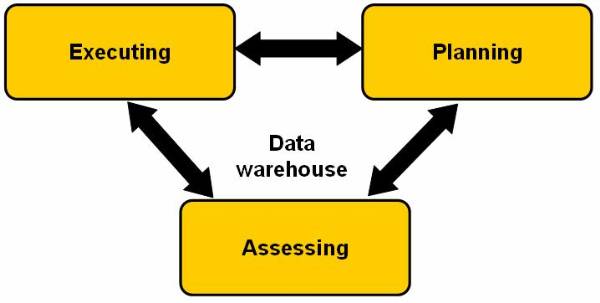

Data Warehouse Usage

Data warehouses and data marts are used in many applications. Warehouses and data marts contain data in a refined form: integrated, processed, and stored under one roof. The data maintained in a data warehouse forms an integral part of the closed-loop planning structure. Figure 2.6-1 shows the stages in the decision-making process of the organization. This process is a closed loop and is completely dependent on the data warehouse.

The common use of data warehouse is in the following fields:

Banking services

Consumer products

Demand based production

Controlled manufacturing

Financial services

Retailer services

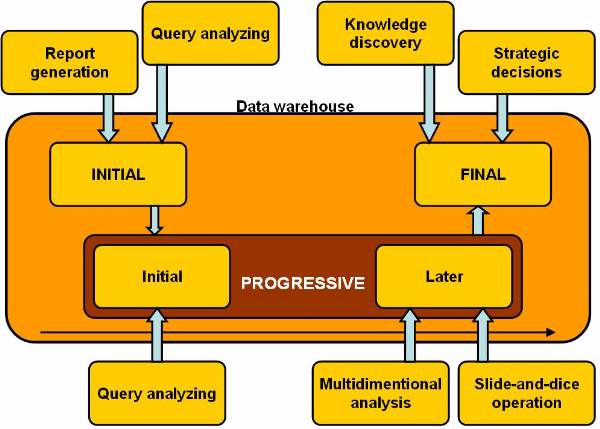

A data warehouse has to be fed with a large amount of data so that it can provide information to the decision-making body of the organization. This becomes time dependent because the history of events is an important factor in decision-making. For example, if a decision is to be taken on changing the price of a product, the decision-making body needs to see the trends of past years to judge the relative change in sales. The decision-making body comes to an effective conclusion by making use of the data warehouse. Figure 2.6-2 shows that a data warehouse has three stages in its evolution: the initial, progressive, and final stages. In the initial stage of evolution, a data warehouse is concerned with report generation and query analysis. The tools used in the initial stage are database access and retrieval tools.

After the initial stage, the data warehouse moves to the progressive stage, which is divided into an initial part and a later part. In the initial part of the progressive stage, the data warehouse is used for analysing data that has been collected and summarized over the past period. This analysis is used for report generation and the formulation of charts; the tools used are database-reporting tools.

In the later part of the progressive stage, the data warehouse is used for performing multidimensional analysis and sophisticated slice and dice operations using database-analysis tools.

In the final stage, the data warehouse is employed for knowledge discovery and strategic decision-making. The decision-making body usually makes use of data mining techniques and data mining tools.

The data warehouse will be of no use if decision-makers are not able to use it or are not able to understand the results produced by querying it. For this reason, there are three kinds of data warehouse applications:

Information processing: Supports querying the data warehouse and provides reporting using cross tabs, basic statistical analysis, charts, graphs, or tables.

Analytical processing: Operates on data in both summarized and detailed form and supports basic OLAP operations such as slice and dice, drill-down, roll-up, and pivoting.

Data mining: Provides mining results using visualization tools and supports knowledge discovery by finding hidden patterns and associations, constructing analytical models, and performing classification and prediction.
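The basic OLAP operations named under analytical processing can be approximated on a small in-memory cube. This is a simplified sketch: the cube contents, the dimension names in `DIMS`, and the helpers `aggregate` and `dice` are hypothetical, and pivoting is shown only as regrouping by a different pair of dimensions.

```python
from collections import defaultdict

# Hypothetical cube cells: (year, quarter, region, product) -> revenue.
DIMS = ("year", "quarter", "region", "product")
cells = {
    (2007, "Q1", "north", "shirt"): 100.0,
    (2007, "Q1", "south", "shirt"): 80.0,
    (2007, "Q2", "north", "shirt"): 120.0,
    (2007, "Q2", "north", "cap"): 30.0,
    (2007, "Q2", "south", "cap"): 45.0,
}

def aggregate(cells, keep):
    """Sum revenue over every dimension that is not listed in `keep`."""
    idx = [DIMS.index(d) for d in keep]
    out = defaultdict(float)
    for key, value in cells.items():
        out[tuple(key[i] for i in idx)] += value
    return dict(out)

def dice(cells, **allowed):
    """Keep only the cells whose dimension values fall in the allowed sets."""
    return {k: v for k, v in cells.items()
            if all(k[DIMS.index(d)] in vals for d, vals in allowed.items())}

slice_q2 = dice(cells, quarter={"Q2"})                  # slice: fix one dimension
diced = dice(cells, quarter={"Q2"}, region={"north"})   # dice: restrict several
by_year = aggregate(cells, ["year"])                    # roll-up to year level
by_quarter = aggregate(cells, ["year", "quarter"])      # drill-down to quarters
pivoted = aggregate(cells, ["product", "region"])       # pivot: regroup the axes
print(by_year, by_quarter)
```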

Data Warehouse to Data Mining

OLAP and data mining serve different purposes, with data mining going a step beyond OLAP. OLAP tools are data summarization and aggregation tools that help with data analysis; data mining, on the other hand, allows automated discovery of implicit patterns and knowledge in large amounts of data. The goal of OLAP tools is to simplify and support interactive data analysis, whereas the goal of data mining tools is to automate as many processes as possible.

Data mining can be viewed as covering both data description and data modelling. OLAP results are based directly on summaries of the data present in the data warehouse, which is useful for summary-based comparison. Data mining can provide this as well, but doing so is an inefficient or incomplete use of its capabilities. Data mining is capable of processing data at much finer levels of granularity than the summarized data stored in a data warehouse, and it can analyse data in transactional, spatial, textual, and multimedia form.

On-Line Analytical Mining (OLAM)

There is a substantial amount of research carried out in the field of data mining in accordance with the platform to be used. The various platforms with which data mining has been tested are:

Transaction databases

Time-series databases

Relational databases

Spatial databases

Flat files

Data warehouses

There are many more platforms with which data mining has been successfully tested. One of the most important developments is On-Line Analytical Mining (OLAM), also known as OLAP mining, which integrates OLAP with data mining. It facilitates data mining and mining knowledge in multidimensional databases, and is important for the following four reasons:

High quality of data in the data warehouse

Information processing structure surrounding data warehouse

OLAP based exploratory data analysis

On-line selection of data mining functions.

An OLAM server performs operations similar to those of an OLAP server. Figure 2.6-3 shows OLAM and OLAP servers working together.

Figure 2.6-1 Closed

Figure 2.6-2 Data Warehouse Development Stages

Figure 2.6-3 OLAM and OLAP Servers Together

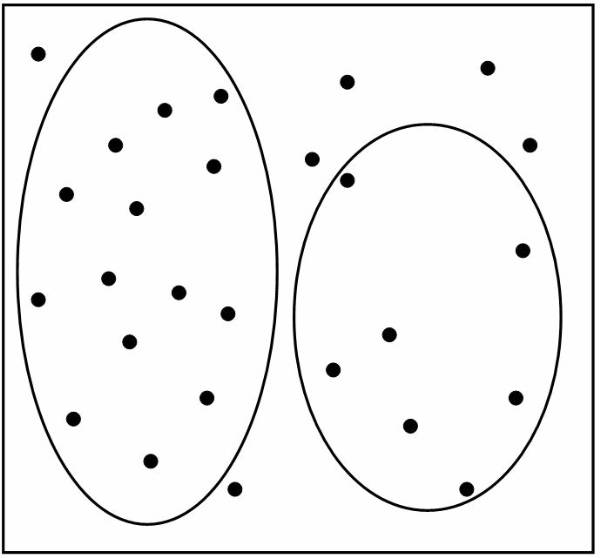

Introduction to Classification

Classification is one of the simplest and most widely used techniques of data mining. In classification, data is divided into segments that form distinct, non-overlapping groups. To divide data into groups, the user must have certain information about the data. This knowledge about the data is essential for performing any type of classification.

Classification problems aim to identify the characteristics that indicate the group to which each case belongs. This pattern can be used both to understand the existing data and to predict how new instances will behave. Data mining creates classification models by examining already classified data (cases) and inductively finding a predictive pattern. Sometimes an expert classifies a sample of the database, and this classification is then used to create the model which will be applied to the entire database.

In the preceding definition, classification is viewed as a mapping from the database to the set of classes. The classification process is divided into two phases:

Developing a model by evaluating training data.

Classifying tuples from target database.

The basic methods that can be used for classification are:

Specifying boundaries: Provides a method for classification by dividing input database into categories. Each of these categories is associated with a class.

Probability distribution: Uses the probability distribution of each class. For a class Cj, P(ti | Cj) is the probability density of the class evaluated at one point ti. In this definition, each tuple is considered to have a single value rather than multiple values. If the probability of occurrence of the class, P(Cj), is known, then the probability that ti is in class Cj is estimated by the product P(Cj) P(ti | Cj).

Using posterior probability: Determines, for a data value ti, the probability that ti is in class Cj. This posterior probability, written P(Cj | ti), is computed for each class, and ti is assigned to the class with the highest probability.
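The probability-based methods above can be illustrated with a minimal sketch. It assumes, purely for illustration, that each class has a known prior P(Cj) and that P(ti | Cj) follows a normal distribution; the class names, priors, means, and standard deviations are hypothetical and do not come from the text.

```python
from math import exp, pi, sqrt


def gaussian_pdf(x, mean, std):
    """Normal density, used here as an assumed form of P(ti | Cj)."""
    return exp(-((x - mean) ** 2) / (2 * std ** 2)) / (std * sqrt(2 * pi))


def classify(x, classes):
    """Return (best_class, posteriors) for a single numeric value x.

    classes maps a class name to a (prior, mean, std) triple.
    """
    # Unnormalised scores P(Cj) * P(ti | Cj) for every class.
    scores = {name: prior * gaussian_pdf(x, mean, std)
              for name, (prior, mean, std) in classes.items()}
    total = sum(scores.values())
    # Dividing by the total gives the posterior P(Cj | ti) (Bayes' rule).
    posteriors = {name: score / total for name, score in scores.items()}
    best = max(posteriors, key=posteriors.get)
    return best, posteriors


# Hypothetical priors and Gaussian parameters for two classes.
CLASSES = {"A": (0.5, 2.0, 1.0), "B": (0.5, 6.0, 1.0)}
```

Calling `classify(2.5, CLASSES)` assigns the value to the class whose posterior is highest; the posteriors always sum to 1 because they are normalised over all classes.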

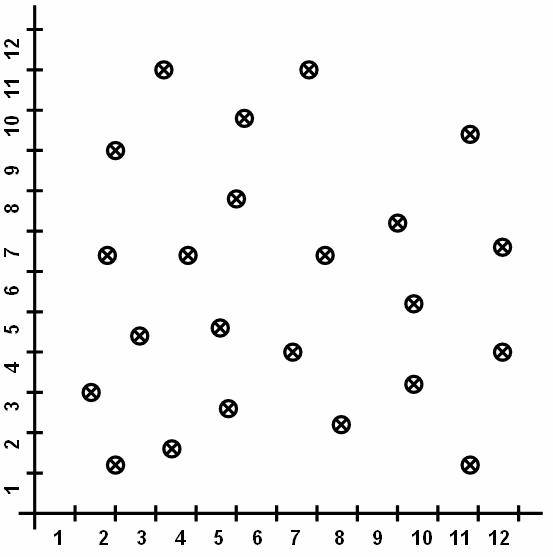

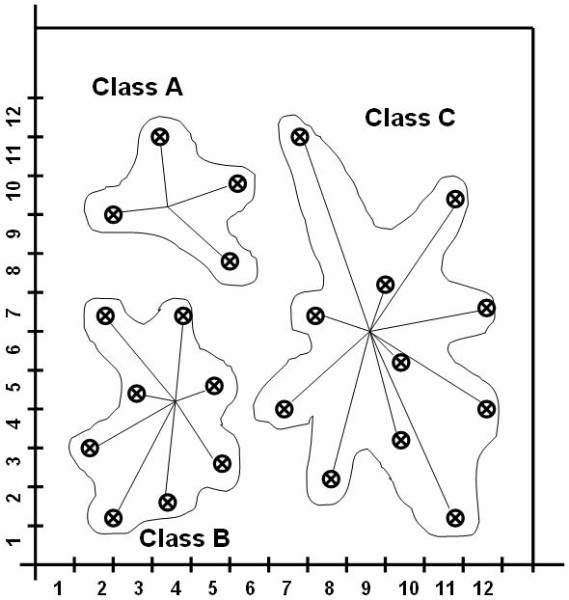

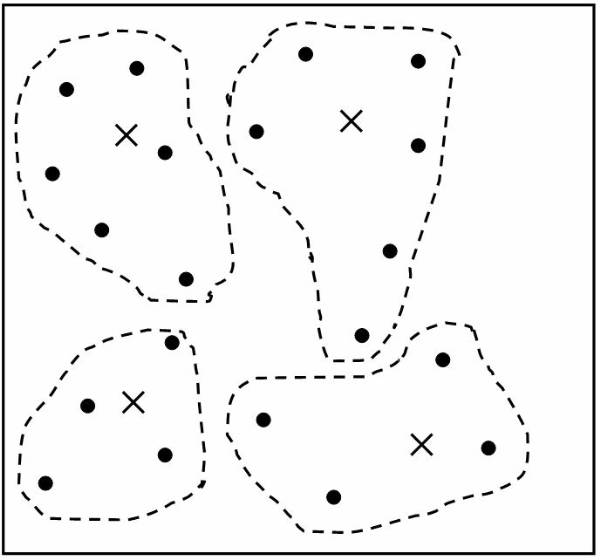

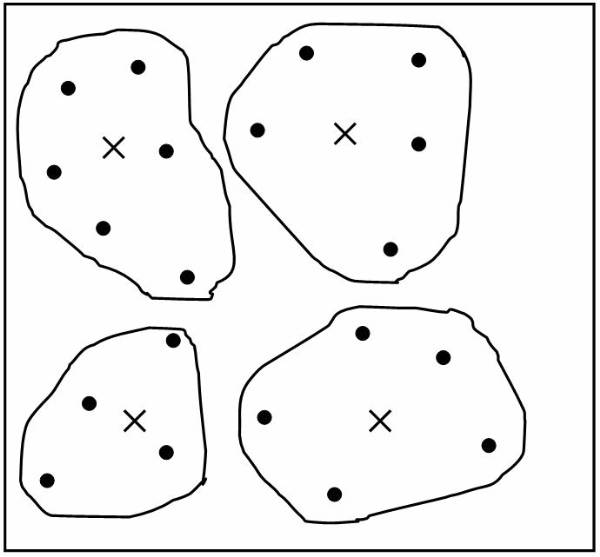

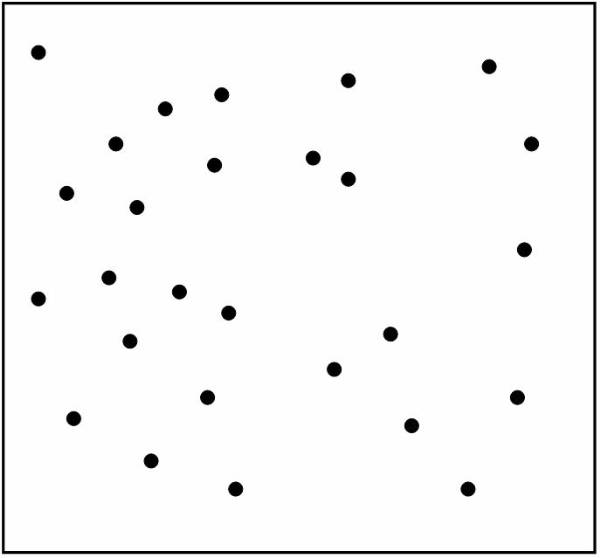

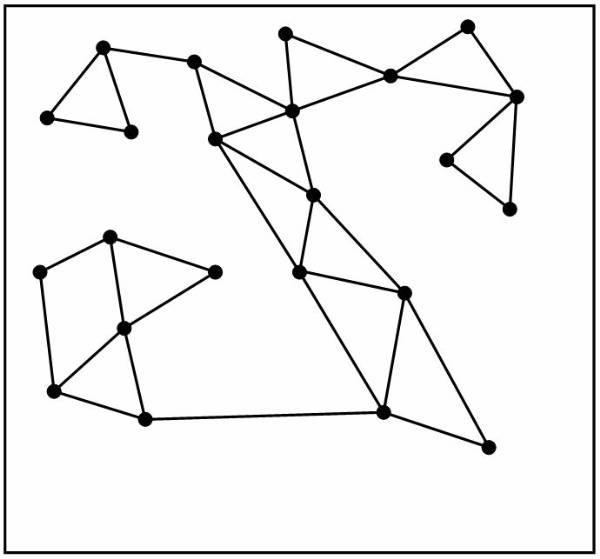

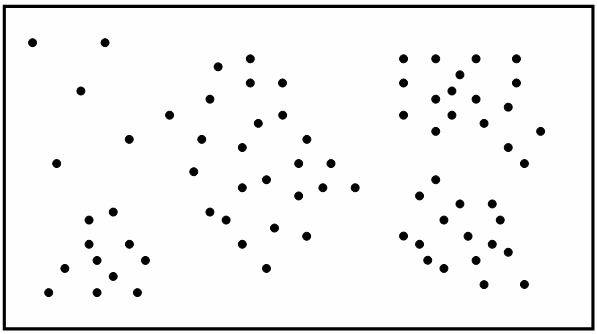

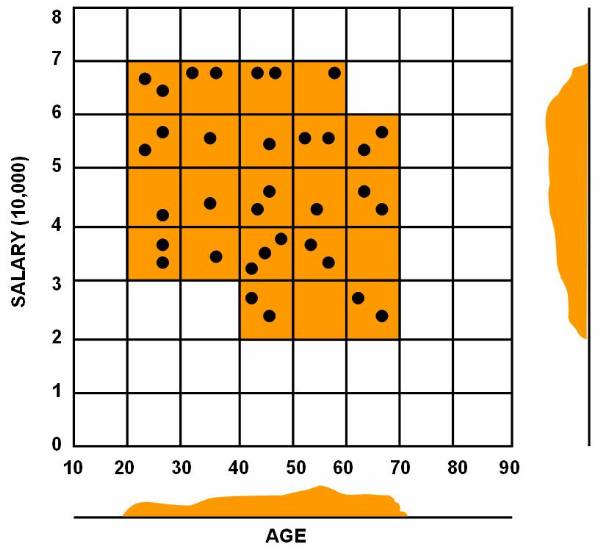

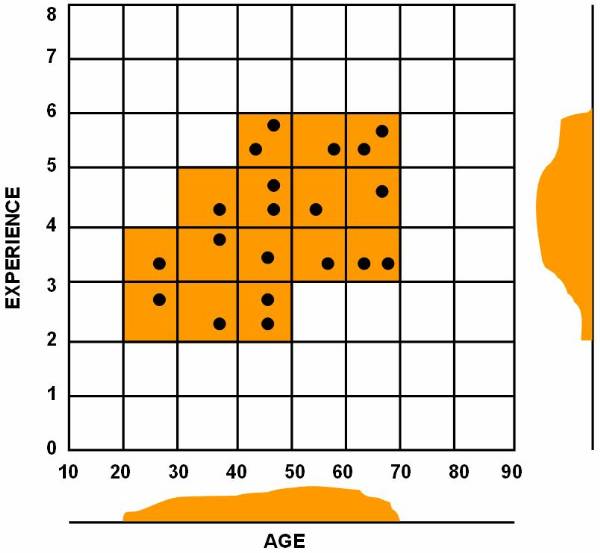

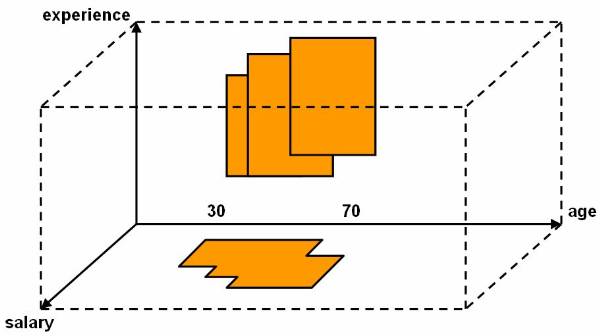

Consider a database based on the values of the

two variables such that the tuple t = [ x, y ] where 0 £ x £ 10 and 0 £ y £ 12. Display the values of x and y

on graph. Figure ![]() shows the points plotted in graph. The

following figure

shows the points plotted in graph. The

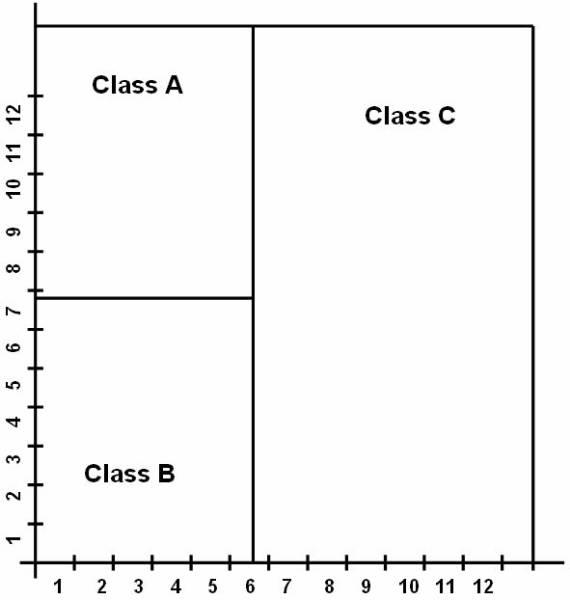

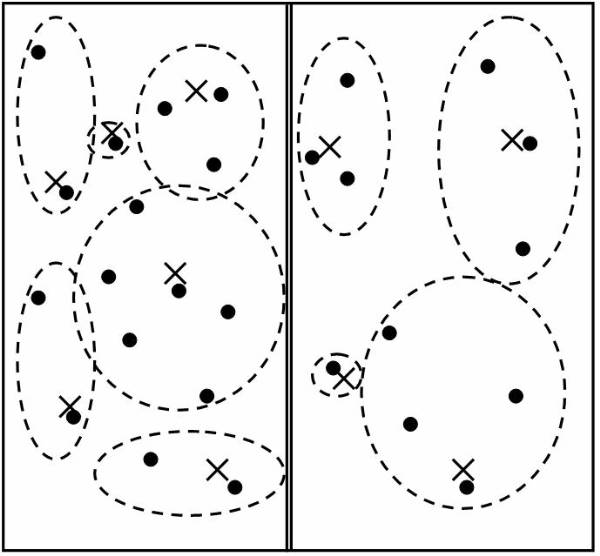

following figure ![]() shows, that the reference classes dividing the

whole reference space into three classes A, B, and C. And this figure

shows, that the reference classes dividing the

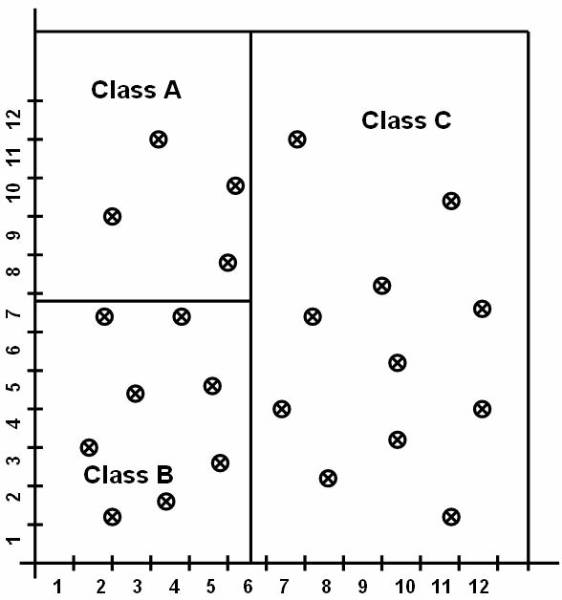

whole reference space into three classes A, B, and C. And this figure ![]() shows the data in the database classified into

three classes.

shows the data in the database classified into

three classes.

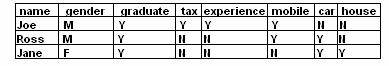

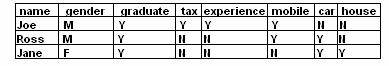

Example:

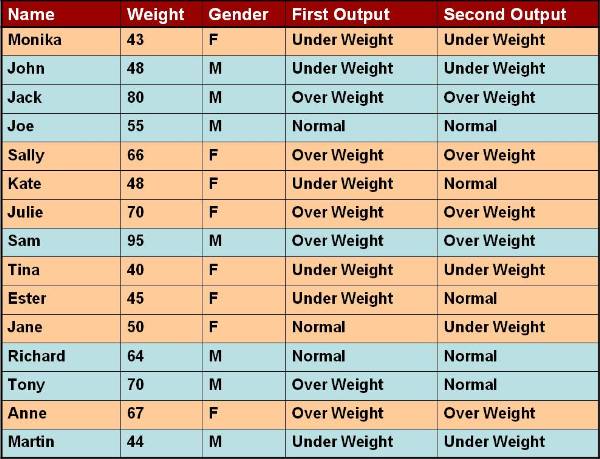

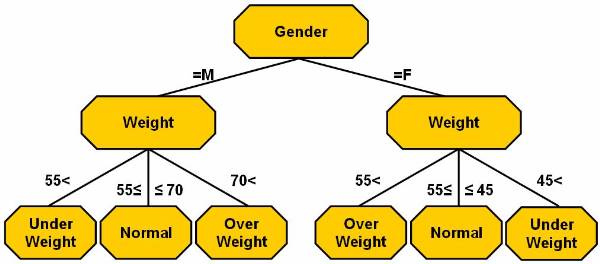

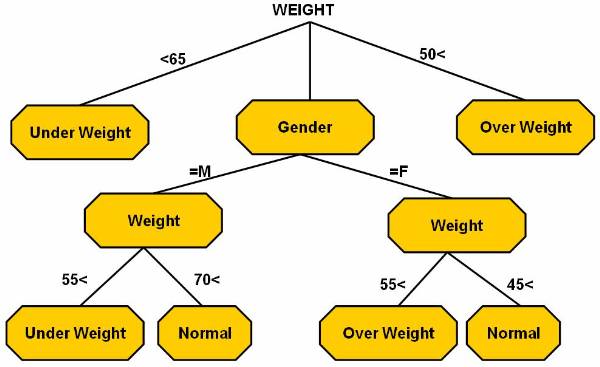

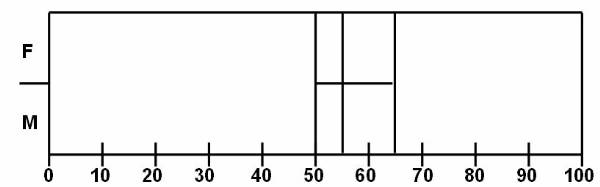

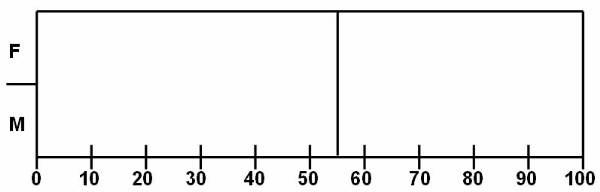

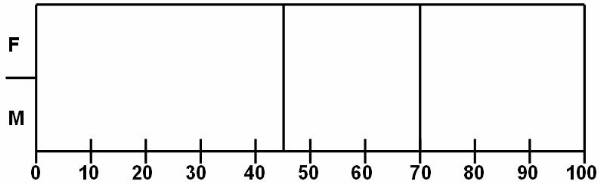

The user can take the weights of different people and classify them into categories. Figure 3.1-4 lists the attributes of the database. The output can be produced in two ways. In the first output, the user is not concerned with the gender of the person. The rules for the output classification are:

65 < weight: overweight

50 ≤ weight ≤ 65: normal

weight < 50: underweight

The second output builds on the first, but the gender of the person must also be considered. The rules are:

male

70 < weight: overweight

55 ≤ weight ≤ 70: normal

weight < 55: underweight

female

55 < weight: overweight

45 ≤ weight ≤ 55: normal

weight < 45: underweight

The classification for the second output is not as simple as that for the first, because it requires more complex classification techniques.
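The two rule sets can be sketched in code as follows. This is a minimal illustration that assumes the normal range in each rule set spans the two thresholds stated for it; the function and constant names are hypothetical.

```python
def classify_weight(weight):
    """First output: gender is ignored."""
    if weight > 65:
        return "overweight"
    if weight >= 50:
        return "normal"
    return "underweight"


# (lower, upper) bounds of the "normal" range for each gender,
# taken from the thresholds in the second rule set.
NORMAL_RANGE = {"male": (55, 70), "female": (45, 55)}


def classify_weight_by_gender(gender, weight):
    """Second output: the thresholds depend on gender."""
    low, high = NORMAL_RANGE[gender]
    if weight > high:
        return "overweight"
    if weight >= low:
        return "normal"
    return "underweight"
```

The same weight can fall into different categories under the two outputs: a weight of 60 is normal when gender is ignored or when the person is male, but overweight under the rules for female.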

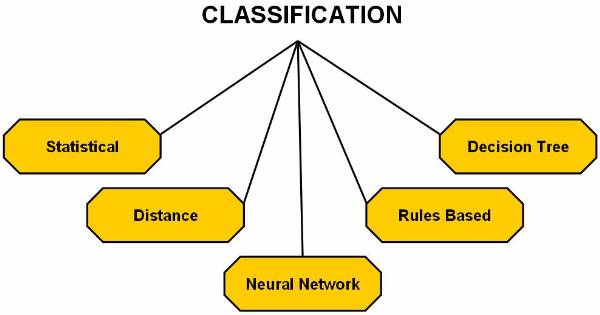

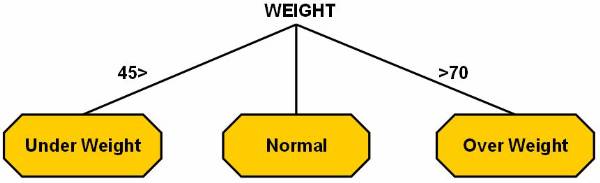

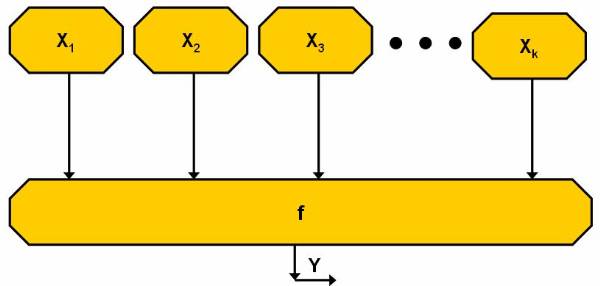

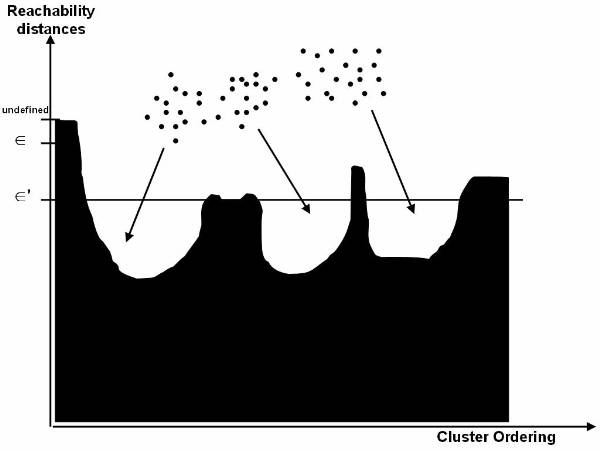

Classification methods can be grouped by the type of algorithm on which they are based, as shown in Figure 3.1-5:

Statistics based algorithms

Distance based algorithms

Decision tree based algorithms

Neural network based algorithms

Rule based algorithms

Figure 3.1-1 Data in Database

Graph with the plotted points

Figure 3.1-2 Reference Classes

Distribution of the classes for the classification purpose.

Figure 3.1-3 Data Items Placed in Different Classes

The data in the database are classified into three classes.

Figure 3.1-4 Database Attributes

List of the attributes of database.

Figure 3.1-5 The Classification Methods as Applied to Data

The Distance Based Algorithms

In distance-based algorithms, an item mapped to a particular class is considered more similar to the items already in that class than to the items in other classes. Similarity (or alikeness) is therefore measured through the distance between items: the smaller the distance, the more alike the items are. The concept of similarity is the same as the one used in string matching and by Internet search engines, where the database being searched is the set of Web pages. A search engine divides Web pages into two classes:

Those that answer the search query given to the search engine.

Those that do not answer the search query given to the search engine.

This reflects the assumption that the Web pages that answer the user's query are more alike to the query than those that do not. The pages retrieved by the search engine are similar in context, as they all contain the keywords of the user's search query.

The concept of similarity can be applied to more general classification methods. The user first decides on the similarity criteria, which are then applied to the database. Most similarity measures depend on discrete numerical values, so they are not applied to databases with abstract data types.

There are two approaches to classification on the basis of distance:

Simple approach

K nearest neighbors

Simple Approach

To understand the simple approach, it is important to know about information retrieval, in which information is retrieved from textual data. Information retrieval was developed to help users retrieve data from libraries. The basic request is to find all library documents related to a particular query, so producing a result requires some kind of comparison that matches documents against the query.

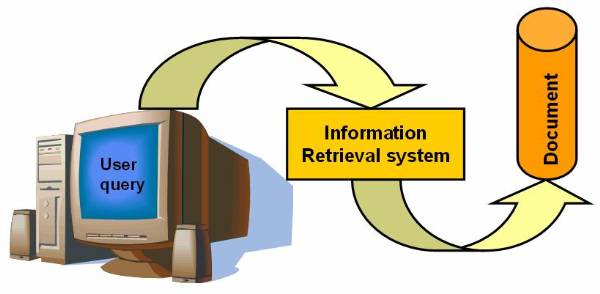

Information Retrieval System

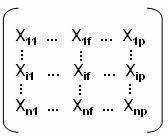

The information retrieval system consists of libraries, each of which stores a set of documents that can be represented as:

D = {D1, D2, ..., Dn}

The input given as a query for the search consists of keywords; the query string can be denoted by q. The similarity between the query and each document Di in the document set is calculated as:

Similarity ( q, Di )

This similarity function is a set membership function that describes how similar each document is to the query string given by the user. The performance of the information retrieval process is measured in two ways: precision and recall.

Precision answers the question: Are the documents retrieved by the matching process the ones I need, and will they give me the required information? The precision equation can be displayed as:

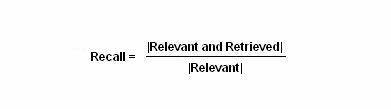

Recall answers the question: Does the matching process retrieve all documents that match the query?

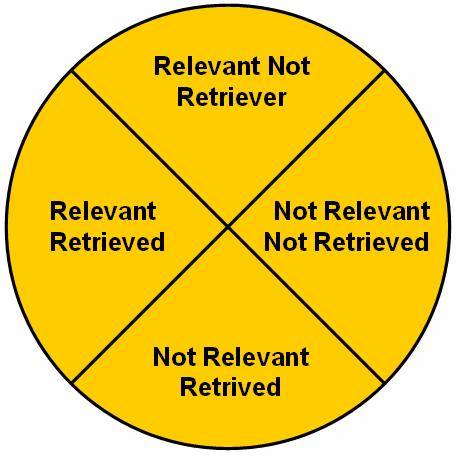
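Using the standard definitions, precision is the fraction of retrieved documents that are relevant, and recall is the fraction of relevant documents that are retrieved. A minimal sketch, with a hypothetical function name and documents identified by arbitrary labels:

```python
def precision_recall(relevant, retrieved):
    """Return (precision, recall) for two collections of document ids."""
    relevant, retrieved = set(relevant), set(retrieved)
    hits = relevant & retrieved  # documents that are both relevant and retrieved
    precision = len(hits) / len(retrieved) if retrieved else 0.0
    recall = len(hits) / len(relevant) if relevant else 0.0
    return precision, recall
```

For example, if four documents are relevant and the query retrieves three documents, two of which are relevant, precision is 2/3 and recall is 1/2.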

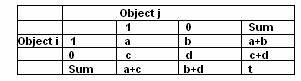

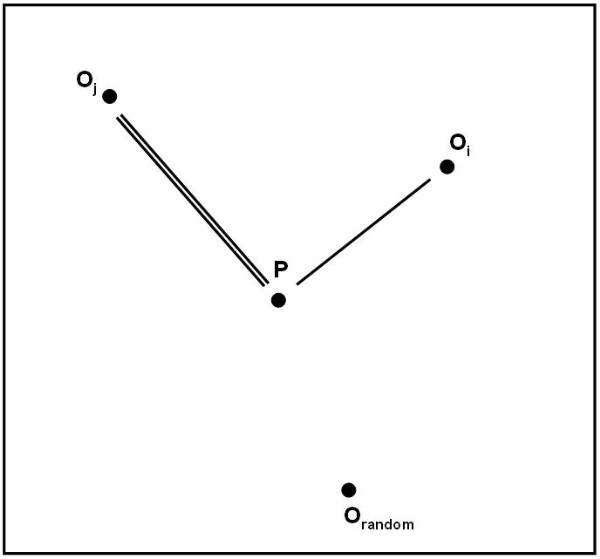

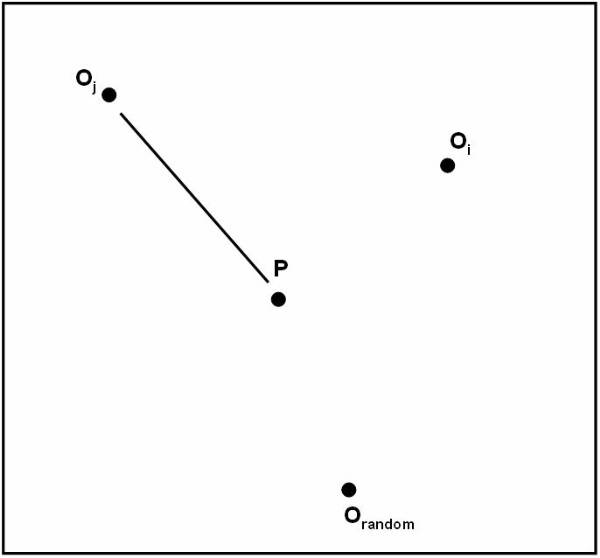

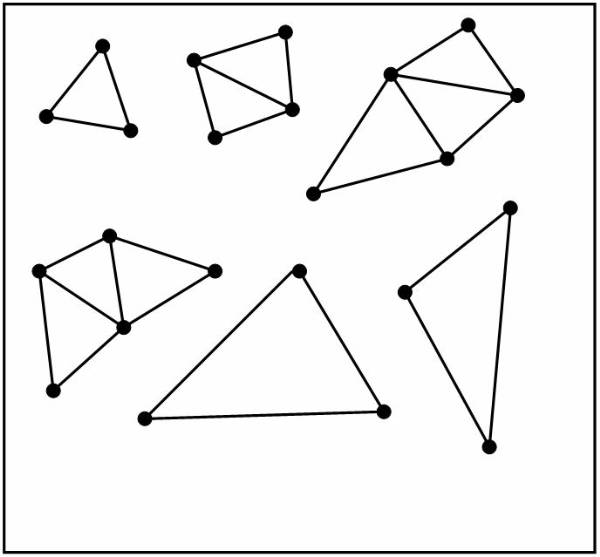

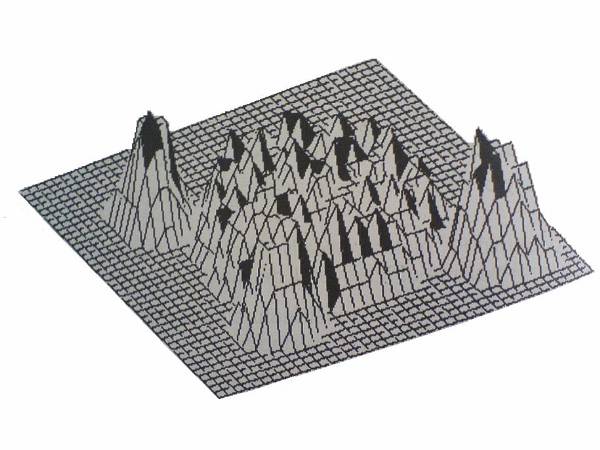

This figure ![]() shows the working of the query processing. And

the following figure

shows the working of the query processing. And

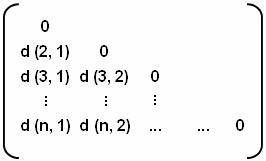

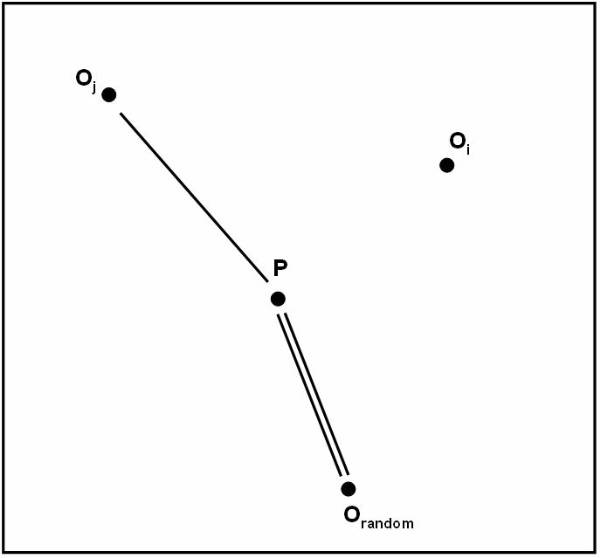

the following figure ![]() displays the four possible results generated

by the information retrieval querying process.

displays the four possible results generated

by the information retrieval querying process.

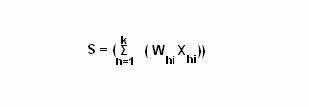

A similarity equation can also be used to cluster the output. The equation to cluster the output is:

Similarity ( qi, Dj)

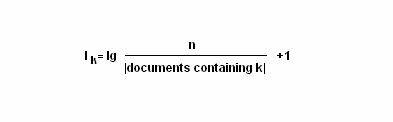

The concept of inverse document frequency (IDF) is used in similarity measures. For example, suppose there are n documents to be searched, k is a keyword in the query string, and I denotes the inverse document frequency. The equation to represent the inverse document frequency is:
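One common formulation of inverse document frequency, assumed here rather than taken from the text, is I = log2(n / number of documents containing k). The sketch below implements that assumed form; the function name and the whitespace-based tokenisation are illustrative choices only.

```python
from math import log2


def inverse_document_frequency(keyword, documents):
    """IDF of a keyword over a list of document strings (assumed log2 form)."""
    n = len(documents)
    # Count the documents in which the keyword occurs as a whole word.
    containing = sum(1 for doc in documents if keyword in doc.lower().split())
    if containing == 0:
        return 0.0  # convention chosen here for keywords that never occur
    return log2(n / containing)
```

A keyword that occurs in few documents receives a higher value than one that occurs in many, so rare keywords weigh more heavily in the similarity measure.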

The information retrieval approach enables the user to associate each distinct class with a representative vector. For example, a tuple ti is represented as a vector:

ti = <ti1, ti2, ti3, ..., tik>

A class is likewise defined as a vector:

Cj = <Cj1, Cj2, Cj3, ..., Cjk>

The classification problem can then be defined as follows: given a database of tuples D = {t1, t2, ..., tn}, where ti = <ti1, ti2, ti3, ..., tik>, and a set of classes C = {C1, C2, ..., Cm}, where Cj = <Cj1, Cj2, Cj3, ..., Cjk>, assign each ti to the class Cj such that ti is at least as similar to Cj as to every other class Cl ≠ Cj.

To calculate the similarity measures, the user needs to determine the representative of each class. The condition for assigning ti to Cj is:

similarity (ti, Cj) ≥ similarity (ti, Cl) for all Cl ∈ C

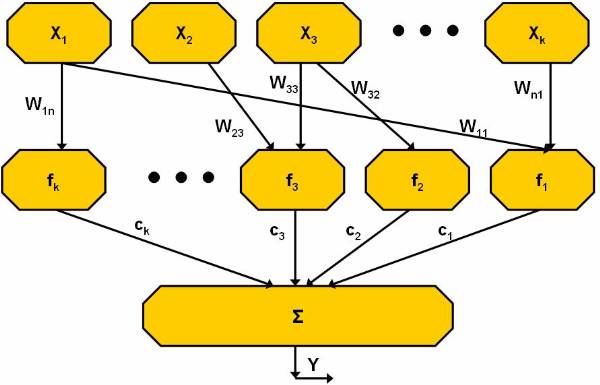

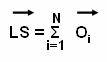

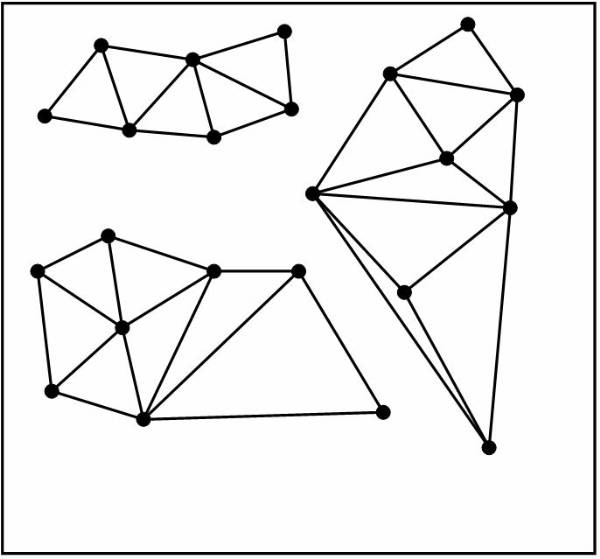

Figure 3.2-3 shows the classes with their respective representative points, as used for classification.

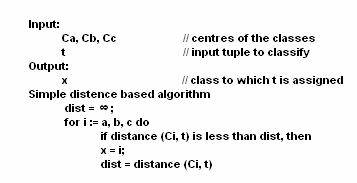

The simple distance-based algorithm assumes that each class is represented by its centre. For example, the centre of Class A can be denoted Ca, the centre of Class B Cb, and the centre of Class C Cc. Simple classification can be performed with the following pseudo code:

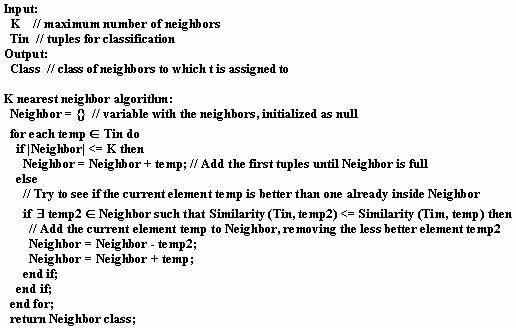
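A minimal runnable sketch of the simple approach is given below. It assumes Euclidean distance as the measure and uses hypothetical centre coordinates for classes A, B, and C; neither choice comes from the text.

```python
from math import dist  # Euclidean distance, available from Python 3.8


def nearest_class(t, centers):
    """Assign tuple t to the class whose centre is closest to it."""
    return min(centers, key=lambda name: dist(t, centers[name]))


# Hypothetical centres Ca, Cb, and Cc for the three classes.
CENTERS = {"A": (2.0, 9.0), "B": (5.0, 3.0), "C": (8.0, 9.0)}
```

Each tuple is compared with one representative per class rather than with every item in the database, so the cost of classifying a tuple grows with the number of classes, not with the size of the database.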

The K Nearest Neighbors

K nearest neighbors (KNN) is a commonly used classification method based on distance measures. The technique assumes that the training set contains not only the data but also the classification of each item in it.

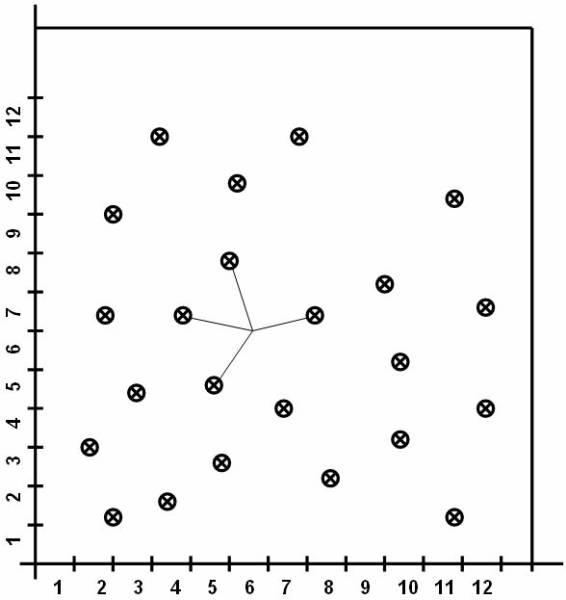

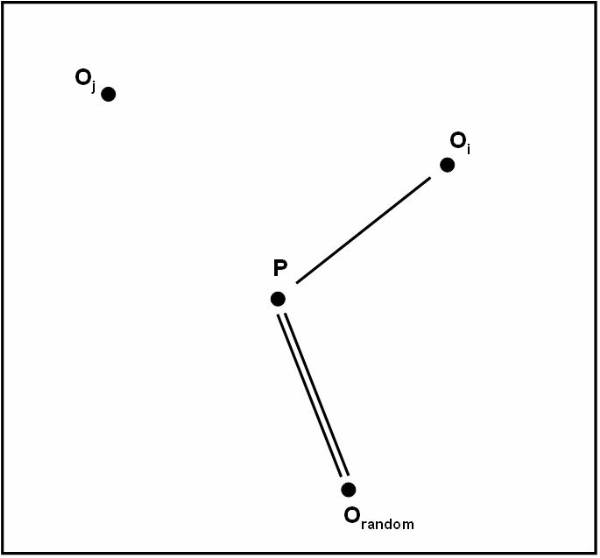

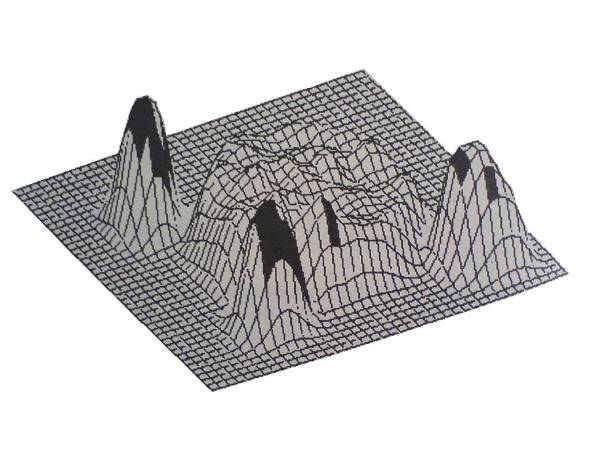

When a new item is to be classified, its distance to the items in the training set is determined, and only the K closest items are considered further. The new item is placed in the class that contains the most of these K closest items. Figure 3.2-4 shows the training points for K = 4.

The pseudo code to perform K nearest neighbor classification is:
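The following is a minimal sketch of K nearest neighbor classification, not a reproduction of the original pseudo code. It assumes Euclidean distance and a simple majority vote; the training points and labels are hypothetical.

```python
from collections import Counter
from math import dist  # Euclidean distance, available from Python 3.8


def knn_classify(t, training, k=4):
    """Classify t by majority vote among its k nearest training items.

    training is a list of (point, class_label) pairs.
    """
    # Keep only the k training items closest to t.
    nearest = sorted(training, key=lambda item: dist(t, item[0]))[:k]
    # Count how many of those k items belong to each class.
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Hypothetical labelled training points.
TRAINING = [((1, 1), "A"), ((1, 2), "A"), ((2, 1), "A"),
            ((6, 6), "B"), ((7, 6), "B"), ((6, 7), "B")]
```

With K = 4, the point (2, 2) has three neighbors in class A and one in class B, so it is placed in class A. How a tie between classes is broken is left unspecified in this sketch.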

Figure 3.2-1 Working of the Query Processing

Figure 3.2-2 Four Possibilities of the Querying Process

Figure 3.2-3 Representation of Classes for Classification

Figure 3.2-4 Training Points

Training points as K=4.

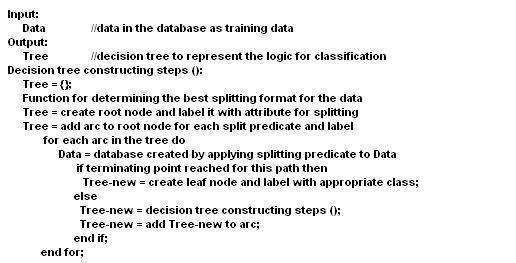

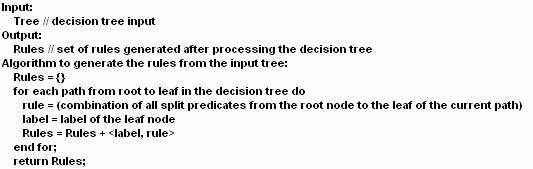

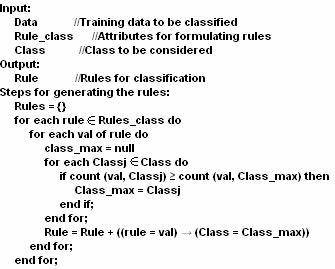

The Decision Tree Based Algorithms

The decision tree method of classification is based on constructing a tree that models the classification process. Once the complete tree is constructed, it is applied to each tuple in the database to classify it. Two steps are involved:

Building the tree, called the decision tree.

Applying the decision tree to the database.

In this approach, classification is performed by dividing the search space into rectangular regions. A tuple is classified on the basis of the region into which it falls. A decision tree can be defined as follows:

Given a database of tuples D = {t1, t2, ..., tn}, where ti = <ti1, ti2, ti3, ..., tik>, a database schema containing the set of attributes A = {A1, A2, ..., Ak}, and a set of classes C = {C1, C2, ..., Cm}, where Cj = <Cj1, Cj2, Cj3, ..., Cjk>,

a decision tree is a tree associated with D that has the following properties:

Each internal node is labelled with an attribute Ai.

Each leaf node is labelled with a class Cj.

Each arc is labelled with a predicate that can be applied to the attribute associated with its parent.

Classification using a decision tree consists of two parts:

Induction: construct a decision tree from the data in the database or from training data.

For each tuple ti ∈ D, apply the decision tree to determine its class.

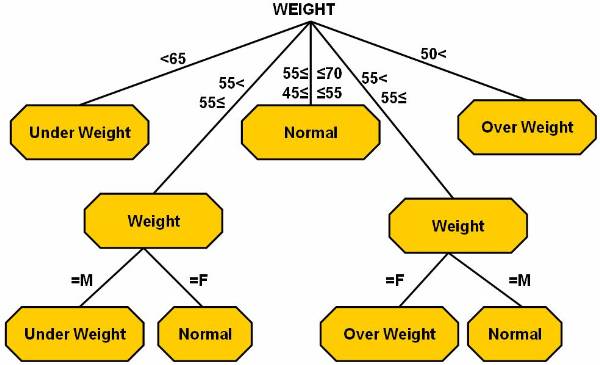

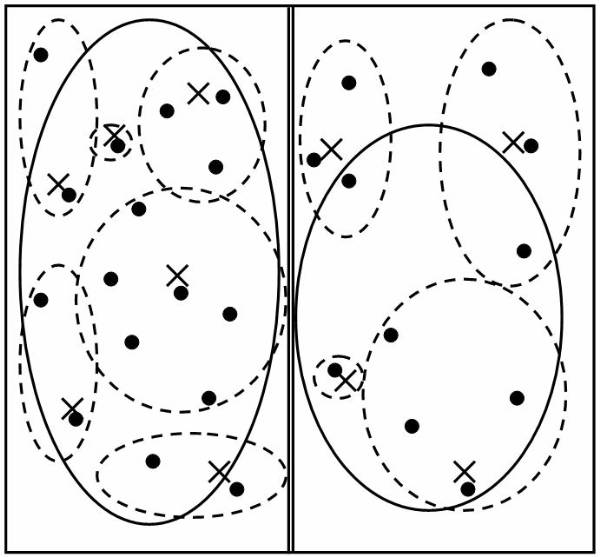

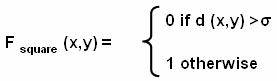

The decision tree in the figure above represents the logic for mapping.

The pseudo code to represent the decision tree method is:

In the above pseudo code, splitting attributes are used to label the nodes, and the splitting predicates are represented by arcs. The splitting predicates for gender are male and female, and those for weight are comparisons against weight thresholds. The training data can be formulated in different ways, as shown in the following figures. The first of them shows the decision tree.
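Applying a decision tree to a tuple can be sketched as follows. The tree is built by hand from the gender and weight rules given earlier, not induced from training data, and its representation (tuples of attribute names and predicate/child pairs) is an illustrative choice rather than the method of the original pseudo code.

```python
# Internal nodes are (attribute, [(predicate, child), ...]) pairs;
# leaf nodes are class labels stored as strings.
def weight_subtree(low, high):
    """Subtree that splits on weight using the given normal range."""
    return ("weight", [
        (lambda w: w > high, "overweight"),
        (lambda w: low <= w <= high, "normal"),
        (lambda w: w < low, "underweight"),
    ])


TREE = ("gender", [
    (lambda g: g == "male", weight_subtree(55, 70)),
    (lambda g: g == "female", weight_subtree(45, 55)),
])


def apply_tree(node, record):
    """Follow the arcs whose predicates hold until a leaf (class) is reached."""
    while not isinstance(node, str):
        attribute, arcs = node
        value = record[attribute]
        # Take the first arc whose splitting predicate is satisfied.
        node = next(child for predicate, child in arcs if predicate(value))
    return node
```

Each internal node corresponds to a splitting attribute and each arc to a splitting predicate. A record such as `{"gender": "male", "weight": 72}` follows the male arc and then the arc for weights above 70, ending at the leaf overweight.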

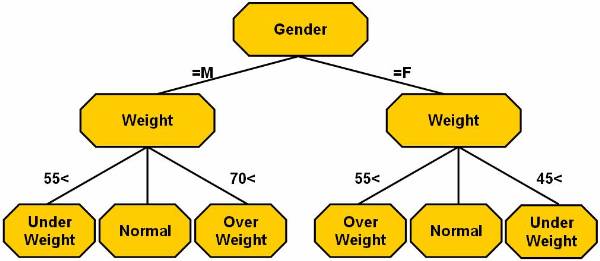

The balanced tree is a type of decision tree used to analyse the data present in the database; the rectangular splitting of the database region follows the balanced tree. A balanced tree has the same depth regardless of the path taken from the root node to a leaf node.

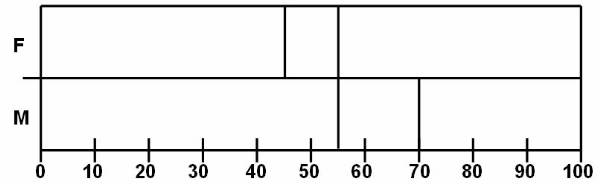

A deep tree is used for classifying training data. The figure shows the deep decision tree used for the weight classification; the rectangular splitting of the database region also follows the deep tree.

A